Update README.md


README.md

CHANGED

|

@@ -8,23 +8,26 @@ license: creativeml-openrail-m

|

|

| 8 |

|

| 9 |

This is a low-quality bocchi-the-rock (ぼっち・ざ・ろっく!) character model.

|

| 10 |

Similar to my [yama-no-susume model](https://huggingface.co/alea31415/yama-no-susume), this model is capable of generating **multi-character scenes** beyond images of a single character.

|

| 11 |

-

Of course, the result is still hit-or-miss, but I

|

| 12 |

and otherwise, you can always rely on inpainting.

|

| 13 |

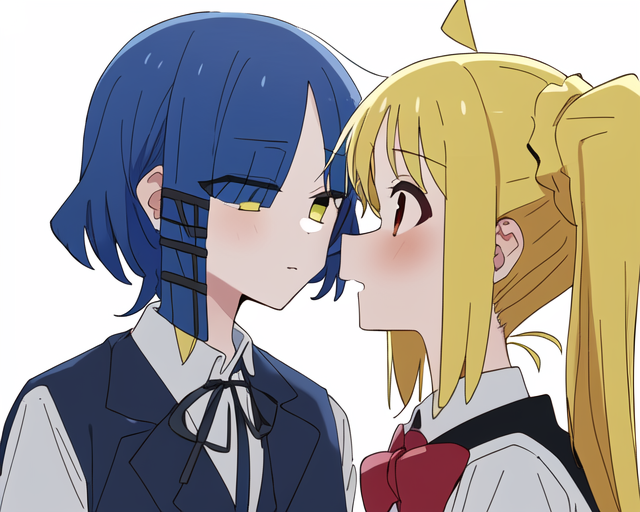

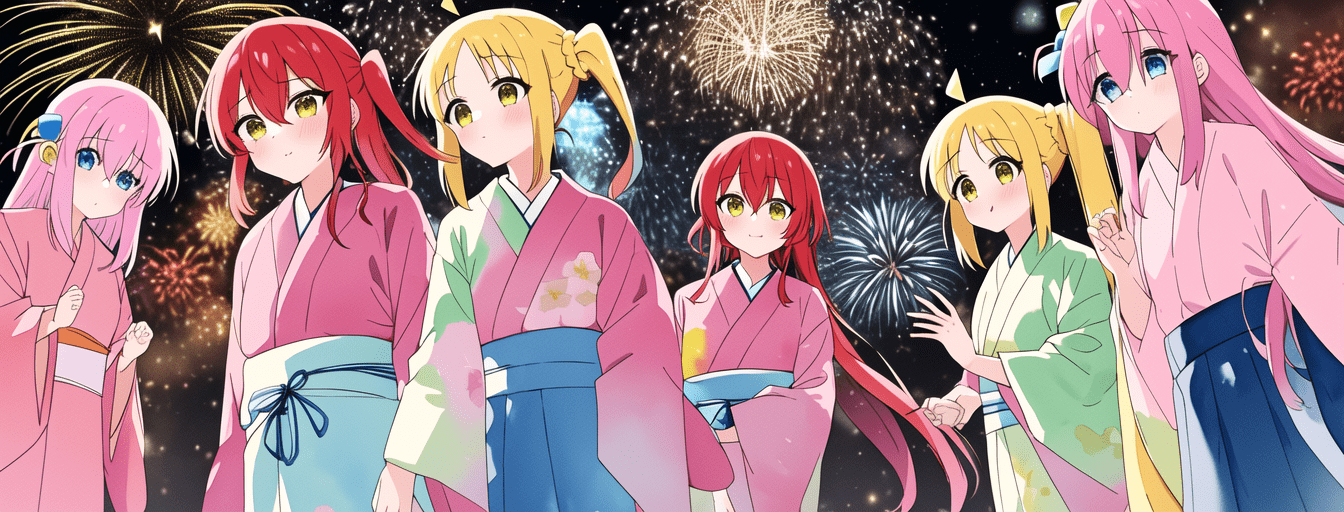

Here are two examples:

|

| 14 |

|

| 15 |

With inpainting

|

| 16 |

-

|

| 17 |

|

| 18 |

Without inpainting

|

| 19 |

-

|

| 20 |

|

| 21 |

|

| 22 |

### Characters

|

| 23 |

|

| 24 |

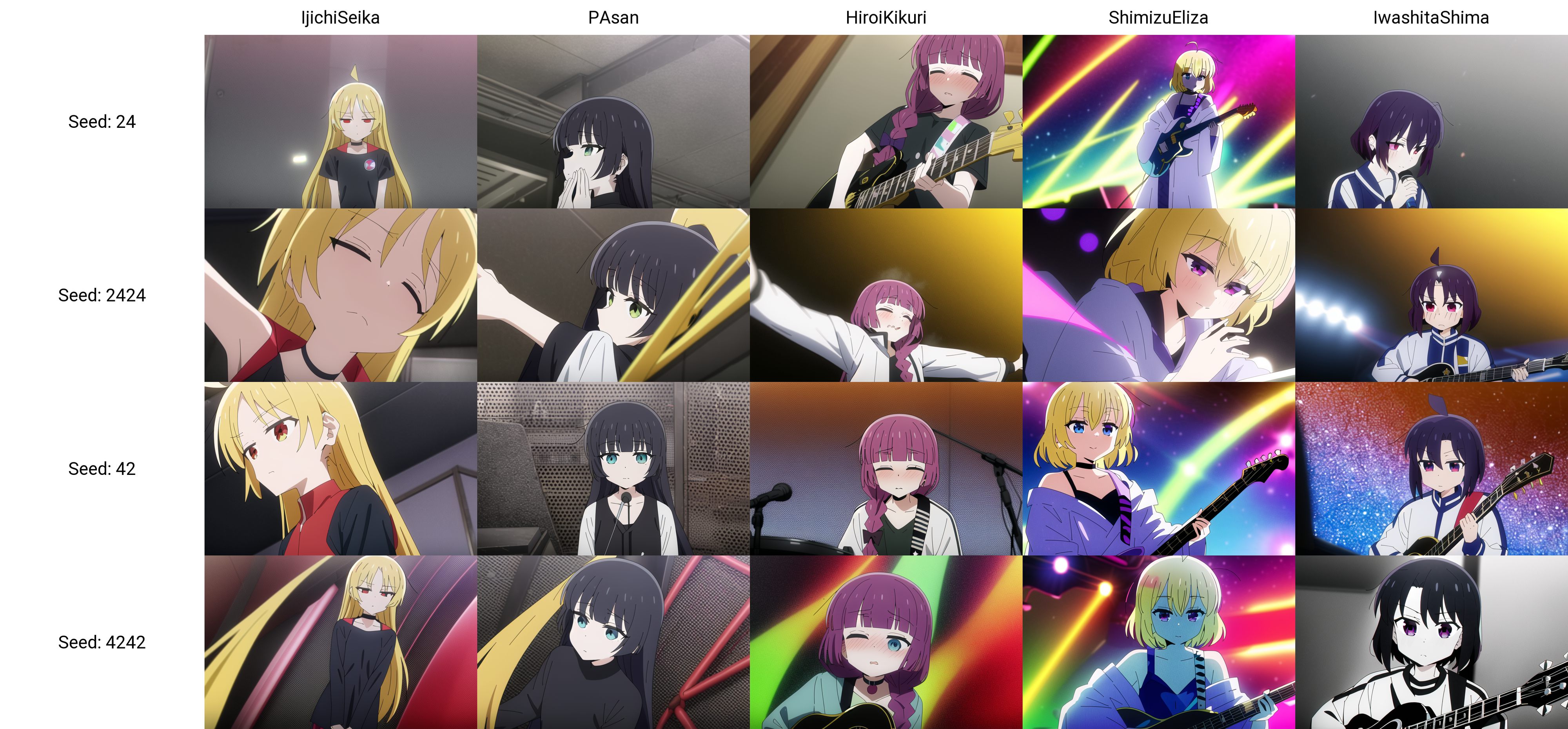

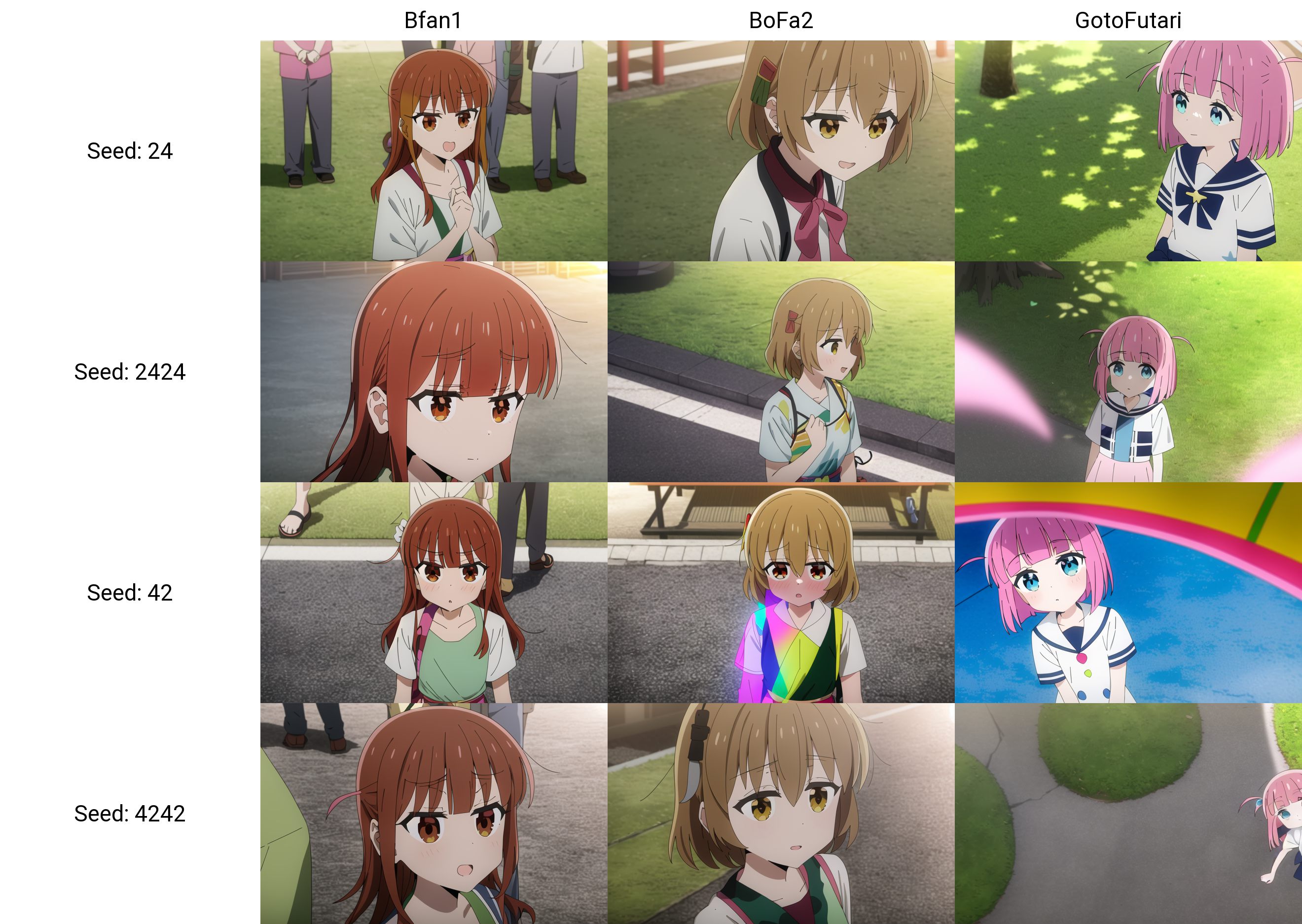

The model knows 12 characters from bocchi the rock.

|

| 25 |

-

The resemblance to a character can be improved by describing their appearance in more detail.

|

|

|

|

|

|

|

|

|

|

|

|

|

| 26 |

|

| 27 |

-

*Coming soon*

|

| 28 |

|

| 29 |

### Dataset description

|

| 30 |

|

|

@@ -51,7 +54,7 @@ The model is trained on runpod using 3090 and cost me around 15 dollars.

|

|

| 51 |

|

| 52 |

#### Hyperparameter specification

|

| 53 |

|

| 54 |

-

|

| 55 |

|

| 56 |

Note that, because the weighting scheme translates into a different multiplier for each image,

|

| 57 |

the repeat and epoch counts have a rather different meaning here.

|

|

@@ -61,16 +64,33 @@ and therefore I did not even finish an entire epoch with the 48000 steps at batc

|

|

| 61 |

### Failures

|

| 62 |

|

| 63 |

- For the first 24000 steps I used the trigger words `Bfan1` and `Bfan2` for the two fans of Bocchi.

|

| 64 |

-

However, these two words are too similar and the model fails to distinguish the two characters.

|

|

|

|

|

|

|

|

|

|

|

|

|

| 65 |

|

| 66 |

|

| 67 |

### More Example Generations

|

| 68 |

|

| 69 |

With inpainting

|

| 70 |

-

|

|

|

|

|

|

|

|

|

|

| 71 |

|

| 72 |

Without inpainting

|

| 73 |

-

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

| 74 |

|

| 75 |

Some failure cases

|

| 76 |

-

|

|

|

|

|

|

|

|

|

|

|

|

| 8 |

|

| 9 |

This is a low-quality bocchi-the-rock (ぼっち・ざ・ろっく!) character model.

|

| 10 |

Similar to my [yama-no-susume model](https://huggingface.co/alea31415/yama-no-susume), this model is capable of generating **multi-character scenes** beyond images of a single character.

|

| 11 |

+

Of course, the result is still hit-or-miss, but with some luck you can get the entire Kessoku Band right in one shot,

|

| 12 |

and otherwise, you can always rely on inpainting.

|

| 13 |

Here are two examples:

|

| 14 |

|

| 15 |

With inpainting

|

| 16 |

+

|

| 17 |

|

| 18 |

Without inpainting

|

| 19 |

+

|

| 20 |

|

| 21 |

|

| 22 |

### Characters

|

| 23 |

|

| 24 |

The model knows 12 characters from bocchi the rock.

|

| 25 |

+

The resemblance to a character can be improved by describing their appearance in more detail (for example, by adding long wavy hair to ShimizuEliza).
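As a rough illustration of the tip above, a prompt can pair a character's trigger word with a short appearance description. This is only a sketch: the helper name `build_prompt` and the comma-separated tag format are assumptions made for the example, and only `ShimizuEliza` and `long wavy hair` come from the README itself.

```python
def build_prompt(trigger_word, appearance_tags=(), extra_tags=()):
    """Join a character trigger word with optional appearance and scene tags.

    Hypothetical helper: the README does not prescribe a prompt format.
    """
    parts = [trigger_word, *appearance_tags, *extra_tags]
    # Drop empty entries and strip surrounding whitespace before joining.
    return ", ".join(p.strip() for p in parts if p and p.strip())


# Trigger word alone versus trigger word plus an appearance hint.
plain = build_prompt("ShimizuEliza")
detailed = build_prompt("ShimizuEliza", appearance_tags=["long wavy hair"])

print(plain)
print(detailed)
```

The second prompt is the variant suggested above; whether it actually helps for a given character still has to be checked by generating images.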

|

| 26 |

+

|

| 27 |

+

|

| 28 |

+

|

| 29 |

+

|

| 30 |

|

|

|

|

| 31 |

|

| 32 |

### Dataset description

|

| 33 |

|

|

|

|

| 54 |

|

| 55 |

#### Hyperparameter specification

|

| 56 |

|

| 57 |

+

The model is trained for 48000 steps, at batch size 4, lr 1e-6, resolution 512, and conditional dropping rate of 10%.

|

| 58 |

|

| 59 |

Note that, because the weighting scheme translates into a different multiplier for each image,

|

| 60 |

the repeat and epoch counts have a rather different meaning here.
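To make the numbers above concrete, here is a small sketch that records the stated hyperparameters and works out how many training samples they correspond to. The class and function names are hypothetical. Reading the "conditional dropping rate" as replacing a caption with an empty string 10% of the time is also an assumption; the README does not say how the dropping is implemented.

```python
import random
from dataclasses import dataclass


@dataclass(frozen=True)
class TrainConfig:
    # Values taken from the README; field names are illustrative.
    max_steps: int = 48_000
    batch_size: int = 4
    learning_rate: float = 1e-6
    resolution: int = 512
    caption_dropout_rate: float = 0.10  # the "conditional dropping rate"


def samples_seen(cfg):
    """Total number of (possibly repeated) images drawn during training."""
    return cfg.max_steps * cfg.batch_size


def maybe_drop_caption(caption, rate, rng):
    """Assumed behaviour: with probability `rate`, train on an empty caption."""
    return "" if rng.random() < rate else caption


cfg = TrainConfig()
print(samples_seen(cfg))
```

Because each image is repeated according to its weight, this sample count says how many images were drawn in total, not how many passes were made over the unweighted dataset.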

|

|

|

|

| 64 |

### Failures

|

| 65 |

|

| 66 |

- For the first 24000 steps I used the trigger words `Bfan1` and `Bfan2` for the two fans of Bocchi.

|

| 67 |

+

However, these two words are too similar and the model fails to distinguish the two characters.

|

| 68 |

+

Therefore I changed `Bfan2` to `Bofa2` at step 24000. This seemed to solve the problem.

|

| 69 |

+

- Character blending is always an issue.

|

| 70 |

+

- When prompting the four characters of Kessoku Band we often get side shots.

|

| 71 |

+

I think this is because of some overfitting to a particular image.
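The trigger-word issue in the first item can be roughly illustrated by comparing the strings themselves. The sketch below uses Python's `difflib.SequenceMatcher` as a crude character-level proxy. This is an assumption made for illustration: how the model actually confuses the words likely depends on how the text encoder tokenizes them, which the README does not describe.

```python
from difflib import SequenceMatcher


def similarity(a, b):
    """Character-level similarity in [0, 1]; a proxy, not a tokenizer-level measure."""
    return SequenceMatcher(None, a, b).ratio()


# Original pair of trigger words versus the pair used after step 24000.
old_pair = similarity("Bfan1", "Bfan2")
new_pair = similarity("Bfan1", "Bofa2")

print(f"Bfan1 vs Bfan2: {old_pair:.2f}")
print(f"Bfan1 vs Bofa2: {new_pair:.2f}")
```

A lower score for the renamed pair is consistent with the observation above, but it does not prove that the rename is what fixed the problem.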

|

| 72 |

|

| 73 |

|

| 74 |

### More Example Generations

|

| 75 |

|

| 76 |

With inpainting

|

| 77 |

+

|

| 78 |

+

|

| 79 |

+

|

| 80 |

+

|

| 81 |

|

| 82 |

Without inpainting

|

| 83 |

+

|

| 84 |

+

|

| 85 |

+

|

| 86 |

+

|

| 87 |

+

|

| 88 |

+

|

| 89 |

+

|

| 90 |

+

|

| 91 |

|

| 92 |

Some failure cases

|

| 93 |

+

|

| 94 |

+

|

| 95 |

+

|

| 96 |

+

|