Commit

·

13b72f5

1

Parent(s):

8892bba

init commit

Browse files- LICENSE.md +43 -0

- README.md +157 -0

- dynpose_100k.zip +3 -0

- environment.yml +45 -0

- scripts/extract_frames.py +94 -0

- scripts/visualize_pose.py +334 -0

- teaser.png +3 -0

LICENSE.md

ADDED

|

@@ -0,0 +1,43 @@

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

| 1 |

+

## NVIDIA License for DynPose-100K

|

| 2 |

+

|

| 3 |

+

### 1. Definitions

|

| 4 |

+

|

| 5 |

+

- “Licensor” means any person or entity that distributes its Work.

|

| 6 |

+

- “Work” means (a) the original work of authorship made available under this license, which may include software, documentation, or other files, and (b) any additions to or derivative works thereof that are made available under this license.

|

| 7 |

+

- The terms “reproduce,” “reproduction,” “derivative works,” and “distribution” have the meaning as provided under U.S. copyright law; provided, however, that for the purposes of this license, derivative works shall not include works that remain separable from, or merely link (or bind by name) to the interfaces of, the Work.

|

| 8 |

+

- Works are “made available” under this license by including in or with the Work either (a) a copyright notice referencing the applicability of this license to the Work, or (b) a copy of this license.

|

| 9 |

+

|

| 10 |

+

### 2. License Grant

|

| 11 |

+

|

| 12 |

+

#### 2.1 Copyright Grant.

|

| 13 |

+

Subject to the terms and conditions of this license, each Licensor grants to you a perpetual, worldwide, non-exclusive, royalty-free, copyright license to use, reproduce, prepare derivative works of, publicly display, publicly perform, sublicense and distribute its Work and any resulting derivative works in any form.

|

| 14 |

+

|

| 15 |

+

### 3. Limitations

|

| 16 |

+

|

| 17 |

+

#### 3.1 Redistribution.

|

| 18 |

+

You may reproduce or distribute the Work only if (a) you do so under this license, (b) you include a complete copy of this license with your distribution, and (c) you retain without modification any copyright, patent, trademark, or attribution notices that are present in the Work.

|

| 19 |

+

|

| 20 |

+

#### 3.2 Derivative Works.

|

| 21 |

+

You may specify that additional or different terms apply to the use, reproduction, and distribution of your derivative works of the Work (“Your Terms”) only if (a) Your Terms provide that the use limitation in Section 3.3 applies to your derivative works, and (b) you identify the specific derivative works that are subject to Your Terms. Notwithstanding Your Terms, this license (including the redistribution requirements in Section 3.1) will continue to apply to the Work itself.

|

| 22 |

+

|

| 23 |

+

#### 3.3 Use Limitation.

|

| 24 |

+

The Work and any derivative works thereof only may be used or intended for use non-commercially. Notwithstanding the foregoing, NVIDIA Corporation and its affiliates may use the Work and any derivative works commercially. As used herein, “non-commercially” means for non-commercial research purposes only.

|

| 25 |

+

|

| 26 |

+

#### 3.4 Patent Claims.

|

| 27 |

+

If you bring or threaten to bring a patent claim against any Licensor (including any claim, cross-claim or counterclaim in a lawsuit) to enforce any patents that you allege are infringed by any Work, then your rights under this license from such Licensor (including the grant in Section 2.1) will terminate immediately.

|

| 28 |

+

|

| 29 |

+

#### 3.5 Trademarks.

|

| 30 |

+

This license does not grant any rights to use any Licensor’s or its affiliates’ names, logos, or trademarks, except as necessary to reproduce the notices described in this license.

|

| 31 |

+

|

| 32 |

+

#### 3.6 Termination.

|

| 33 |

+

If you violate any term of this license, then your rights under this license (including the grant in Section 2.1) will terminate immediately.

|

| 34 |

+

|

| 35 |

+

### 4. Disclaimer of Warranty.

|

| 36 |

+

|

| 37 |

+

THE WORK IS PROVIDED “AS IS” WITHOUT WARRANTIES OR CONDITIONS OF ANY KIND, EITHER EXPRESS OR IMPLIED, INCLUDING WARRANTIES OR CONDITIONS OF

|

| 38 |

+

MERCHANTABILITY, FITNESS FOR A PARTICULAR PURPOSE, TITLE OR NON-INFRINGEMENT. YOU BEAR THE RISK OF UNDERTAKING ANY ACTIVITIES UNDER THIS LICENSE.

|

| 39 |

+

|

| 40 |

+

### 5. Limitation of Liability.

|

| 41 |

+

|

| 42 |

+

EXCEPT AS PROHIBITED BY APPLICABLE LAW, IN NO EVENT AND UNDER NO LEGAL THEORY, WHETHER IN TORT (INCLUDING NEGLIGENCE), CONTRACT, OR OTHERWISE SHALL ANY LICENSOR BE LIABLE TO YOU FOR DAMAGES, INCLUDING ANY DIRECT, INDIRECT, SPECIAL, INCIDENTAL, OR CONSEQUENTIAL DAMAGES ARISING OUT OF OR RELATED TO THIS LICENSE, THE USE OR INABILITY TO USE THE WORK (INCLUDING BUT NOT LIMITED TO LOSS OF GOODWILL, BUSINESS INTERRUPTION, LOST PROFITS OR DATA, COMPUTER FAILURE OR MALFUNCTION, OR ANY OTHER DAMAGES OR LOSSES), EVEN IF THE LICENSOR HAS BEEN ADVISED OF THE POSSIBILITY OF SUCH DAMAGES.

|

| 43 |

+

|

README.md

ADDED

|

@@ -0,0 +1,157 @@

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

| 1 |

+

---

|

| 2 |

+

license: other

|

| 3 |

+

language:

|

| 4 |

+

- en

|

| 5 |

+

pretty_name: dynpose-100k

|

| 6 |

+

size_categories:

|

| 7 |

+

- 100K<n<1M

|

| 8 |

+

task_categories:

|

| 9 |

+

- other

|

| 10 |

+

---

|

| 11 |

+

# DynPose-100K

|

| 12 |

+

|

| 13 |

+

**[Dynamic Camera Poses and Where to Find Them](https://research.nvidia.com/labs/dir/dynpose-100k)** \

|

| 14 |

+

[Chris Rockwell<sup>1,2</sup>](https://crockwell.github.io), [Joseph Tung<sup>3</sup>](https://jot-jt.github.io/), [Tsung-Yi Lin<sup>1</sup>](https://tsungyilin.info/),

|

| 15 |

+

[Ming-Yu Liu<sup>1</sup>](https://mingyuliu.net/), [David F. Fouhey<sup>3</sup>](https://cs.nyu.edu/~fouhey/), [Chen-Hsuan Lin<sup>1</sup>](https://chenhsuanlin.bitbucket.io/) \

|

| 16 |

+

<sup>1</sup>NVIDIA <sup>2</sup>University of Michigan <sup>3</sup>New York University

|

| 17 |

+

|

| 18 |

+

[](https://research.nvidia.com/labs/dir/dynpose-100k) [](https://arxiv.org/abs/XXXX.XXXXX)

|

| 19 |

+

|

| 20 |

+

|

| 21 |

+

|

| 22 |

+

## Overview

|

| 23 |

+

|

| 24 |

+

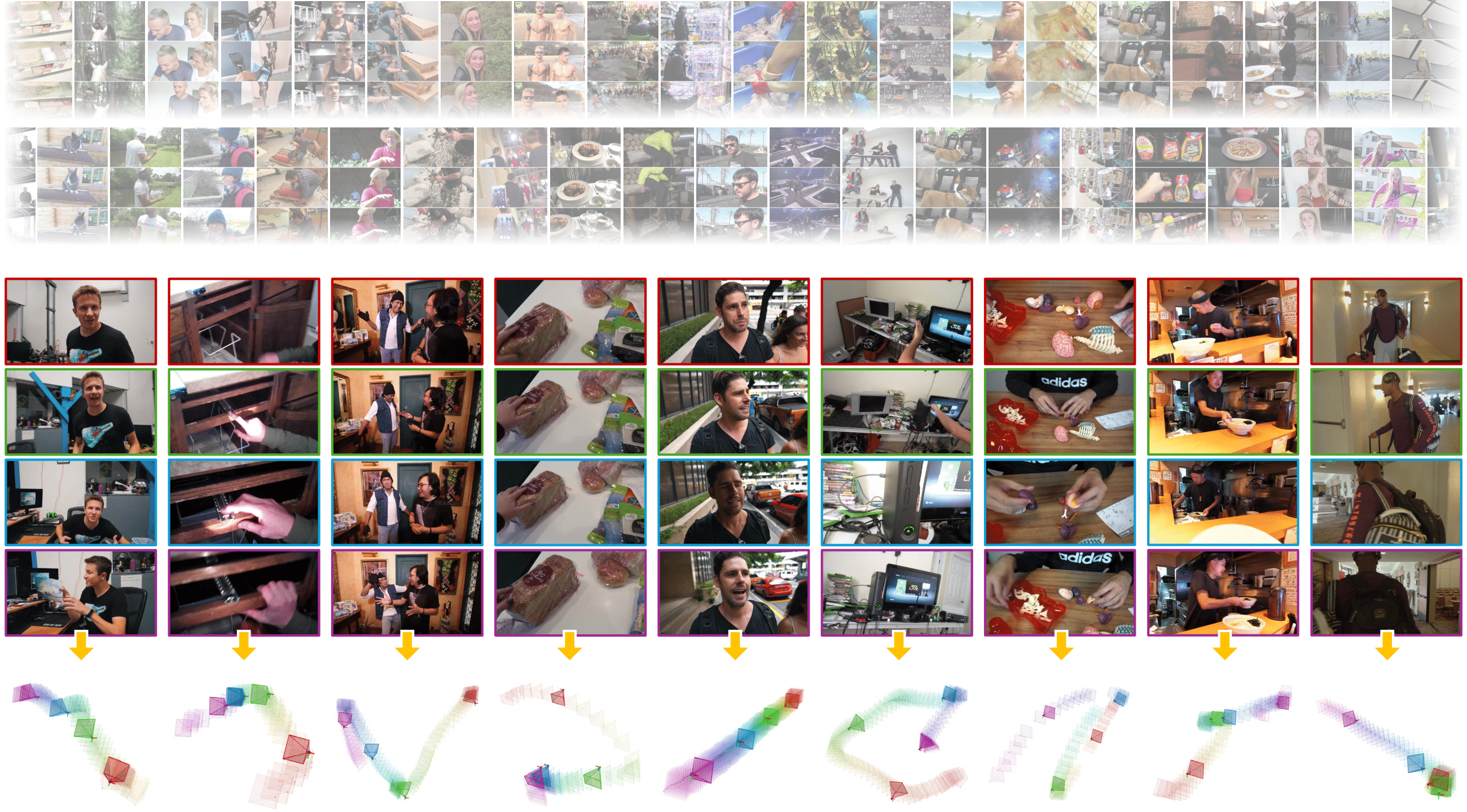

DynPose-100K is a large-scale dataset of diverse, dynamic videos with camera annotations. We curate 100K videos containing dynamic content while ensuring cameras can be accurately estimated (including intrinsics and poses), addressing two key challenges:

|

| 25 |

+

1. Identifying videos suitable for camera estimation

|

| 26 |

+

2. Improving camera estimation algorithms for dynamic videos

|

| 27 |

+

|

| 28 |

+

| Characteristic | Value |

|

| 29 |

+

| --- | --- |

|

| 30 |

+

| **Size** | 100K videos |

|

| 31 |

+

| **Resolution** | 1280×720 (720p) |

|

| 32 |

+

| **Annotation type** | Camera poses (world-to-camera), intrinsics |

|

| 33 |

+

| **Format** | MP4 (videos), PKL (camera data), JPG (frames) |

|

| 34 |

+

| **Frame rate** | 12 fps (extracted frames) |

|

| 35 |

+

| **Storage** | ~200 GB (videos) + ~400 GB (frames) + 0.7 GB (annotations) |

|

| 36 |

+

| **License** | NVIDIA License (for DynPose-100K) |

|

| 37 |

+

|

| 38 |

+

## DynPose-100K Download

|

| 39 |

+

|

| 40 |

+

DynPose-100K contains diverse Internet videos annotated with state-of-the-art camera pose estimation. Videos were selected from 3.2M candidates through advanced filtering.

|

| 41 |

+

|

| 42 |

+

### 1. Camera annotation download (0.7 GB)

|

| 43 |

+

```bash

|

| 44 |

+

git clone https://huggingface.co/datasets/nvidia/dynpose-100k

|

| 45 |

+

cd dynpose-100k

|

| 46 |

+

unzip dynpose_100k.zip

|

| 47 |

+

export DYNPOSE_100K_ROOT=$(pwd)/dynpose_100k

|

| 48 |

+

```

|

| 49 |

+

|

| 50 |

+

### 2. Video download (~200 GB for all videos at 720p)

|

| 51 |

+

```bash

|

| 52 |

+

git clone https://github.com/snap-research/Panda-70M.git

|

| 53 |

+

pip install -e Panda-70M/dataset_dataloading/video2dataset

|

| 54 |

+

```

|

| 55 |

+

- For experiments we use (1280, 720) video resolution rather than the default (640, 360). To download at this resolution (optional), modify [download size](https://github.com/snap-research/Panda-70M/blob/main/dataset_dataloading/video2dataset/video2dataset/configs/panda70m.yaml#L5) to 720

|

| 56 |

+

```bash

|

| 57 |

+

video2dataset --url_list="${DYNPOSE_100K_ROOT}/metadata.csv" --output_folder="${DYNPOSE_100K_ROOT}/video" \

|

| 58 |

+

--url_col="url" --caption_col="caption" --clip_col="timestamp" \

|

| 59 |

+

--save_additional_columns="[matching_score,desirable_filtering,shot_boundary_detection]" \

|

| 60 |

+

--config="video2dataset/video2dataset/configs/panda70m.yaml"

|

| 61 |

+

```

|

| 62 |

+

|

| 63 |

+

### 3. Video frame extraction (~400 GB for 12 fps over all videos at 720p)

|

| 64 |

+

```bash

|

| 65 |

+

python scripts/extract_frames.py --input_video_dir ${DYNPOSE_100K_ROOT}/video \

|

| 66 |

+

--output_frame_parent ${DYNPOSE_100K_ROOT}/frames-12fps \

|

| 67 |

+

--url_list ${DYNPOSE_100K_ROOT}/metadata.csv \

|

| 68 |

+

--uid_mapping ${DYNPOSE_100K_ROOT}/uid_mapping.csv

|

| 69 |

+

```

|

| 70 |

+

|

| 71 |

+

### 4. Camera pose visualization

|

| 72 |

+

Create a conda environment if you haven't done so:

|

| 73 |

+

```bash

|

| 74 |

+

conda env create -f environment.yml

|

| 75 |

+

conda activate dynpose-100k

|

| 76 |

+

```

|

| 77 |

+

Run the below under the `dynpose-100k` environment:

|

| 78 |

+

```bash

|

| 79 |

+

python scripts/visualize_pose.py --dset dynpose_100k --dset_parent ${DYNPOSE_100K_ROOT}

|

| 80 |

+

```

|

| 81 |

+

|

| 82 |

+

### Dataset structure

|

| 83 |

+

```

|

| 84 |

+

dynpose_100k

|

| 85 |

+

├── cameras

|

| 86 |

+

| ├── 00011ee6-cbc1-4ec4-be6f-292bfa698fc6.pkl {uid}

|

| 87 |

+

| ├── poses {camera poses (all frames) ([N',3,4])}

|

| 88 |

+

| ├── intrinsics {camera intrinsic matrix ([3,3])}

|

| 89 |

+

| ├── frame_idxs {corresponding frame indices ([N']), values within [0,N-1]}

|

| 90 |

+

| ├── mean_reproj_error {average reprojection error from SfM ([N'])}

|

| 91 |

+

| ├── num_points {number of reprojected points ([N'])}

|

| 92 |

+

| ├── num_frames {number of video frames N (scalar)}

|

| 93 |

+

| # where N' is number of registered frames

|

| 94 |

+

| ├── 00031466-5496-46fa-a992-77772a118b17.pkl

|

| 95 |

+

| ├── poses # camera poses (all frames) ([N',3,4])

|

| 96 |

+

| └── ...

|

| 97 |

+

| └── ...

|

| 98 |

+

├── video

|

| 99 |

+

| ├── 00011ee6-cbc1-4ec4-be6f-292bfa698fc6.mp4 {uid}

|

| 100 |

+

| ├── 00031466-5496-46fa-a992-77772a118b17.mp4

|

| 101 |

+

| └── ...

|

| 102 |

+

├── frames-12fps

|

| 103 |

+

| ├── 00011ee6-cbc1-4ec4-be6f-292bfa698fc6 {uid}

|

| 104 |

+

| ├── 00001.jpg {frame id}

|

| 105 |

+

| ├── 00002.jpg

|

| 106 |

+

| └── ...

|

| 107 |

+

| ├── 00031466-5496-46fa-a992-77772a118b17

|

| 108 |

+

| ├── 00001.jpg

|

| 109 |

+

| └── ...

|

| 110 |

+

| └── ...

|

| 111 |

+

├── metadata.csv {used to download video & extract frames}

|

| 112 |

+

| ├── uid

|

| 113 |

+

| ├── 00031466-5496-46fa-a992-77772a118b17

|

| 114 |

+

| └── ...

|

| 115 |

+

├── uid_mapping.csv {used to download video & extract frames}

|

| 116 |

+

| ├── videoID,url,timestamp,caption,matching_score,desirable_filtering,shot_boundary_detection

|

| 117 |

+

| ├── --106WvnIhc,https://www.youtube.com/watch?v=--106WvnIhc,"[['0:13:34.029', '0:13:40.035']]",['A man is swimming in a pool with an inflatable mattress.'],[0.44287109375],['desirable'],"[[['0:00:00.000', '0:00:05.989']]]"

|

| 118 |

+

| └── ...

|

| 119 |

+

├── viz_list.txt {used as index for pose visualization}

|

| 120 |

+

| ├── 004cd3b5-8af4-4613-97a0-c51363d80c31 {uid}

|

| 121 |

+

| ├── 0c3e06ae-0d0e-4c41-999a-058b4ea6a831

|

| 122 |

+

| └── ...

|

| 123 |

+

```

|

| 124 |

+

|

| 125 |

+

## LightSpeed Benchmark

|

| 126 |

+

|

| 127 |

+

LightSpeed contains ground truth camera pose and is used to validate DynPose-100K's pose annotation method.

|

| 128 |

+

|

| 129 |

+

Coming soon!

|

| 130 |

+

|

| 131 |

+

## FAQ

|

| 132 |

+

|

| 133 |

+

**Q: What coordinate system do the camera poses use?**

|

| 134 |

+

A: Camera poses are world-to-camera and follow OpenCV "RDF" convention (same as COLMAP): X axis points to the right, the Y axis to the bottom, and the Z axis to the front as seen from the image.

|

| 135 |

+

|

| 136 |

+

**Q: How do I map between frame indices and camera poses?**

|

| 137 |

+

A: The `frame_idxs` field in each camera PKL file contains the corresponding frame indices for the poses.

|

| 138 |

+

|

| 139 |

+

**Q: How can I contribute to this dataset?**

|

| 140 |

+

A: Please contact the authors for collaboration opportunities.

|

| 141 |

+

|

| 142 |

+

## Citation

|

| 143 |

+

|

| 144 |

+

If you find this dataset useful in your research, please cite our paper:

|

| 145 |

+

|

| 146 |

+

```bibtex

|

| 147 |

+

@inproceedings{rockwell2025dynpose,

|

| 148 |

+

author = {Rockwell, Chris and Tung, Joseph and Lin, Tsung-Yi and Liu, Ming-Yu and Fouhey, David F. and Lin, Chen-Hsuan},

|

| 149 |

+

title = {Dynamic Camera Poses and Where to Find Them},

|

| 150 |

+

booktitle = {CVPR},

|

| 151 |

+

year = 2025

|

| 152 |

+

}

|

| 153 |

+

```

|

| 154 |

+

|

| 155 |

+

## Acknowledgements

|

| 156 |

+

|

| 157 |

+

We thank Gabriele Leone and the NVIDIA Lightspeed Content Tech team for sharing the original 3D assets and scene data for creating the Lightspeed benchmark. We thank Yunhao Ge, Zekun Hao, Yin Cui, Xiaohui Zeng, Zhaoshuo Li, Hanzi Mao, Jiahui Huang, Justin Johnson, JJ Park and Andrew Owens for invaluable inspirations, discussions and feedback on this project.

|

dynpose_100k.zip

ADDED

|

@@ -0,0 +1,3 @@

|

|

|

|

|

|

|

|

|

|

|

|

|

| 1 |

+

version https://git-lfs.github.com/spec/v1

|

| 2 |

+

oid sha256:0cfe45ada47dcf0a42ecf8570433dcdbb44bdfad5c000060acd17a4f7c99684a

|

| 3 |

+

size 703105132

|

environment.yml

ADDED

|

@@ -0,0 +1,45 @@

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

| 1 |

+

name: dynpose-100k

|

| 2 |

+

channels:

|

| 3 |

+

- defaults

|

| 4 |

+

dependencies:

|

| 5 |

+

- _libgcc_mutex=0.1=main

|

| 6 |

+

- _openmp_mutex=5.1=1_gnu

|

| 7 |

+

- ca-certificates=2024.11.26=h06a4308_0

|

| 8 |

+

- ld_impl_linux-64=2.40=h12ee557_0

|

| 9 |

+

- libffi=3.4.4=h6a678d5_1

|

| 10 |

+

- libgcc-ng=11.2.0=h1234567_1

|

| 11 |

+

- libgomp=11.2.0=h1234567_1

|

| 12 |

+

- libstdcxx-ng=11.2.0=h1234567_1

|

| 13 |

+

- ncurses=6.4=h6a678d5_0

|

| 14 |

+

- openssl=3.0.15=h5eee18b_0

|

| 15 |

+

- pip=24.2=py39h06a4308_0

|

| 16 |

+

- python=3.9.21=he870216_1

|

| 17 |

+

- readline=8.2=h5eee18b_0

|

| 18 |

+

- setuptools=75.1.0=py39h06a4308_0

|

| 19 |

+

- sqlite=3.45.3=h5eee18b_0

|

| 20 |

+

- tk=8.6.14=h39e8969_0

|

| 21 |

+

- wheel=0.44.0=py39h06a4308_0

|

| 22 |

+

- xz=5.4.6=h5eee18b_1

|

| 23 |

+

- zlib=1.2.13=h5eee18b_1

|

| 24 |

+

- pip:

|

| 25 |

+

- contourpy==1.3.0

|

| 26 |

+

- cycler==0.12.1

|

| 27 |

+

- ffmpeg-python==0.2.0

|

| 28 |

+

- fonttools==4.55.3

|

| 29 |

+

- future==1.0.0

|

| 30 |

+

- importlib-resources==6.4.5

|

| 31 |

+

- kiwisolver==1.4.7

|

| 32 |

+

- matplotlib==3.9.4

|

| 33 |

+

- numpy==2.0.2

|

| 34 |

+

- packaging==24.2

|

| 35 |

+

- pandas==2.2.3

|

| 36 |

+

- pillow==11.0.0

|

| 37 |

+

- plotly==5.24.1

|

| 38 |

+

- pyparsing==3.2.0

|

| 39 |

+

- python-dateutil==2.9.0.post0

|

| 40 |

+

- pytz==2024.2

|

| 41 |

+

- six==1.17.0

|

| 42 |

+

- tenacity==9.0.0

|

| 43 |

+

- tqdm==4.67.1

|

| 44 |

+

- tzdata==2024.2

|

| 45 |

+

- zipp==3.21.0

|

scripts/extract_frames.py

ADDED

|

@@ -0,0 +1,94 @@

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

| 1 |

+

# -----------------------------------------------------------------------------

|

| 2 |

+

# Copyright (c) 2025, NVIDIA CORPORATION. All rights reserved.

|

| 3 |

+

#

|

| 4 |

+

# NVIDIA CORPORATION and its licensors retain all intellectual property

|

| 5 |

+

# and proprietary rights in and to this software, related documentation

|

| 6 |

+

# and any modifications thereto. Any use, reproduction, disclosure or

|

| 7 |

+

# distribution of this software and related documentation without an express

|

| 8 |

+

# license agreement from NVIDIA CORPORATION is strictly prohibited.

|

| 9 |

+

# -----------------------------------------------------------------------------

|

| 10 |

+

|

| 11 |

+

import argparse

|

| 12 |

+

import ast

|

| 13 |

+

import csv

|

| 14 |

+

import json

|

| 15 |

+

import os

|

| 16 |

+

import shutil

|

| 17 |

+

import subprocess

|

| 18 |

+

from tqdm import tqdm

|

| 19 |

+

|

| 20 |

+

|

| 21 |

+

def get_vid_mapping(input_video_dir):

|

| 22 |

+

"""

|

| 23 |

+

Create mapping from video ID to local file path.

|

| 24 |

+

|

| 25 |

+

Args:

|

| 26 |

+

input_video_dir: Directory containing downloaded videos organized in folders

|

| 27 |

+

|

| 28 |

+

Returns:

|

| 29 |

+

vid_mapping: Dictionary mapping video IDs to local file paths

|

| 30 |

+

"""

|

| 31 |

+

print("mapping video id to local path")

|

| 32 |

+

vid_mapping = {}

|

| 33 |

+

folders = [x for x in os.listdir(input_video_dir) if os.path.isdir(f"{input_video_dir}/{x}")]

|

| 34 |

+

for folder in sorted(folders):

|

| 35 |

+

vids = [x for x in os.listdir(f"{input_video_dir}/{folder}") if x[-4:] == ".mp4"]

|

| 36 |

+

for vid in tqdm(sorted(vids)):

|

| 37 |

+

vid_path = f"{input_video_dir}/{folder}/{vid}"

|

| 38 |

+

vid_json = f"{input_video_dir}/{folder}/{vid[:-4]}.json"

|

| 39 |

+

# Extract video ID from URL in JSON metadata

|

| 40 |

+

vid_name = json.load(open(vid_json))["url"].split("?v=")[-1]

|

| 41 |

+

vid_mapping[vid_name] = vid_path

|

| 42 |

+

return vid_mapping

|

| 43 |

+

|

| 44 |

+

|

| 45 |

+

def extract(url_list, input_video_dir, output_frame_parent, uid_mapping):

|

| 46 |

+

"""

|

| 47 |

+

Extract frames from videos at 12 fps.

|

| 48 |

+

|

| 49 |

+

Args:

|

| 50 |

+

url_list: CSV file containing video URLs and timestamps

|

| 51 |

+

input_video_dir: Directory containing downloaded videos

|

| 52 |

+

output_frame_parent: Output directory for extracted frames

|

| 53 |

+

uid_mapping: CSV file mapping timestamps to unique IDs

|

| 54 |

+

"""

|

| 55 |

+

vid_mapping = get_vid_mapping(input_video_dir)

|

| 56 |

+

|

| 57 |

+

with open(uid_mapping, "r") as file2:

|

| 58 |

+

with open(url_list, "r") as file:

|

| 59 |

+

csv_reader = csv.reader(file)

|

| 60 |

+

csv_reader2 = csv.reader(file2)

|

| 61 |

+

for i, (row, row2) in enumerate(tqdm(zip(csv_reader, csv_reader2))):

|

| 62 |

+

# Skip header row and empty rows

|

| 63 |

+

if i == 0 or len(row) == 0:

|

| 64 |

+

continue

|

| 65 |

+

|

| 66 |

+

try:

|

| 67 |

+

full_vid_path = vid_mapping[row[0]]

|

| 68 |

+

except KeyError:

|

| 69 |

+

if i % 10 == 0:

|

| 70 |

+

print("video not found, skipping", row[0])

|

| 71 |

+

continue

|

| 72 |

+

|

| 73 |

+

print("extracting", row[0])

|

| 74 |

+

for j, timestamps in enumerate(ast.literal_eval(row[2])):

|

| 75 |

+

uid = row2[j]

|

| 76 |

+

# Copy video with unique ID name

|

| 77 |

+

vid_parent = os.path.dirname(os.path.dirname(full_vid_path))

|

| 78 |

+

vid_uid_path = shutil.copyfile(full_vid_path, f"{vid_parent}/{uid}.mp4")

|

| 79 |

+

os.makedirs(f"{output_frame_parent}/{uid}", exist_ok=True)

|

| 80 |

+

# Extract frames using ffmpeg

|

| 81 |

+

cmd = f"nice -n 19 ffmpeg -i {vid_uid_path} -q:v 2 -vf fps=12 {output_frame_parent}/{uid}/%05d.jpg"

|

| 82 |

+

|

| 83 |

+

subprocess.run(cmd, shell=True, capture_output=True)

|

| 84 |

+

|

| 85 |

+

|

| 86 |

+

if __name__ == "__main__":

|

| 87 |

+

parser = argparse.ArgumentParser()

|

| 88 |

+

parser.add_argument("--url_list", type=str, help="path for url list")

|

| 89 |

+

parser.add_argument("--input_video_dir", type=str, help="video directory")

|

| 90 |

+

parser.add_argument("--output_frame_parent", type=str, help="parent for output frames")

|

| 91 |

+

parser.add_argument("--uid_mapping", type=str, help="path for uid mapping")

|

| 92 |

+

args = parser.parse_args()

|

| 93 |

+

print("args", args)

|

| 94 |

+

extract(args.url_list, args.input_video_dir, args.output_frame_parent, args.uid_mapping)

|

scripts/visualize_pose.py

ADDED

|

@@ -0,0 +1,334 @@

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

| 1 |

+

# -----------------------------------------------------------------------------

|

| 2 |

+

# Copyright (c) 2025, NVIDIA CORPORATION. All rights reserved.

|

| 3 |

+

#

|

| 4 |

+

# NVIDIA CORPORATION and its licensors retain all intellectual property

|

| 5 |

+

# and proprietary rights in and to this software, related documentation

|

| 6 |

+

# and any modifications thereto. Any use, reproduction, disclosure or

|

| 7 |

+

# distribution of this software and related documentation without an express

|

| 8 |

+

# license agreement from NVIDIA CORPORATION is strictly prohibited.

|

| 9 |

+

# -----------------------------------------------------------------------------

|

| 10 |

+

|

| 11 |

+

import argparse

|

| 12 |

+

import matplotlib

|

| 13 |

+

import matplotlib.pyplot as plt

|

| 14 |

+

import numpy as np

|

| 15 |

+

import os

|

| 16 |

+

import pickle as pkl

|

| 17 |

+

import plotly.graph_objs as go

|

| 18 |

+

import plotly.io as pio

|

| 19 |

+

from tqdm import tqdm

|

| 20 |

+

|

| 21 |

+

matplotlib.use("agg")

|

| 22 |

+

|

| 23 |

+

|

| 24 |

+

class Pose():

|

| 25 |

+

"""

|

| 26 |

+

A class of operations on camera poses (numpy arrays with shape [...,3,4]).

|

| 27 |

+

Each [3,4] camera pose takes the form of [R|t].

|

| 28 |

+

"""

|

| 29 |

+

|

| 30 |

+

def __call__(self, R=None, t=None):

|

| 31 |

+

"""

|

| 32 |

+

Construct a camera pose from the given rotation matrix R and/or translation vector t.

|

| 33 |

+

|

| 34 |

+

Args:

|

| 35 |

+

R: Rotation matrix [...,3,3] or None

|

| 36 |

+

t: Translation vector [...,3] or None

|

| 37 |

+

|

| 38 |

+

Returns:

|

| 39 |

+

pose: Camera pose matrix [...,3,4]

|

| 40 |

+

"""

|

| 41 |

+

assert R is not None or t is not None

|

| 42 |

+

if R is None:

|

| 43 |

+

if not isinstance(t, np.ndarray):

|

| 44 |

+

t = np.array(t)

|

| 45 |

+

R = np.eye(3, device=t.device).repeat(*t.shape[:-1], 1, 1)

|

| 46 |

+

elif t is None:

|

| 47 |

+

if not isinstance(R, np.ndarray):

|

| 48 |

+

R = np.array(R)

|

| 49 |

+

t = np.zeros(R.shape[:-1], device=R.device)

|

| 50 |

+

else:

|

| 51 |

+

if not isinstance(R, np.ndarray):

|

| 52 |

+

R = np.array(R)

|

| 53 |

+

if not isinstance(t, np.ndarray):

|

| 54 |

+

t = np.tensor(t)

|

| 55 |

+

assert R.shape[:-1] == t.shape and R.shape[-2:] == (3, 3)

|

| 56 |

+

R = R.astype(np.float32)

|

| 57 |

+

t = t.astype(np.float32)

|

| 58 |

+

pose = np.concatenate([R, t[..., None]], axis=-1) # [...,3,4]

|

| 59 |

+

assert pose.shape[-2:] == (3, 4)

|

| 60 |

+

return pose

|

| 61 |

+

|

| 62 |

+

def invert(self, pose, use_inverse=False):

|

| 63 |

+

"""

|

| 64 |

+

Invert a camera pose.

|

| 65 |

+

|

| 66 |

+

Args:

|

| 67 |

+

pose: Camera pose matrix [...,3,4]

|

| 68 |

+

use_inverse: Whether to use matrix inverse instead of transpose

|

| 69 |

+

|

| 70 |

+

Returns:

|

| 71 |

+

pose_inv: Inverted camera pose matrix [...,3,4]

|

| 72 |

+

"""

|

| 73 |

+

R, t = pose[..., :3], pose[..., 3:]

|

| 74 |

+

R_inv = R.inverse() if use_inverse else R.transpose(0, 2, 1)

|

| 75 |

+

t_inv = (-R_inv @ t)[..., 0]

|

| 76 |

+

pose_inv = self(R=R_inv, t=t_inv)

|

| 77 |

+

return pose_inv

|

| 78 |

+

|

| 79 |

+

def compose(self, pose_list):

|

| 80 |

+

"""

|

| 81 |

+

Compose a sequence of poses together.

|

| 82 |

+

pose_new(x) = poseN o ... o pose2 o pose1(x)

|

| 83 |

+

|

| 84 |

+

Args:

|

| 85 |

+

pose_list: List of camera poses to compose

|

| 86 |

+

|

| 87 |

+

Returns:

|

| 88 |

+

pose_new: Composed camera pose

|

| 89 |

+

"""

|

| 90 |

+

pose_new = pose_list[0]

|

| 91 |

+

for pose in pose_list[1:]:

|

| 92 |

+

pose_new = self.compose_pair(pose_new, pose)

|

| 93 |

+

return pose_new

|

| 94 |

+

|

| 95 |

+

def compose_pair(self, pose_a, pose_b):

|

| 96 |

+

"""

|

| 97 |

+

Compose two poses together.

|

| 98 |

+

pose_new(x) = pose_b o pose_a(x)

|

| 99 |

+

|

| 100 |

+

Args:

|

| 101 |

+

pose_a: First camera pose

|

| 102 |

+

pose_b: Second camera pose

|

| 103 |

+

|

| 104 |

+

Returns:

|

| 105 |

+

pose_new: Composed camera pose

|

| 106 |

+

"""

|

| 107 |

+

R_a, t_a = pose_a[..., :3], pose_a[..., 3:]

|

| 108 |

+

R_b, t_b = pose_b[..., :3], pose_b[..., 3:]

|

| 109 |

+

R_new = R_b @ R_a

|

| 110 |

+

t_new = (R_b @ t_a + t_b)[..., 0]

|

| 111 |

+

pose_new = self(R=R_new, t=t_new)

|

| 112 |

+

return pose_new

|

| 113 |

+

|

| 114 |

+

def scale_center(self, pose, scale):

|

| 115 |

+

"""

|

| 116 |

+

Scale the camera center from the origin.

|

| 117 |

+

0 = R@c+t --> c = -R^T@t (camera center in world coordinates)

|

| 118 |

+

0 = R@(sc)+t' --> t' = -R@(sc) = -R@(-R^T@st) = st

|

| 119 |

+

|

| 120 |

+

Args:

|

| 121 |

+

pose: Camera pose to scale

|

| 122 |

+

scale: Scale factor

|

| 123 |

+

|

| 124 |

+

Returns:

|

| 125 |

+

pose_new: Scaled camera pose

|

| 126 |

+

"""

|

| 127 |

+

R, t = pose[..., :3], pose[..., 3:]

|

| 128 |

+

pose_new = np.concatenate([R, t * scale], axis=-1)

|

| 129 |

+

return pose_new

|

| 130 |

+

|

| 131 |

+

|

| 132 |

+

def to_hom(X):

|

| 133 |

+

"""Get homogeneous coordinates of the input by appending ones."""

|

| 134 |

+

X_hom = np.concatenate([X, np.ones_like(X[..., :1])], axis=-1)

|

| 135 |

+

return X_hom

|

| 136 |

+

|

| 137 |

+

|

| 138 |

+

def cam2world(X, pose):

|

| 139 |

+

"""Transform points from camera to world coordinates."""

|

| 140 |

+

X_hom = to_hom(X)

|

| 141 |

+

pose_inv = Pose().invert(pose)

|

| 142 |

+

return X_hom @ pose_inv.transpose(0, 2, 1)

|

| 143 |

+

|

| 144 |

+

|

| 145 |

+

def get_camera_mesh(pose, depth=1):

|

| 146 |

+

"""

|

| 147 |

+

Create a camera mesh visualization.

|

| 148 |

+

|

| 149 |

+

Args:

|

| 150 |

+

pose: Camera pose matrix

|

| 151 |

+

depth: Size of the camera frustum

|

| 152 |

+

|

| 153 |

+

Returns:

|

| 154 |

+

vertices: Camera mesh vertices

|

| 155 |

+

faces: Camera mesh faces

|

| 156 |

+

wireframe: Camera wireframe vertices

|

| 157 |

+

"""

|

| 158 |

+

vertices = np.array([[-0.5, -0.5, 1],

|

| 159 |

+

[0.5, -0.5, 1],

|

| 160 |

+

[0.5, 0.5, 1],

|

| 161 |

+

[-0.5, 0.5, 1],

|

| 162 |

+

[0, 0, 0]]) * depth # [6,3]

|

| 163 |

+

faces = np.array([[0, 1, 2],

|

| 164 |

+

[0, 2, 3],

|

| 165 |

+

[0, 1, 4],

|

| 166 |

+

[1, 2, 4],

|

| 167 |

+

[2, 3, 4],

|

| 168 |

+

[3, 0, 4]]) # [6,3]

|

| 169 |

+

vertices = cam2world(vertices[None], pose) # [N,6,3]

|

| 170 |

+

wireframe = vertices[:, [0, 1, 2, 3, 0, 4, 1, 2, 4, 3]] # [N,10,3]

|

| 171 |

+

return vertices, faces, wireframe

|

| 172 |

+

|

| 173 |

+

|

| 174 |

+

def merge_xyz_indicators_plotly(xyz):

|

| 175 |

+

"""Merge xyz coordinate indicators for plotly visualization."""

|

| 176 |

+

xyz = xyz[:, [[-1, 0], [-1, 1], [-1, 2]]] # [N,3,2,3]

|

| 177 |

+

xyz_0, xyz_1 = unbind_np(xyz, axis=2) # [N,3,3]

|

| 178 |

+

xyz_dummy = xyz_0 * np.nan

|

| 179 |

+

xyz_merged = np.stack([xyz_0, xyz_1, xyz_dummy], axis=2) # [N,3,3,3]

|

| 180 |

+

xyz_merged = xyz_merged.reshape(-1, 3)

|

| 181 |

+

return xyz_merged

|

| 182 |

+

|

| 183 |

+

|

| 184 |

+

def get_xyz_indicators(pose, length=0.1):

|

| 185 |

+

"""Get xyz coordinate axis indicators for a camera pose."""

|

| 186 |

+

xyz = np.eye(4, 3)[None] * length

|

| 187 |

+

xyz = cam2world(xyz, pose)

|

| 188 |

+

return xyz

|

| 189 |

+

|

| 190 |

+

|

| 191 |

+

def merge_wireframes_plotly(wireframe):

|

| 192 |

+

"""Merge camera wireframes for plotly visualization."""

|

| 193 |

+

wf_dummy = wireframe[:, :1] * np.nan

|

| 194 |

+

wireframe_merged = np.concatenate([wireframe, wf_dummy], axis=1).reshape(-1, 3)

|

| 195 |

+

return wireframe_merged

|

| 196 |

+

|

| 197 |

+

|

| 198 |

+

def merge_meshes(vertices, faces):

|

| 199 |

+

"""Merge multiple camera meshes into a single mesh."""

|

| 200 |

+

mesh_N, vertex_N = vertices.shape[:2]

|

| 201 |

+

faces_merged = np.concatenate([faces + i * vertex_N for i in range(mesh_N)], axis=0)

|

| 202 |

+

vertices_merged = vertices.reshape(-1, vertices.shape[-1])

|

| 203 |

+

return vertices_merged, faces_merged

|

| 204 |

+

|

| 205 |

+

|

| 206 |

+

def unbind_np(array, axis=0):

|

| 207 |

+

"""Split numpy array along specified axis into list."""

|

| 208 |

+

if axis == 0:

|

| 209 |

+

return [array[i, :] for i in range(array.shape[0])]

|

| 210 |

+

elif axis == 1 or (len(array.shape) == 2 and axis == -1):

|

| 211 |

+

return [array[:, j] for j in range(array.shape[1])]

|

| 212 |

+

elif axis == 2 or (len(array.shape) == 3 and axis == -1):

|

| 213 |

+

return [array[:, :, j] for j in range(array.shape[2])]

|

| 214 |

+

else:

|

| 215 |

+

raise ValueError("Invalid axis. Use 0 for rows or 1 for columns.")

|

| 216 |

+

|

| 217 |

+

|

| 218 |

+

def plotly_visualize_pose(poses, vis_depth=0.5, xyz_length=0.5, center_size=2, xyz_width=5, mesh_opacity=0.05):

|

| 219 |

+

"""

|

| 220 |

+

Create plotly visualization traces for camera poses.

|

| 221 |

+

|

| 222 |

+

Args:

|

| 223 |

+

poses: Camera poses to visualize [N,3,4]

|

| 224 |

+

vis_depth: Size of camera frustum visualization

|

| 225 |

+

xyz_length: Length of coordinate axis indicators

|

| 226 |

+

center_size: Size of camera center markers

|

| 227 |

+

xyz_width: Width of coordinate axis lines

|

| 228 |

+

mesh_opacity: Opacity of camera frustum mesh

|

| 229 |

+

|

| 230 |

+

Returns:

|

| 231 |

+

plotly_traces: List of plotly visualization traces

|

| 232 |

+

"""

|

| 233 |

+

N = len(poses)

|

| 234 |

+

centers_cam = np.zeros([N, 1, 3])

|

| 235 |

+

centers_world = cam2world(centers_cam, poses)

|

| 236 |

+

centers_world = centers_world[:, 0]

|

| 237 |

+

# Get the camera wireframes.

|

| 238 |

+

vertices, faces, wireframe = get_camera_mesh(poses, depth=vis_depth)

|

| 239 |

+

xyz = get_xyz_indicators(poses, length=xyz_length)

|

| 240 |

+

vertices_merged, faces_merged = merge_meshes(vertices, faces)

|

| 241 |

+

wireframe_merged = merge_wireframes_plotly(wireframe)

|

| 242 |

+

xyz_merged = merge_xyz_indicators_plotly(xyz)

|

| 243 |

+

# Break up (x,y,z) coordinates.

|

| 244 |

+

wireframe_x, wireframe_y, wireframe_z = unbind_np(wireframe_merged, axis=-1)

|

| 245 |

+

xyz_x, xyz_y, xyz_z = unbind_np(xyz_merged, axis=-1)

|

| 246 |

+

centers_x, centers_y, centers_z = unbind_np(centers_world, axis=-1)

|

| 247 |

+

vertices_x, vertices_y, vertices_z = unbind_np(vertices_merged, axis=-1)

|

| 248 |

+

# Set the color map for the camera trajectory and the xyz indicators.

|

| 249 |

+

color_map = plt.get_cmap("gist_rainbow") # red -> yellow -> green -> blue -> purple

|

| 250 |

+

center_color = []

|

| 251 |

+

faces_merged_color = []

|

| 252 |

+

wireframe_color = []

|

| 253 |

+

xyz_color = []

|

| 254 |

+

x_color, y_color, z_color = *np.eye(3).T,

|

| 255 |

+

for i in range(N):

|

| 256 |

+

# Set the camera pose colors (with a smooth gradient color map).

|

| 257 |

+

r, g, b, _ = color_map(i / (N - 1))

|

| 258 |

+

rgb = np.array([r, g, b]) * 0.8

|

| 259 |

+

wireframe_color += [rgb] * 11

|

| 260 |

+

center_color += [rgb]

|

| 261 |

+

faces_merged_color += [rgb] * 6

|

| 262 |

+

xyz_color += [x_color] * 3 + [y_color] * 3 + [z_color] * 3

|

| 263 |

+

# Plot in plotly.

|

| 264 |

+

plotly_traces = [

|

| 265 |

+

go.Scatter3d(x=wireframe_x, y=wireframe_y, z=wireframe_z, mode="lines",

|

| 266 |

+

line=dict(color=wireframe_color, width=1)),

|

| 267 |

+

go.Scatter3d(x=xyz_x, y=xyz_y, z=xyz_z, mode="lines", line=dict(color=xyz_color, width=xyz_width)),

|

| 268 |

+

go.Scatter3d(x=centers_x, y=centers_y, z=centers_z, mode="markers",

|

| 269 |

+

marker=dict(color=center_color, size=center_size, opacity=1)),

|

| 270 |

+

go.Mesh3d(x=vertices_x, y=vertices_y, z=vertices_z,

|

| 271 |

+

i=[f[0] for f in faces_merged], j=[f[1] for f in faces_merged], k=[f[2] for f in faces_merged],

|

| 272 |

+

facecolor=faces_merged_color, opacity=mesh_opacity),

|

| 273 |

+

]

|

| 274 |

+

return plotly_traces

|

| 275 |

+

|

| 276 |

+

|

| 277 |

+

def write_html(poses, file, dset, vis_depth=1, xyz_length=0.2, center_size=0.01, xyz_width=2):

|

| 278 |

+

"""Write camera pose visualization to HTML file."""

|

| 279 |

+

if dset == "lightspeed":

|

| 280 |

+

xyz_length = xyz_length / 3

|

| 281 |

+

xyz_width = xyz_width

|

| 282 |

+

vis_depth = vis_depth / 3

|

| 283 |

+

center_size *= 3

|

| 284 |

+

|

| 285 |

+

traces_poses = plotly_visualize_pose(poses, vis_depth=vis_depth, xyz_length=xyz_length,

|

| 286 |

+

center_size=center_size, xyz_width=xyz_width, mesh_opacity=0.05)

|

| 287 |

+

traces_all2 = traces_poses

|

| 288 |

+

layout2 = go.Layout(scene=dict(xaxis=dict(visible=False), yaxis=dict(visible=False), zaxis=dict(visible=False),

|

| 289 |

+

dragmode="orbit", aspectratio=dict(x=1, y=1, z=1), aspectmode="data"),

|

| 290 |

+

height=400, width=600, showlegend=False)

|

| 291 |

+

|

| 292 |

+

fig2 = go.Figure(data=traces_all2, layout=layout2)

|

| 293 |

+

html_str2 = pio.to_html(fig2, full_html=False)

|

| 294 |

+

|

| 295 |

+

file.write(html_str2)

|

| 296 |

+

|

| 297 |

+

|

| 298 |

+

def viz_poses(i, seq, file, dset, dset_parent):

|

| 299 |

+

"""Visualize camera poses for a sequence and write to HTML file."""

|

| 300 |

+

if "/" in seq:

|

| 301 |

+

seq = "_".join(seq.split("/"))

|

| 302 |

+

|

| 303 |

+

if "mp4" in seq:

|

| 304 |

+

seq = seq[:-4]

|

| 305 |

+

|

| 306 |

+

file.write(f"<span style='font-size: 18pt;'>{i} {seq}</span><br>")

|

| 307 |

+

|

| 308 |

+

if dset == "dynpose_100k":

|

| 309 |

+

with open(f"{dset_parent}/cameras/{seq}.pkl", "rb") as f:

|

| 310 |

+

poses = pkl.load(f)["poses"]

|

| 311 |

+

else:

|

| 312 |

+

with open(f"{dset_parent}/poses.pkl", "rb") as f:

|

| 313 |

+

poses = pkl.load(f)[seq]

|

| 314 |

+

|

| 315 |

+

write_html(poses, file, dset)

|

| 316 |

+

|

| 317 |

+

|

| 318 |

+

if __name__ == "__main__":

|

| 319 |

+

parser = argparse.ArgumentParser()

|

| 320 |

+

parser.add_argument("--dset", type=str, default="dynpose_100k", choices=["dynpose_100k", "lightspeed"])

|

| 321 |

+

parser.add_argument("--dset_parent", type=str, default=".")

|

| 322 |

+

args = parser.parse_args()

|

| 323 |

+

|

| 324 |

+

outdir = f"pose_viz/{args.dset}"

|

| 325 |

+

os.makedirs(outdir, exist_ok=True)

|

| 326 |

+

split_size = 6 # to avoid poses disappearing

|

| 327 |

+

|

| 328 |

+

viz_list = f"{args.dset_parent}/viz_list.txt"

|

| 329 |

+

seqs = open(viz_list).read().split()

|

| 330 |

+

|

| 331 |

+

for j in tqdm(range(int(np.ceil(len(seqs)/split_size)))):

|

| 332 |

+

with open(f"{outdir}/index_{str(j)}.html", "w") as file:

|

| 333 |

+

for i, seq in enumerate(tqdm(seqs[j*split_size:j*split_size+split_size])):

|

| 334 |

+

viz_poses(i, seq, file, args.dset, args.dset_parent)

|

teaser.png

ADDED

|

Git LFS Details

|