MetaMind: Modeling Human Social Thoughts with Metacognitive Multi-Agent Systems

Abstract

MetaMind, a multi-agent framework inspired by metacognition, enhances LLMs' ability to perform Theory of Mind tasks by decomposing social understanding into hypothesis generation, refinement, and response generation, achieving human-like performance.

Human social interactions depend on the ability to infer others' unspoken intentions, emotions, and beliefs, a cognitive skill grounded in the psychological concept of Theory of Mind (ToM). While large language models (LLMs) excel in semantic understanding tasks, they struggle with the ambiguity and contextual nuance inherent in human communication. To bridge this gap, we introduce MetaMind, a multi-agent framework inspired by psychological theories of metacognition, designed to emulate human-like social reasoning. MetaMind decomposes social understanding into three collaborative stages: (1) a Theory-of-Mind Agent generates hypotheses about user mental states (e.g., intent, emotion), (2) a Domain Agent refines these hypotheses using cultural norms and ethical constraints, and (3) a Response Agent generates contextually appropriate responses while validating alignment with inferred intent. Our framework achieves state-of-the-art performance across three challenging benchmarks, with 35.7% improvement in real-world social scenarios and 6.2% gain in ToM reasoning. Notably, it enables LLMs to match human-level performance on key ToM tasks for the first time. Ablation studies confirm the necessity of all components and showcase the framework's ability to balance contextual plausibility, social appropriateness, and user adaptation. This work advances AI systems toward human-like social intelligence, with applications in empathetic dialogue and culturally sensitive interactions. Code is available at https://github.com/XMZhangAI/MetaMind.

Community

“What is meant often goes far beyond what is said, and that is what makes conversation possible.” - H. P. Grice

Introduction: The leap from "semantic understanding" to "mental empathy"

In everyday human communication, what people mean often goes beyond what their words literally say. For example, when someone says "It's so cold here", they may be doing far more than describing the temperature: it may be a tactful request (hoping the other person will close the window), an expression of discomfort, or a bid for care. Similarly, when someone says "I've been suffering from insomnia recently", the underlying cause may be work pressure, emotional distress, or physical illness. Humans can grasp such implied meaning because they infer the intentions, emotions, and beliefs of others from fragmentary information such as social context, prior knowledge, and expected feedback. This capacity is known as Theory of Mind (ToM), and it is the core of social intelligence. Developmental psychology research shows that children begin to reason about the mental states of others around the age of 4. This "mind-reading" form of social intelligence lets humans understand language beyond its literal meaning, hearing what is implied and sensing what others are thinking.

Giving machines comparable social intelligence has long been a major challenge in artificial intelligence. Although large language models (LLMs) perform well in semantic understanding and question-answering conversations, they often handle the ambiguity and indirect hints of human communication poorly, and their behavior in real social scenarios is frequently criticized as mechanical. For example, current conversational AI often cannot reliably infer a user's underlying emotions or intentions, so it gives uniform replies to euphemistic expressions, implicit emotions, or culturally sensitive topics, which makes for an uncomfortable interaction. People soon discovered that pure language fluency ≠ understanding of "human relationships". Some attempts to inject social behavior into models, such as presetting roles to simulate social conversations or fine-tuning on preference data, often align the models only on the surface (for example, following dialogue formats or avoiding taboos) without giving them human-like hierarchical psychological reasoning. In short, traditional methods mostly treat social reasoning as a one-shot, single-step prediction problem instead of the multi-stage process of interpretation, reflection, and adaptation that humans use. How to give AI this human-like, multi-layer social reasoning ability has become a key threshold on the way to higher-level artificial intelligence.

To address this challenge, the latest research from the University of Wisconsin-Madison, "MetaMind: Modeling Human Social Thoughts with Metacognitive Multi-Agent Systems", is the first to integrate the theory of metacognition from developmental psychology into the LLM architecture. Through a cognitive closed loop that mimics human hypothesis generation, reflective correction, and behavioral verification, the LLM reached the average human level in eight standardized theory-of-mind tests. This result sets new records on multiple benchmarks and significantly improves the model's grasp of implicit intentions, emotions, and social norms. It also points to a systematic methodology for building socially intelligent AI, allowing AI to "read people's minds".

- Paper Link: https://arxiv.org/abs/2505.18943

- Github: https://github.com/XMZhangAI/MetaMind

MetaMind Framework: Three-Phase Metacognitive Multi-Agent

MetaMind attempts to enable LLMs to simulate human social reasoning through multi-agent collaboration. The framework is inspired by the theory of metacognition in psychology. The US psychologist Flavell proposed the concept of metacognition in 1979, pointing out that humans monitor and regulate themselves during cognitive activities: we reflect on our own thoughts, correct our understanding according to social rules, and adjust our behavior in complex situations. MetaMind draws on this idea of "thinking about thinking" and breaks social understanding down into three stages, each handled by a specialized agent responsible for a different level of reasoning.

Stage 1: The Theory-of-Mind Agent (ToM Agent) is responsible for generating mental state hypotheses. In this initial stage, the ToM Agent tries to infer the unstated meaning behind the user's words and generates multiple hypotheses about the user's possible mental state. These hypotheses cover different types of beliefs, desires, intentions, emotions, and so on. For example, when a user says "I've been exhausted from work lately," the ToM Agent does not directly produce the usual response, "prioritize your rest". It first speculates about the user's true mental state: it may hypothesize that the user feels "tired and frustrated," or that the user is seeking sympathy and understanding. By generating a diverse set of hypotheses, the LLM considers the user's possible needs more comprehensively before answering.

Stage 2: The Domain Agent is responsible for applying social norm constraints to examine and filter the mental state hypotheses generated in the previous stage. This agent acts as a reviewer of social common sense and norms: it considers the cultural background, ethical standards, and situational appropriateness of the current scenario, and corrects or rejects unreasonable or inappropriate hypotheses. Just as humans adjust their interpretation of others' words based on social experience, the agent ensures that the model's reasoning conforms to social norms. For example, if the ToM stage hypothesizes "romantic intent" but the conversation takes place in the workplace, the Domain Agent revises this interpretation to ordinary "colleague appreciation" based on the norms of a professional setting, so that the interpretation does not overstep. By introducing social constraints, the model can suppress ill-timed speculation and make its reasoning more reasonable and responsible in context. Notably, this stage selects the best hypothesis by balancing its probability in context against its contingency, so that the chosen hypothesis is both contextually plausible and informative for the specific scenario.
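The balancing step described above can be illustrated with a small sketch. This is not the paper's formula: the linear weighting, the `weight` parameter, and the `Hypothesis` fields are all assumptions made for illustration, and the scores are hand-written toy numbers where the real system would use model estimates.

```python
from dataclasses import dataclass


@dataclass
class Hypothesis:
    text: str
    plausibility: float  # assumed estimate of P(hypothesis | context), in [0, 1]
    info_gain: float     # assumed scenario-specific information gain, in [0, 1]


def select_hypothesis(hypotheses, weight=0.6):
    """Pick the hypothesis with the highest weighted score (illustrative rule)."""
    if not hypotheses:
        raise ValueError("need at least one hypothesis")

    def score(h):
        # Hypothetical trade-off: contextual plausibility vs. information gain.
        return weight * h.plausibility + (1.0 - weight) * h.info_gain

    return max(hypotheses, key=score)


# Toy candidates for a workplace conversation (numbers are made up).
candidates = [
    Hypothesis("user is romantically interested", 0.10, 0.80),
    Hypothesis("user appreciates a colleague's help", 0.70, 0.50),
    Hypothesis("user is making small talk", 0.75, 0.10),
]
best = select_hypothesis(candidates)
print(best.text)
```

With these toy numbers, the most plausible candidate alone ("small talk") is uninformative and the most informative one ("romantic interest") is implausible in context, so the weighted rule prefers the candidate in between.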

Stage 3: The Response Agent is responsible for generating and verifying the final answer. After the first two stages, the model has worked out the user's likely needs and filtered down to the most appropriate hypothesis. In the final step, the Response Agent acts on that hypothesis and validates its own output during generation. It conditions on the best hypothesis produced by the previous two stages and takes the user's social memory as an additional input. On the one hand, this ensures that the response is relevant and that its tone matches the user's current feelings. On the other hand, the agent evaluates the quality of the response once it is complete: it reflects on whether the response is consistent with the inferred user intention and with its own social role, and whether it is appropriate in emotion and context. If there is any deviation, it can trigger another cognitive cycle, injecting experiential feedback into social memory to improve the response in the next iteration. Through this "generation + verification" closed loop, the final output aims at empathy and social awareness, not just semantic accuracy.
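The "generation + verification" loop can be sketched as follows. This is a minimal illustration, not the released implementation: `respond_with_validation`, `toy_generate`, and `toy_validate` are hypothetical names, the memory is a plain list, and the rule-based stand-ins take the place of LLM calls.

```python
def respond_with_validation(hypothesis, memory, generate, validate, max_rounds=3):
    """Generate a reply, check it, and retry with feedback stored in memory."""
    reply = ""
    for _ in range(max_rounds):
        reply = generate(hypothesis, memory)
        ok, feedback = validate(reply, hypothesis)
        if ok:
            return reply
        memory.append(feedback)  # inject feedback so the next round can improve
    return reply  # best effort once the round budget is spent


def toy_generate(hypothesis, memory):
    # Stand-in for an LLM call: changes behavior once feedback is in memory.
    if "be more empathetic" in memory:
        return "That sounds exhausting. Do you want to talk about it?"
    return "Prioritize your rest."


def toy_validate(reply, hypothesis):
    # Stand-in for the self-check: does the reply fit the inferred intent?
    if hypothesis == "seeking sympathy" and "sounds" not in reply:
        return False, "be more empathetic"
    return True, ""


memory = []
reply = respond_with_validation("seeking sympathy", memory, toy_generate, toy_validate)
print(reply)
```

In this toy run the first draft fails validation, the feedback is stored, and the second draft passes. The `max_rounds` cap is an assumption added so the loop always terminates.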

This three-step cycle lets MetaMind go through a process of hypothesis, reflection, and adjustment, as humans do when understanding and responding to others, instead of committing to an answer at the outset. This multi-agent, hierarchical reasoning design gives the model a preliminary form of human-style social cognition. It is worth mentioning that MetaMind's agents do not work in isolation but form an organic whole through shared memory and information. For example, the first stage consults the user preferences stored in social memory when generating hypotheses, and the third stage uses the hypotheses corrected by the Domain Agent when generating answers. The entire process forms a "metacognitive cycle" of continual self-feedback and improvement, much as the human brain does in social interaction.
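The data flow between the three agents can be sketched with plain functions. Everything here is a toy assumption: the function names, the dictionary-based memory, and the keyword rules are hypothetical stand-ins for the LLM-backed agents, meant only to show how hypotheses and shared memory pass from stage to stage.

```python
def tom_agent(utterance, memory):
    """Stage 1: propose candidate mental states (toy rules, not an LLM)."""
    hypotheses = ["seeking sympathy", "asking for advice"]
    if "compliment" in utterance:
        hypotheses.append("romantic intent")
    # Hypotheses that match remembered user preferences are listed first.
    return sorted(hypotheses, key=lambda h: h not in memory["preferences"])


def domain_agent(hypotheses, setting):
    """Stage 2: revise hypotheses that break the norms of the setting."""
    revised = []
    for h in hypotheses:
        if setting == "workplace" and h == "romantic intent":
            h = "colleague appreciation"
        revised.append(h)
    return revised


def response_agent(hypothesis, memory):
    """Stage 3: produce a reply conditioned on the hypothesis and memory."""
    return f"[{memory['tone']}] reply addressing: {hypothesis}"


def run_pipeline(utterance, setting, memory):
    candidates = tom_agent(utterance, memory)
    refined = domain_agent(candidates, setting)
    return response_agent(refined[0], memory)


memory = {"preferences": ["seeking sympathy"], "tone": "gentle"}
print(run_pipeline("I've been exhausted from work lately", "workplace", memory))
```

Each stage only sees the output of the previous one plus the shared memory, which is the structural point of the design described above.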

Dynamic social memory: Long-term, evolvable user portraits

A key mechanism runs through the whole MetaMind framework: Social Memory. It works like a constantly updated notebook in the AI's brain that records important information about users during interactions. Specifically, Social Memory stores users' long-term preferences, personality traits, and prominent emotional patterns, and updates them dynamically as the conversation progresses. Whenever the model needs to reason about the user's intentions or decide how to respond, it can retrieve Social Memory for additional background. For example, if over consecutive rounds of interaction a user has shown a shy, introverted personality or a preference for metaphorical communication, MetaMind can take this history into account and maintain a more consistent and coherent picture of the user.

Social memory plays a role throughout the MetaMind architecture. In the first stage, the ToM agent cross-references social memory when generating mental state hypotheses, so that its speculation fits the user's consistent behavioral patterns. Depending on the hypothesis type, a hypothesis judged to be a new user preference is written into social memory as part of an intuitive user model. In the third stage, the response agent draws on social memory when generating answers to adjust the emotional tone of the reply, so that tone and content stay consistent with the user's previous emotional pattern. Notably, when a round of validation fails, social memory is optimized again through risk feedback. With this approach, MetaMind achieves two major improvements: long-term user modeling and emotional consistency. On the one hand, the model can continuously accumulate user information to form a more comprehensive user portrait. On the other hand, in long conversations or multi-round interactions, the emotional style of the model's responses does not contradict itself but echoes the user's earlier preferences. This effectively avoids the "amnesia" and emotional incoherence common in traditional LLMs.
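One way to picture social memory is as a small record with three kinds of entries. The sketch below is an assumption about a reasonable shape, not the structure used in the released code: the class name, field names, and the "most frequent emotion" heuristic are all hypothetical.

```python
from collections import Counter
from dataclasses import dataclass, field


@dataclass
class SocialMemory:
    preferences: list = field(default_factory=list)  # long-term user preferences
    emotions: list = field(default_factory=list)     # emotions seen per turn
    feedback: list = field(default_factory=list)     # notes from failed validations

    def add_preference(self, preference):
        # Stage 1: store a hypothesis judged to be a new user preference.
        if preference not in self.preferences:
            self.preferences.append(preference)

    def record_emotion(self, emotion):
        self.emotions.append(emotion)

    def add_feedback(self, note):
        # Stage 3: keep feedback when a round of validation fails.
        self.feedback.append(note)

    def dominant_emotion(self, default="neutral"):
        # Illustrative heuristic: the most frequent emotion sets the reply tone.
        if not self.emotions:
            return default
        return Counter(self.emotions).most_common(1)[0][0]


memory = SocialMemory()
memory.add_preference("prefers metaphorical language")
memory.add_preference("prefers metaphorical language")  # duplicate is ignored
for emotion in ["tired", "anxious", "tired"]:
    memory.record_emotion(emotion)
memory.add_feedback("reply was too formal")
print(memory.dominant_emotion())
```

Keeping preferences, emotions, and feedback in separate fields mirrors the three uses described above: hypothesis cross-referencing, tone adjustment, and correction after failed validation.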

Furthermore, social memory gives the model a degree of personalized adaptation. Whereas traditional models start from scratch in every new conversation, MetaMind with social memory can "remember who you are". For example, in an educational scenario, a teaching assistant with social memory can remember each student's learning curve and past emotional feedback, and adjust its teaching strategy and the tone of its feedback accordingly. This kind of long-term personalized adaptation is crucial to the human-machine interaction experience and is a big step toward more emotionally intelligent AI.

From Folk Psychology to Metacognitive Theory

MetaMind's design is deeply rooted in cognitive psychology and closely aligned with the principles of human social cognition. First, it draws on the concept of "Folk Psychology" from developmental psychology. Folk psychology refers to the reasoning people spontaneously develop in daily life about the psychological states behind other people's behavior. Simply put, we intuitively understand the thoughts and motivations of others, and this is the foundation of theory of mind. The first-stage ToM agent essentially imitates this process: faced with a sentence, it lists possible implicit attitudes (beliefs, emotions, and so on), just as we guess in our minds whether the other person is "implying XX". This design lets AI move beyond the literal level of language and reach for the psychological context behind it.

Second, the multi-stage metacognitive cycle in MetaMind derives directly from Flavell's theory of metacognition. Metacognition emphasizes that people plan, monitor, and evaluate their cognitive activities in order to regulate themselves. In MetaMind, the collaboration of the three agents reflects a similar self-regulation mechanism: the ToM agent plans and hypothesizes (the planning stage), the domain agent reviews and adjusts the hypotheses (the monitoring and reflection stage), and the response agent evaluates and verifies the final output (the evaluation stage). In this sense, MetaMind explicitly integrates the principles of human metacognition into the LLM architecture. This division of labor is closer to how humans think when solving complex social tasks than relying on a prompt to make a single model answer "in one step".

In contrast, commonly used LLM alignment methods are too flat. For example, chain-of-thought prompting guides the model to think step by step to some extent, but it lacks a mechanism for adjusting dynamically to the context. Presetting a profile or script makes the model play a certain role, but it struggles to capture the dynamically changing social intentions of real dialogue. RLHF fine-tunes the model on large-scale human feedback to improve politeness and safety, but it generalizes poorly to ever-changing social scenarios, and collecting high-quality training data with wide coverage is challenging. These methods essentially teach the model a "static" or "superficial" alignment strategy and lack a deeper simulation of human social cognitive processes. MetaMind is a response to this situation: it no longer treats social interaction as a static problem, but lets the AI reproduce the human chain of social reasoning internally through multi-stage metacognitive reasoning. As a result, MetaMind shows stronger contextual adaptability and more appropriate behavior across complex social scenarios.

SOTA Performance: Approaches human level on multiple benchmarks

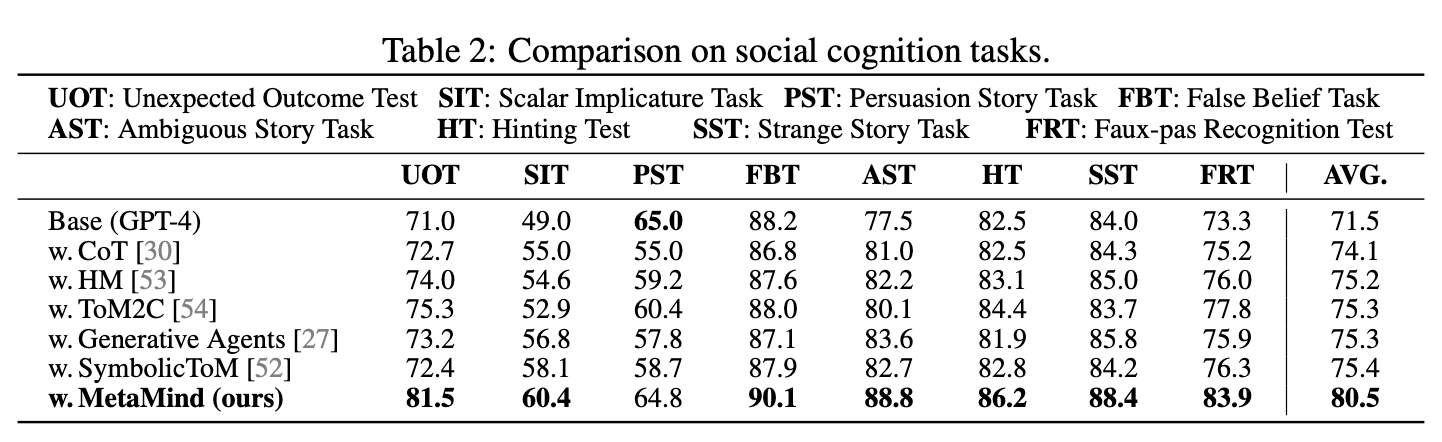

The MetaMind framework achieves strong results on a series of rigorous benchmarks, demonstrating its effectiveness in giving LLMs social reasoning ability. The authors selected three challenging evaluations in the paper: 1. ToMBench, which specifically evaluates social reasoning ability and covers a variety of ToM reasoning tasks; 2. A series of social cognition tasks (such as Faux-pas Recognition, SocialIQA, etc.), which examine the model's understanding of social situations; 3. The social simulation task sets STSS and SOTOPIA, which require the model to make behavioral decisions in interactive situations. Together, these give a more comprehensive measure of a model's social intelligence.

Experimental results show that MetaMind yields significant performance improvements across a range of underlying LLMs on these benchmarks. For example, on ToMBench, the MetaMind framework raises the average mental-state inference accuracy of GPT-4 from about 74.8% to 81.0%, surpassing all previous methods for improving ToM capabilities. It is worth noting that small models (7-13 billion parameters), large models (GPT-3.5/4, etc.), and the most advanced reasoning models (DeepSeek-R1, OpenAI o3, etc.) almost all improve significantly with MetaMind. This shows that the multi-stage inference mechanism MetaMind provides is broadly effective across models, not just for individual ones.
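To make the size of the ToMBench gain concrete, the short calculation below separates the absolute gain (in percentage points) from the relative gain over the baseline. It uses only the two GPT-4 accuracies quoted above; the variable names are illustrative.

```python
baseline = 74.8       # GPT-4 average accuracy on ToMBench, in percent
with_metamind = 81.0  # GPT-4 + MetaMind, in percent

# Absolute gain in percentage points.
absolute_gain = round(with_metamind - baseline, 1)

# Relative gain as a percentage of the baseline accuracy.
relative_gain = round(100 * (with_metamind - baseline) / baseline, 1)

print(f"absolute gain: {absolute_gain} points")
print(f"relative gain: {relative_gain}%")
```

The absolute difference is 6.2 points, which matches the 6.2% ToM gain quoted in the abstract; measured relative to the baseline, the same change is roughly 8.3%.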

MetaMind not only excels in multiple-choice ToM tests but also performs well in realistic social interactions, as shown by its improvements on more open and complex simulation tasks. In social cognition tasks (such as judging implicit motivations in conversations or identifying awkward scenes), MetaMind also achieves a much higher overall score than existing methods. In Social Tasks in Sandbox Simulation (STSS), MetaMind achieves a 34.5% performance improvement over the original model, significantly enhancing the model's ability to cope with real social scenarios. A notable milestone is that, with the MetaMind framework, some LLMs reached average human performance on key social reasoning tasks for the first time, which was unimaginable in the past. Considering the cost of deploying reasoning models at scale, we focus on the ability radar charts of eight non-reasoning models across six typical ToM ability dimensions. Their original distributions are generally smaller than the human reference and differ in shape, indicating that these models have uneven abilities across mental dimensions and are inferior to humans overall. After integrating MetaMind, the covered areas increase significantly, and GPT-4 almost equals human performance. In particular, with MetaMind, GPT-4 scores 89.3 in the "belief" dimension, surpassing the average human score of 88.6; it also scores 89.0 in the "natural language communication" dimension, exceeding the average human score of 88.5. These results show that MetaMind narrows the gap between LLMs and human social cognition, enabling LLMs to reason about others' mental states more comprehensively and evenly.

Real Dialogue Case: The "Persuasion Technique" Behind a Ten-Minute Running Suggestion

In case studies, MetaMind has shown the ability to understand users' explicit and implicit expressions and to communicate with them using appropriate strategies. This ability generalizes flexibly to persuasion, negotiation, and cooperation scenarios. In addition, when a MetaMind-enhanced model talks to an ordinary model, the quality of the interaction improves markedly: external judges, whether AI or human, tend to classify the conversation as human-machine or human-to-human and to take the MetaMind side for the human, a misjudgment that is extremely rare in interactions between ordinary models. This phenomenon highlights two directions for MetaMind's social intelligence: building a self-interaction data pipeline that produces valuable, heterogeneous, long-tail interaction stories for future model training, and pursuing a major goal of artificial intelligence, passing the Turing test.

Future Outlook: AI Applications Towards Higher Social Intelligence

MetaMind's results show the great potential of giving AI human-like social intelligence. This multi-agent metacognitive framework not only achieves strong results on well-defined benchmarks but also opens new doors for practical applications. First, in human-computer interaction, AI with ToM reasoning will better understand users' implied meanings and emotional states, and so provide more considerate and appropriate responses. Digital customer service, virtual assistants, and companion robots could all become more sensible with MetaMind-style upgrades, understanding what users think and feel instead of answering questions mechanically.

Second, in cross-cultural interaction, MetaMind's domain agent can play an important role. When AI faces users from different cultural backgrounds, it can adjust its understanding and response based on local social norms and etiquette. This means that future global AI systems can better avoid cultural offense and misunderstanding and adapt to each culture on their own. For example, in an international negotiation, an AI assistant upgraded with MetaMind can recognize that certain expressions may be impolite in the other party's culture and automatically correct its response to meet the corresponding social expectations.

In educational scenarios, AI tutors with social intelligence will shine. Such a tutor can track students' knowledge mastery and emotional changes through social memory, anticipate their possible confusion or frustration during teaching (ToM agent), guide them in a way that fits their cultural background and personality (domain agent), and finally give warm, constructive feedback (response agent). Such an intelligent teaching system would be more like a personal tutor who not only answers academic questions but also motivates students emotionally and offers humane companionship.

Finally, from a broader perspective, MetaMind represents a shift in AI design philosophy: from pursuing extreme performance on a single metric to pursuing isomorphism with human cognitive processes. It suggests that, instead of constantly scaling up model parameters, it is better to bring AI's understanding closer to that of humans: to think deeply as we do, reflect on its own cognition, and regulate its behavior according to social rules. Such AI will integrate into human society more easily and help us solve problems that require intelligence, empathy, and ethical consideration together, such as psychological counseling, medical care, and group decision-making. In short, MetaMind offers an early glimpse of "building AI that understands the human mind": future artificial intelligence may understand not only what we say but also what we leave unsaid. This is a big step toward Artificial General Intelligence and toward the vision of technology that better serves people.

Feel free to leave a comment: In which social scenarios would you like to see AI show its skills?

Simply put, if we want AI’s responses to match human expectations, please, don’t just stack parameters blindly - maybe try out human cognitive processes first🔥!

Thanks to Prompt Cat for promoting the Chinese version. It's a nice account that focuses on great questions!

https://mp.weixin.qq.com/s/BzFBGNojzBy43dE8gbf7vA

This is an automated message from the Librarian Bot. I found the following papers similar to this paper.

The following papers were recommended by the Semantic Scholar API

- Sentient Agent as a Judge: Evaluating Higher-Order Social Cognition in Large Language Models (2025)

- Do Theory of Mind Benchmarks Need Explicit Human-like Reasoning in Language Models? (2025)

- Towards Dynamic Theory of Mind: Evaluating LLM Adaptation to Temporal Evolution of Human States (2025)

- Rethinking Theory of Mind Benchmarks for LLMs: Towards A User-Centered Perspective (2025)

- Why We Feel: Breaking Boundaries in Emotional Reasoning with Multimodal Large Language Models (2025)

- Theory of Mind in Large Language Models: Assessment and Enhancement (2025)

- AI Awareness (2025)
