Spaces:

Running

Running

Upload 141 files

Browse filesThis view is limited to 50 files because it contains too many changes.

See raw diff

- .gitattributes +50 -0

- .gitignore +8 -0

- LICENSE +21 -0

- README.md +78 -0

- app.py +86 -0

- requirements.txt +26 -0

- src/audeo/Midi_synth.py +165 -0

- src/audeo/README.md +67 -0

- src/audeo/Roll2MidiNet.py +139 -0

- src/audeo/Roll2MidiNet_enhance.py +164 -0

- src/audeo/Roll2Midi_dataset.py +160 -0

- src/audeo/Roll2Midi_dataset_tv2a_eval.py +118 -0

- src/audeo/Roll2Midi_evaluate.py +126 -0

- src/audeo/Roll2Midi_evaluate_tv2a.py +93 -0

- src/audeo/Roll2Midi_inference.py +100 -0

- src/audeo/Roll2Midi_train.py +280 -0

- src/audeo/Video2RollNet.py +264 -0

- src/audeo/Video2Roll_dataset.py +148 -0

- src/audeo/Video2Roll_evaluate.py +90 -0

- src/audeo/Video2Roll_inference.py +151 -0

- src/audeo/Video2Roll_solver.py +204 -0

- src/audeo/Video2Roll_train.py +26 -0

- src/audeo/Video_Id.md +30 -0

- src/audeo/balance_data.py +91 -0

- src/audeo/models/Video2Roll_50_0.4/14.pth +3 -0

- src/audeo/piano_coords.py +9 -0

- src/audeo/thumbnail_image.png +3 -0

- src/audeo/videomae_fintune.ipynb +0 -0

- src/audioldm/__init__.py +8 -0

- src/audioldm/__main__.py +183 -0

- src/audioldm/audio/__init__.py +2 -0

- src/audioldm/audio/audio_processing.py +100 -0

- src/audioldm/audio/stft.py +186 -0

- src/audioldm/audio/tools.py +85 -0

- src/audioldm/clap/__init__.py +0 -0

- src/audioldm/clap/encoders.py +170 -0

- src/audioldm/clap/open_clip/__init__.py +25 -0

- src/audioldm/clap/open_clip/bert.py +40 -0

- src/audioldm/clap/open_clip/bpe_simple_vocab_16e6.txt.gz +3 -0

- src/audioldm/clap/open_clip/factory.py +279 -0

- src/audioldm/clap/open_clip/feature_fusion.py +192 -0

- src/audioldm/clap/open_clip/htsat.py +1308 -0

- src/audioldm/clap/open_clip/linear_probe.py +66 -0

- src/audioldm/clap/open_clip/loss.py +398 -0

- src/audioldm/clap/open_clip/model.py +936 -0

- src/audioldm/clap/open_clip/model_configs/HTSAT-base.json +23 -0

- src/audioldm/clap/open_clip/model_configs/HTSAT-large.json +23 -0

- src/audioldm/clap/open_clip/model_configs/HTSAT-tiny-win-1536.json +23 -0

- src/audioldm/clap/open_clip/model_configs/HTSAT-tiny.json +23 -0

- src/audioldm/clap/open_clip/model_configs/PANN-10.json +23 -0

.gitattributes

ADDED

|

@@ -0,0 +1,50 @@

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

| 1 |

+

*.7z filter=lfs diff=lfs merge=lfs -text

|

| 2 |

+

*.arrow filter=lfs diff=lfs merge=lfs -text

|

| 3 |

+

*.bin filter=lfs diff=lfs merge=lfs -text

|

| 4 |

+

*.bz2 filter=lfs diff=lfs merge=lfs -text

|

| 5 |

+

*.ckpt filter=lfs diff=lfs merge=lfs -text

|

| 6 |

+

*.ftz filter=lfs diff=lfs merge=lfs -text

|

| 7 |

+

*.gz filter=lfs diff=lfs merge=lfs -text

|

| 8 |

+

*.h5 filter=lfs diff=lfs merge=lfs -text

|

| 9 |

+

*.joblib filter=lfs diff=lfs merge=lfs -text

|

| 10 |

+

*.lfs.* filter=lfs diff=lfs merge=lfs -text

|

| 11 |

+

*.mlmodel filter=lfs diff=lfs merge=lfs -text

|

| 12 |

+

*.model filter=lfs diff=lfs merge=lfs -text

|

| 13 |

+

*.msgpack filter=lfs diff=lfs merge=lfs -text

|

| 14 |

+

*.npy filter=lfs diff=lfs merge=lfs -text

|

| 15 |

+

*.npz filter=lfs diff=lfs merge=lfs -text

|

| 16 |

+

*.onnx filter=lfs diff=lfs merge=lfs -text

|

| 17 |

+

*.ot filter=lfs diff=lfs merge=lfs -text

|

| 18 |

+

*.parquet filter=lfs diff=lfs merge=lfs -text

|

| 19 |

+

*.pb filter=lfs diff=lfs merge=lfs -text

|

| 20 |

+

*.pickle filter=lfs diff=lfs merge=lfs -text

|

| 21 |

+

*.pkl filter=lfs diff=lfs merge=lfs -text

|

| 22 |

+

*.pt filter=lfs diff=lfs merge=lfs -text

|

| 23 |

+

*.pth filter=lfs diff=lfs merge=lfs -text

|

| 24 |

+

*.rar filter=lfs diff=lfs merge=lfs -text

|

| 25 |

+

*.safetensors filter=lfs diff=lfs merge=lfs -text

|

| 26 |

+

saved_model/**/* filter=lfs diff=lfs merge=lfs -text

|

| 27 |

+

*.tar.* filter=lfs diff=lfs merge=lfs -text

|

| 28 |

+

*.tar filter=lfs diff=lfs merge=lfs -text

|

| 29 |

+

*.tflite filter=lfs diff=lfs merge=lfs -text

|

| 30 |

+

*.tgz filter=lfs diff=lfs merge=lfs -text

|

| 31 |

+

*.wasm filter=lfs diff=lfs merge=lfs -text

|

| 32 |

+

*.xz filter=lfs diff=lfs merge=lfs -text

|

| 33 |

+

*.zip filter=lfs diff=lfs merge=lfs -text

|

| 34 |

+

*.zst filter=lfs diff=lfs merge=lfs -text

|

| 35 |

+

*tfevents* filter=lfs diff=lfs merge=lfs -text

|

| 36 |

+

Video-to-Audio-and-Piano-HF/src/audeo/thumbnail_image.png filter=lfs diff=lfs merge=lfs -text

|

| 37 |

+

Video-to-Audio-and-Piano-HF/tests/piano_2h_cropped2_cuts/nwwHuxHMIpc.00000000.mp4 filter=lfs diff=lfs merge=lfs -text

|

| 38 |

+

Video-to-Audio-and-Piano-HF/tests/piano_2h_cropped2_cuts/nwwHuxHMIpc.00000001.mp4 filter=lfs diff=lfs merge=lfs -text

|

| 39 |

+

Video-to-Audio-and-Piano-HF/tests/scps/tango-master/data/audiocaps/train_audiocaps.json filter=lfs diff=lfs merge=lfs -text

|

| 40 |

+

Video-to-Audio-and-Piano-HF/tests/scps/tango-master/data/train_audioset_sl.json filter=lfs diff=lfs merge=lfs -text

|

| 41 |

+

Video-to-Audio-and-Piano-HF/tests/scps/tango-master/data/train_bbc_sound_effects.json filter=lfs diff=lfs merge=lfs -text

|

| 42 |

+

Video-to-Audio-and-Piano-HF/tests/scps/tango-master/data/train_val_audioset_sl.json filter=lfs diff=lfs merge=lfs -text

|

| 43 |

+

Video-to-Audio-and-Piano-HF/tests/scps/VGGSound/train.scp filter=lfs diff=lfs merge=lfs -text

|

| 44 |

+

Video-to-Audio-and-Piano-HF/tests/VGGSound/video/1u1orBeV4xI_000428.mp4 filter=lfs diff=lfs merge=lfs -text

|

| 45 |

+

Video-to-Audio-and-Piano-HF/tests/VGGSound/video/1uCzQCdCC1U_000170.mp4 filter=lfs diff=lfs merge=lfs -text

|

| 46 |

+

src/audeo/thumbnail_image.png filter=lfs diff=lfs merge=lfs -text

|

| 47 |

+

tests/piano_2h_cropped2_cuts/nwwHuxHMIpc.00000000.mp4 filter=lfs diff=lfs merge=lfs -text

|

| 48 |

+

tests/piano_2h_cropped2_cuts/nwwHuxHMIpc.00000001.mp4 filter=lfs diff=lfs merge=lfs -text

|

| 49 |

+

tests/VGGSound/video/1u1orBeV4xI_000428.mp4 filter=lfs diff=lfs merge=lfs -text

|

| 50 |

+

tests/VGGSound/video/1uCzQCdCC1U_000170.mp4 filter=lfs diff=lfs merge=lfs -text

|

.gitignore

ADDED

|

@@ -0,0 +1,8 @@

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

| 1 |

+

**/__pycache__

|

| 2 |

+

src/audeo/data/

|

| 3 |

+

ckpts/

|

| 4 |

+

outputs/

|

| 5 |

+

outputs_piano/

|

| 6 |

+

outputs_vgg/

|

| 7 |

+

src/train*

|

| 8 |

+

src/inference3*

|

LICENSE

ADDED

|

@@ -0,0 +1,21 @@

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

| 1 |

+

MIT License

|

| 2 |

+

|

| 3 |

+

Copyright (c) 2024 Phil Wang

|

| 4 |

+

|

| 5 |

+

Permission is hereby granted, free of charge, to any person obtaining a copy

|

| 6 |

+

of this software and associated documentation files (the "Software"), to deal

|

| 7 |

+

in the Software without restriction, including without limitation the rights

|

| 8 |

+

to use, copy, modify, merge, publish, distribute, sublicense, and/or sell

|

| 9 |

+

copies of the Software, and to permit persons to whom the Software is

|

| 10 |

+

furnished to do so, subject to the following conditions:

|

| 11 |

+

|

| 12 |

+

The above copyright notice and this permission notice shall be included in all

|

| 13 |

+

copies or substantial portions of the Software.

|

| 14 |

+

|

| 15 |

+

THE SOFTWARE IS PROVIDED "AS IS", WITHOUT WARRANTY OF ANY KIND, EXPRESS OR

|

| 16 |

+

IMPLIED, INCLUDING BUT NOT LIMITED TO THE WARRANTIES OF MERCHANTABILITY,

|

| 17 |

+

FITNESS FOR A PARTICULAR PURPOSE AND NONINFRINGEMENT. IN NO EVENT SHALL THE

|

| 18 |

+

AUTHORS OR COPYRIGHT HOLDERS BE LIABLE FOR ANY CLAIM, DAMAGES OR OTHER

|

| 19 |

+

LIABILITY, WHETHER IN AN ACTION OF CONTRACT, TORT OR OTHERWISE, ARISING FROM,

|

| 20 |

+

OUT OF OR IN CONNECTION WITH THE SOFTWARE OR THE USE OR OTHER DEALINGS IN THE

|

| 21 |

+

SOFTWARE.

|

README.md

ADDED

|

@@ -0,0 +1,78 @@

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

| 1 |

+

---

|

| 2 |

+

title: DeepAudio-V1

|

| 3 |

+

emoji: 🔊

|

| 4 |

+

colorFrom: blue

|

| 5 |

+

colorTo: indigo

|

| 6 |

+

sdk: gradio

|

| 7 |

+

app_file: app.py

|

| 8 |

+

pinned: false

|

| 9 |

+

---

|

| 10 |

+

|

| 11 |

+

|

| 12 |

+

## Enhance Generation Quality of Flow Matching V2A Model via Multi-Step CoT-Like Guidance and Combined Preference Optimization

|

| 13 |

+

## Towards Video to Piano Music Generation with Chain-of-Perform Support Benchmarks

|

| 14 |

+

|

| 15 |

+

## Results

|

| 16 |

+

|

| 17 |

+

**1. Results of Video-to-Audio Synthesis**

|

| 18 |

+

|

| 19 |

+

https://github.com/user-attachments/assets/d6761371-8fc2-427c-8b2b-6d2ac22a2db2

|

| 20 |

+

|

| 21 |

+

https://github.com/user-attachments/assets/50b33e54-8ba1-4fab-89d3-5a5cc4c22c9a

|

| 22 |

+

|

| 23 |

+

**2. Results of Video-to-Piano Synthesis**

|

| 24 |

+

|

| 25 |

+

https://github.com/user-attachments/assets/b6218b94-1d58-4dc5-873a-c3e8eef6cd67

|

| 26 |

+

|

| 27 |

+

https://github.com/user-attachments/assets/ebdd1d95-2d9e-4add-b61a-d181f0ae38d0

|

| 28 |

+

|

| 29 |

+

|

| 30 |

+

## Installation

|

| 31 |

+

|

| 32 |

+

**1. Create a conda environment**

|

| 33 |

+

|

| 34 |

+

```bash

|

| 35 |

+

conda create -n v2ap python=3.10

|

| 36 |

+

conda activate v2ap

|

| 37 |

+

```

|

| 38 |

+

|

| 39 |

+

**2. Install requirements**

|

| 40 |

+

|

| 41 |

+

```bash

|

| 42 |

+

pip install -r requirements.txt

|

| 43 |

+

```

|

| 44 |

+

|

| 45 |

+

|

| 46 |

+

**Pretrained models**

|

| 47 |

+

|

| 48 |

+

The models are available at https://huggingface.co/lshzhm/Video-to-Audio-and-Piano/tree/main.

|

| 49 |

+

|

| 50 |

+

|

| 51 |

+

## Inference

|

| 52 |

+

|

| 53 |

+

**1. Video-to-Audio inference**

|

| 54 |

+

|

| 55 |

+

```bash

|

| 56 |

+

python src/inference_v2a.py

|

| 57 |

+

```

|

| 58 |

+

|

| 59 |

+

**2. Video-to-Piano inference**

|

| 60 |

+

|

| 61 |

+

```bash

|

| 62 |

+

python src/inference_v2p.py

|

| 63 |

+

```

|

| 64 |

+

|

| 65 |

+

## Dateset is in progress

|

| 66 |

+

|

| 67 |

+

|

| 68 |

+

## Metrix

|

| 69 |

+

|

| 70 |

+

|

| 71 |

+

## Acknowledgement

|

| 72 |

+

|

| 73 |

+

- [Audeo](https://github.com/shlizee/Audeo) for video to midi prediction

|

| 74 |

+

- [E2TTS](https://github.com/lucidrains/e2-tts-pytorch) for CFM structure and base E2 implementation

|

| 75 |

+

- [FLAN-T5](https://huggingface.co/google/flan-t5-large) for FLAN-T5 text encode

|

| 76 |

+

- [CLIP](https://huggingface.co/laion/CLIP-ViT-bigG-14-laion2B-39B-b160k) for CLIP image encode

|

| 77 |

+

- [AudioLDM Eval](https://github.com/haoheliu/audioldm_eval) for audio evaluation

|

| 78 |

+

|

app.py

ADDED

|

@@ -0,0 +1,86 @@

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

| 1 |

+

import os

|

| 2 |

+

try:

|

| 3 |

+

import torchaudio

|

| 4 |

+

except ImportError:

|

| 5 |

+

os.system("cd ./F5-TTS; pip install -e .")

|

| 6 |

+

|

| 7 |

+

|

| 8 |

+

import spaces

|

| 9 |

+

import logging

|

| 10 |

+

from datetime import datetime

|

| 11 |

+

from pathlib import Path

|

| 12 |

+

|

| 13 |

+

import gradio as gr

|

| 14 |

+

import torch

|

| 15 |

+

import torchaudio

|

| 16 |

+

|

| 17 |

+

import tempfile

|

| 18 |

+

|

| 19 |

+

import requests

|

| 20 |

+

import shutil

|

| 21 |

+

import numpy as np

|

| 22 |

+

|

| 23 |

+

from huggingface_hub import hf_hub_download

|

| 24 |

+

|

| 25 |

+

model_path = "./ckpts/"

|

| 26 |

+

|

| 27 |

+

if not os.path.exists(model_path):

|

| 28 |

+

os.makedirs(model_path)

|

| 29 |

+

|

| 30 |

+

file_path = hf_hub_download(repo_id="lshzhm/Video-to-Audio-and-Piano", local_dir=model_path)

|

| 31 |

+

|

| 32 |

+

print(f"Model saved at: {file_path}")

|

| 33 |

+

|

| 34 |

+

log = logging.getLogger()

|

| 35 |

+

|

| 36 |

+

|

| 37 |

+

#@spaces.GPU(duration=120)

|

| 38 |

+

def video_to_audio(video: gr.Video, prompt: str, num_steps: int):

|

| 39 |

+

|

| 40 |

+

|

| 41 |

+

return video_save_path, video_gen

|

| 42 |

+

|

| 43 |

+

|

| 44 |

+

def video_to_piano(video: gr.Video, prompt: str, num_steps: int):

|

| 45 |

+

|

| 46 |

+

return video_save_path, video_gen

|

| 47 |

+

|

| 48 |

+

|

| 49 |

+

video_to_audio_and_speech_tab = gr.Interface(

|

| 50 |

+

fn=video_to_audio_and_speech,

|

| 51 |

+

description="""

|

| 52 |

+

Project page: <a href="https://acappemin.github.io/DeepAudio-V1.github.io">https://acappemin.github.io/DeepAudio-V1.github.io</a><br>

|

| 53 |

+

Code: <a href="https://github.com/acappemin/DeepAudio-V1">https://github.com/acappemin/DeepAudio-V1</a><br>

|

| 54 |

+

""",

|

| 55 |

+

inputs=[

|

| 56 |

+

gr.Video(label="Input Video"),

|

| 57 |

+

gr.Text(label='Video-to-Audio Text Prompt'),

|

| 58 |

+

gr.Number(label='Video-to-Audio Num Steps', value=64, precision=0, minimum=1),

|

| 59 |

+

gr.Text(label='Video-to-Speech Transcription'),

|

| 60 |

+

gr.Audio(label='Video-to-Speech Speech Prompt'),

|

| 61 |

+

gr.Text(label='Video-to-Speech Speech Prompt Transcription'),

|

| 62 |

+

gr.Number(label='Video-to-Speech Num Steps', value=64, precision=0, minimum=1),

|

| 63 |

+

],

|

| 64 |

+

outputs=[

|

| 65 |

+

gr.Video(label="Video-to-Audio Output"),

|

| 66 |

+

gr.Video(label="Video-to-Speech Output"),

|

| 67 |

+

],

|

| 68 |

+

cache_examples=False,

|

| 69 |

+

title='Video-to-Audio-and-Speech',

|

| 70 |

+

examples=[

|

| 71 |

+

[

|

| 72 |

+

'./tests/VGGSound/video/1u1orBeV4xI_000428.mp4',

|

| 73 |

+

'',

|

| 74 |

+

64,

|

| 75 |

+

],

|

| 76 |

+

[

|

| 77 |

+

'./tests/VGGSound/video/1uCzQCdCC1U_000170.mp4',

|

| 78 |

+

'',

|

| 79 |

+

64,

|

| 80 |

+

],

|

| 81 |

+

])

|

| 82 |

+

|

| 83 |

+

|

| 84 |

+

if __name__ == "__main__":

|

| 85 |

+

gr.TabbedInterface([video_to_audio_and_speech_tab], ['Video-to-Audio-and-Speech']).launch()

|

| 86 |

+

|

requirements.txt

ADDED

|

@@ -0,0 +1,26 @@

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

| 1 |

+

accelerate==0.34.2

|

| 2 |

+

beartype==0.18.5

|

| 3 |

+

einops==0.8.0

|

| 4 |

+

einx==0.3.0

|

| 5 |

+

ema-pytorch==0.6.2

|

| 6 |

+

g2p-en==2.1.0

|

| 7 |

+

jaxtyping==0.2.34

|

| 8 |

+

loguru==0.7.2

|

| 9 |

+

tensorboard==2.18.0

|

| 10 |

+

torch==2.4.1

|

| 11 |

+

torchaudio==2.4.1

|

| 12 |

+

torchdiffeq==0.2.4

|

| 13 |

+

torchlibrosa==0.1.0

|

| 14 |

+

torchmetrics==1.6.1

|

| 15 |

+

torchvision==0.19.1

|

| 16 |

+

numpy==1.23.5

|

| 17 |

+

tqdm==4.66.5

|

| 18 |

+

vocos==0.1.0

|

| 19 |

+

x-transformers==1.37.4

|

| 20 |

+

transformers==4.46.0

|

| 21 |

+

moviepy==1.0.3

|

| 22 |

+

jieba==0.42.1

|

| 23 |

+

pypinyin==0.44.0

|

| 24 |

+

progressbar==2.5

|

| 25 |

+

datasets==3.0.1

|

| 26 |

+

matplotlib==3.9.2

|

src/audeo/Midi_synth.py

ADDED

|

@@ -0,0 +1,165 @@

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

| 1 |

+

import os

|

| 2 |

+

import numpy as np

|

| 3 |

+

os.environ["LD_PRELOAD"] = "/usr/lib/x86_64-linux-gnu/libffi.so.7"

|

| 4 |

+

import pretty_midi

|

| 5 |

+

import glob

|

| 6 |

+

import librosa

|

| 7 |

+

import soundfile as sf

|

| 8 |

+

|

| 9 |

+

# Synthesizing Audio using Fluid Synth

|

| 10 |

+

class MIDISynth():

|

| 11 |

+

def __init__(self, out_folder, video_name, instrument, midi=True):

|

| 12 |

+

self.video_name = video_name

|

| 13 |

+

# synthesize midi or roll

|

| 14 |

+

self.midi = False

|

| 15 |

+

# synthsized output dir, change to your own path

|

| 16 |

+

self.syn_dir = '/ailab-train/speech/shansizhe/audeo/data/Midi_Synth/training/'

|

| 17 |

+

self.min_key = 15

|

| 18 |

+

self.max_key = 65

|

| 19 |

+

self.frame = 50

|

| 20 |

+

self.piano_keys = 88

|

| 21 |

+

if self.midi:

|

| 22 |

+

self.midi_out_folder = out_folder + video_name

|

| 23 |

+

self.syn_dir = self.syn_dir + 'w_Roll2Midi/'

|

| 24 |

+

self.process_midi()

|

| 25 |

+

else:

|

| 26 |

+

self.est_roll_folder = out_folder + video_name

|

| 27 |

+

self.syn_dir = self.syn_dir + 'wo_Roll2Midi/'

|

| 28 |

+

self.process_roll()

|

| 29 |

+

self.spf = 0.04 # second per frame

|

| 30 |

+

self.sample_rate = 16000

|

| 31 |

+

self.ins = instrument

|

| 32 |

+

|

| 33 |

+

def process_roll(self):

|

| 34 |

+

self.wo_Roll2Midi_data = []

|

| 35 |

+

self.est_roll_files = glob.glob(self.est_roll_folder + '/*.npz')

|

| 36 |

+

self.est_roll_files.sort(key=lambda x: int(x.split('/')[-1].split('.')[0].split('-')[0]))

|

| 37 |

+

|

| 38 |

+

# Use the Roll prediction for Synthesis

|

| 39 |

+

print("need to process {0} files".format(len(self.est_roll_folder)))

|

| 40 |

+

for i in range(len(self.est_roll_files)):

|

| 41 |

+

with np.load(self.est_roll_files[i]) as data:

|

| 42 |

+

est_roll = data['roll']

|

| 43 |

+

if est_roll.shape[0] != self.frame:

|

| 44 |

+

target = np.zeros((self.frame, self.piano_keys))

|

| 45 |

+

target[:est_roll.shape[0], :] = est_roll

|

| 46 |

+

est_roll = target

|

| 47 |

+

est_roll = np.where(est_roll > 0, 1, 0)

|

| 48 |

+

self.wo_Roll2Midi_data.append(est_roll)

|

| 49 |

+

self.complete_wo_Roll2Midi_midi = np.concatenate(self.wo_Roll2Midi_data)

|

| 50 |

+

print("Without Roll2MidiNet, the Roll result has shape:", self.complete_wo_Roll2Midi_midi.shape)

|

| 51 |

+

# compute onsets and offsets

|

| 52 |

+

onset = np.zeros(self.complete_wo_Roll2Midi_midi.shape)

|

| 53 |

+

offset = np.zeros(self.complete_wo_Roll2Midi_midi.shape)

|

| 54 |

+

for j in range(self.complete_wo_Roll2Midi_midi.shape[0]):

|

| 55 |

+

if j != 0:

|

| 56 |

+

onset[j][np.setdiff1d(self.complete_wo_Roll2Midi_midi[j].nonzero(),

|

| 57 |

+

self.complete_wo_Roll2Midi_midi[j - 1].nonzero())] = 1

|

| 58 |

+

offset[j][np.setdiff1d(self.complete_wo_Roll2Midi_midi[j - 1].nonzero(),

|

| 59 |

+

self.complete_wo_Roll2Midi_midi[j].nonzero())] = -1

|

| 60 |

+

else:

|

| 61 |

+

onset[j][self.complete_wo_Roll2Midi_midi[j].nonzero()] = 1

|

| 62 |

+

onset += offset

|

| 63 |

+

self.complete_wo_Roll2Midi_onset = onset.T

|

| 64 |

+

print("Without Roll2MidiNet, the onset has shape:", self.complete_wo_Roll2Midi_onset.shape)

|

| 65 |

+

|

| 66 |

+

def process_midi(self):

|

| 67 |

+

self.w_Roll2Midi_data = []

|

| 68 |

+

self.infer_out_files = glob.glob(self.midi_out_folder + '/*.npz')

|

| 69 |

+

self.infer_out_files.sort(key=lambda x: int(x.split('/')[-1].split('.')[0].split('-')[0]))

|

| 70 |

+

|

| 71 |

+

# Use the Midi prediction for Synthesis

|

| 72 |

+

for i in range(len(self.infer_out_files)):

|

| 73 |

+

with np.load(self.infer_out_files[i]) as data:

|

| 74 |

+

est_midi = data['midi']

|

| 75 |

+

target = np.zeros((self.frame, self.piano_keys))

|

| 76 |

+

target[:est_midi.shape[0], self.min_key:self.max_key+1] = est_midi

|

| 77 |

+

est_midi = target

|

| 78 |

+

est_midi = np.where(est_midi > 0, 1, 0)

|

| 79 |

+

self.w_Roll2Midi_data.append(est_midi)

|

| 80 |

+

self.complete_w_Roll2Midi_midi = np.concatenate(self.w_Roll2Midi_data)

|

| 81 |

+

print("With Roll2MidiNet Midi, the Midi result has shape:", self.complete_w_Roll2Midi_midi.shape)

|

| 82 |

+

# compute onsets and offsets

|

| 83 |

+

onset = np.zeros(self.complete_w_Roll2Midi_midi.shape)

|

| 84 |

+

offset = np.zeros(self.complete_w_Roll2Midi_midi.shape)

|

| 85 |

+

for j in range(self.complete_w_Roll2Midi_midi.shape[0]):

|

| 86 |

+

if j != 0:

|

| 87 |

+

onset[j][np.setdiff1d(self.complete_w_Roll2Midi_midi[j].nonzero(),

|

| 88 |

+

self.complete_w_Roll2Midi_midi[j - 1].nonzero())] = 1

|

| 89 |

+

offset[j][np.setdiff1d(self.complete_w_Roll2Midi_midi[j - 1].nonzero(),

|

| 90 |

+

self.complete_w_Roll2Midi_midi[j].nonzero())] = -1

|

| 91 |

+

else:

|

| 92 |

+

onset[j][self.complete_w_Roll2Midi_midi[j].nonzero()] = 1

|

| 93 |

+

onset += offset

|

| 94 |

+

self.complete_w_Roll2Midi_onset = onset.T

|

| 95 |

+

print("With Roll2MidiNet, the onset has shape:", self.complete_w_Roll2Midi_onset.shape)

|

| 96 |

+

|

| 97 |

+

def GetNote(self):

|

| 98 |

+

if self.midi:

|

| 99 |

+

self.w_Roll2Midi_notes = {}

|

| 100 |

+

for i in range(self.complete_w_Roll2Midi_onset.shape[0]):

|

| 101 |

+

tmp = self.complete_w_Roll2Midi_onset[i]

|

| 102 |

+

start = np.where(tmp == 1)[0]

|

| 103 |

+

end = np.where(tmp == -1)[0]

|

| 104 |

+

if len(start) != len(end):

|

| 105 |

+

end = np.append(end, tmp.shape)

|

| 106 |

+

merged_list = [(start[i], end[i]) for i in range(0, len(start))]

|

| 107 |

+

# 21 is the lowest piano key in the Midi note number (Midi has 128 notes)

|

| 108 |

+

self.w_Roll2Midi_notes[21 + i] = merged_list

|

| 109 |

+

else:

|

| 110 |

+

self.wo_Roll2Midi_notes = {}

|

| 111 |

+

for i in range(self.complete_wo_Roll2Midi_onset.shape[0]):

|

| 112 |

+

tmp = self.complete_wo_Roll2Midi_onset[i]

|

| 113 |

+

start = np.where(tmp==1)[0]

|

| 114 |

+

end = np.where(tmp==-1)[0]

|

| 115 |

+

if len(start)!=len(end):

|

| 116 |

+

end = np.append(end, tmp.shape)

|

| 117 |

+

merged_list = [(start[i], end[i]) for i in range(0, len(start))]

|

| 118 |

+

self.wo_Roll2Midi_notes[21 + i] = merged_list

|

| 119 |

+

|

| 120 |

+

|

| 121 |

+

|

| 122 |

+

def Synthesize(self):

|

| 123 |

+

if self.midi:

|

| 124 |

+

wav = self.generate_midi(self.w_Roll2Midi_notes, self.ins)

|

| 125 |

+

path = self.create_output_dir()

|

| 126 |

+

out_file = path + f'/Midi-{self.video_name}-{self.ins}.wav'

|

| 127 |

+

#librosa.output.write_wav(out_file, wav, sr=self.sample_rate)

|

| 128 |

+

sf.write(out_file, wav, self.sample_rate)

|

| 129 |

+

else:

|

| 130 |

+

wav = self.generate_midi(self.wo_Roll2Midi_notes, self.ins)

|

| 131 |

+

path = self.create_output_dir()

|

| 132 |

+

out_file = path + f'/Roll-{self.video_name}-{self.ins}.wav'

|

| 133 |

+

#librosa.output.write_wav(out_file, wav, sr=self.sample_rate)

|

| 134 |

+

sf.write(out_file, wav, self.sample_rate)

|

| 135 |

+

|

| 136 |

+

def generate_midi(self, notes, ins):

|

| 137 |

+

pm = pretty_midi.PrettyMIDI(initial_tempo=80)

|

| 138 |

+

piano_program = pretty_midi.instrument_name_to_program(ins) #Acoustic Grand Piano

|

| 139 |

+

piano = pretty_midi.Instrument(program=piano_program)

|

| 140 |

+

for key in list(notes.keys()):

|

| 141 |

+

values = notes[key]

|

| 142 |

+

for i in range(len(values)):

|

| 143 |

+

start, end = values[i]

|

| 144 |

+

note = pretty_midi.Note(velocity=100, pitch=key, start=start * self.spf, end=end * self.spf)

|

| 145 |

+

piano.notes.append(note)

|

| 146 |

+

pm.instruments.append(piano)

|

| 147 |

+

wav = pm.fluidsynth(fs=16000)

|

| 148 |

+

return wav

|

| 149 |

+

|

| 150 |

+

def create_output_dir(self):

|

| 151 |

+

synth_out_dir = os.path.join(self.syn_dir, self.video_name)

|

| 152 |

+

os.makedirs(synth_out_dir, exist_ok=True)

|

| 153 |

+

return synth_out_dir

|

| 154 |

+

|

| 155 |

+

if __name__ == "__main__":

|

| 156 |

+

# could select any instrument available in Midi

|

| 157 |

+

instrument = 'Acoustic Grand Piano'

|

| 158 |

+

for i in [1,3,4,5,6,7,8,9,10,11,12,13,14,15,16,17,19,21,22,23,24,25,26,27]:

|

| 159 |

+

video_name = f'{i}'

|

| 160 |

+

#print(video_name)

|

| 161 |

+

Midi_out_folder = '/ailab-train/speech/shansizhe/audeo/data/estimate_Roll/training/'# Generated Midi output folder, change to your own path

|

| 162 |

+

Synth = MIDISynth(Midi_out_folder, video_name, instrument)

|

| 163 |

+

Synth.GetNote()

|

| 164 |

+

Synth.Synthesize()

|

| 165 |

+

|

src/audeo/README.md

ADDED

|

@@ -0,0 +1,67 @@

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

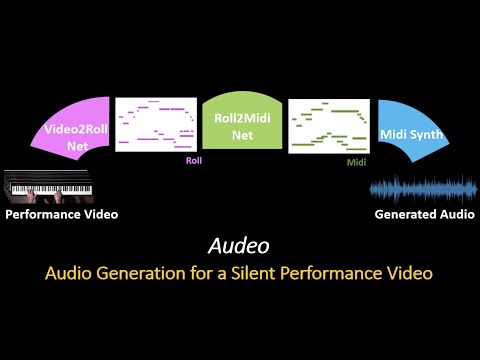

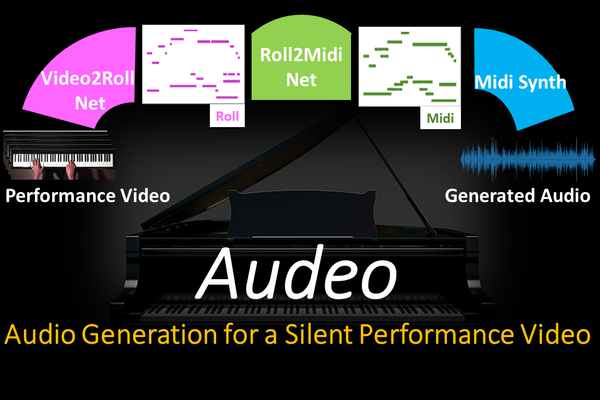

| 1 |

+

# Audeo

|

| 2 |

+

|

| 3 |

+

## Introduction

|

| 4 |

+

This repository contains the code for the paper **"Audeo: Audio Generation for a Silent Performance Video"**, which is avilable [here](https://proceedings.neurips.cc/paper/2020/file/227f6afd3b7f89b96c4bb91f95d50f6d-Paper.pdf), published in NeurIPS 2020. More samples can be found in our [project webpage](http://faculty.washington.edu/shlizee/audeo/) and [Youtube Video](https://www.youtube.com/watch?v=8rS3VgjG7_c).

|

| 5 |

+

|

| 6 |

+

[](https://www.youtube.com/watch?v=8rS3VgjG7_c)

|

| 7 |

+

|

| 8 |

+

## Abstract

|

| 9 |

+

We present a novel system that gets as an input, video frames of a musician playing the piano, and generates the music for that video. The generation of music from

|

| 10 |

+

visual cues is a challenging problem and it is not clear whether it is an attainable goal at all. Our main aim in this work is to explore the plausibility of such a

|

| 11 |

+

transformation and to identify cues and components able to carry the association of sounds with visual events. To achieve the transformation we built a full pipeline

|

| 12 |

+

named ‘Audeo’ containing three components. We first translate the video frames of the keyboard and the musician hand movements into raw mechanical musical

|

| 13 |

+

symbolic representation Piano-Roll (Roll) for each video frame which represents the keys pressed at each time step. We then adapt the Roll to be amenable for audio

|

| 14 |

+

synthesis by including temporal correlations. This step turns out to be critical for meaningful audio generation. In the last step, we implement Midi synthesizers

|

| 15 |

+

to generate realistic music. Audeo converts video to audio smoothly and clearly with only a few setup constraints. We evaluate Audeo on piano performance videos

|

| 16 |

+

collected from Youtube and obtain that their generated music is of reasonable audio quality and can be successfully recognized with high precision by popular

|

| 17 |

+

music identification software.

|

| 18 |

+

|

| 19 |

+

## Data

|

| 20 |

+

We use Youtube Channel videos recorded by [Paul Barton](https://www.youtube.com/user/PaulBartonPiano) to evaluate the Audeo pipeline. For **Pseudo Midi Evaluation**, we use 24 videos of Bach Well-Tempered Clavier Book One (WTC B1). The testing set contains the first 3 Prelude and Fugue performances of Bach Well-Tempered Clavier Book Two (WTC B2) The Youtube Video Id can be found in [here](https://github.com/shlizee/Audeo/blob/master/Video_Id.md). For **Audio Evaluation**, we use 35 videos from WTC B2 (24 Prelude and Fugue pairs and their 11 variants), 8 videos from WTC B1 Variants, and 9 videos from other composers. Since we cannot host the videos due to copyright issues, you need to download the videos yourself.

|

| 21 |

+

|

| 22 |

+

All videos are set at the frame of 25 fps and the audio sampling rate of 16kHz. The **Pseudo GT Midi** are obtained via [Onsets and Frames framework (OF)](https://github.com/magenta/magenta/tree/master/magenta/models/onsets_frames_transcription). We process all videos and keep the full keyboard only and remove all frames that do not contribute to the piano performance (e.g., logos, black screens, etc). The **cropped piano coordinates** can be found in [here](https://github.com/shlizee/Audeo/blob/master/piano_coords.py) (The order is the same as in **Video_Id** file. We trim the initial silent sections up to the first frame in which the first key is being pressed, to align the video, Pseudo GT Midi, and the audio. All silent frames inside each performance are kept.

|

| 23 |

+

|

| 24 |

+

For your convenience, we provide the following folders/files in [Google Drive](https://drive.google.com/drive/folders/1w9wsZM-tPPUVqwdpsefEkrDgkN3kfg7G?usp=sharing):

|

| 25 |

+

- **input_images**: examples of how the images data should look like.

|

| 26 |

+

- **labels**: training and testing labels of for training/testing Video2Roll Net. Each folder contains a **pkl** file for one video. The labels are dictionaries where **key** is the **frame number** and **value** is a 88 dim vector. See **Video2Roll_dataset.py** for more details.

|

| 27 |

+

- **OF_midi_files**: the original Pseudo ground truth midi files obtained from **Onsets and Frames Framework**.

|

| 28 |

+

- **midi**: we process the Pseudo GT Midi files to 2D matrix (Piano keys x Time) and down-sampled to 25 fps. Then for each video, we divide them into multiple 2 seconds (50 frames) segments. For example **253-303.npz** includes the 2D matrix from frame 253 to frame 302.

|

| 29 |

+

- **estimate_Roll**: the **Roll** predictions obtained from **Video2Roll Net**. Same format as the **midi**. You can directly use them for training **Roll2Midi Net**.

|

| 30 |

+

- **Roll2Midi_results**: the **Midi** predictions obtained from **Roll2Midi Net**. Same format as the **midi** and **estimate_Roll**. Ready for **Midy Synth**.

|

| 31 |

+

- **Midi_Synth**: synthesized audios from **Roll2Midi_results**.

|

| 32 |

+

- **Video2Roll_models**: contains the pre-trained **Video2RollNet.pth**.

|

| 33 |

+

- **Roll2Midi_models**: contains the pre-trained **Roll2Midi Net**.

|

| 34 |

+

|

| 35 |

+

## How to Use

|

| 36 |

+

- Video2Roll Net

|

| 37 |

+

1. Please check the **Video2Roll_dataset.py** and make sure you satisfy the data formats.

|

| 38 |

+

2. Run **Video2Roll_train.py** for training.

|

| 39 |

+

3. Run **Video2Roll_evaluate.py** for evaluation.

|

| 40 |

+

4. Run **Video2Roll_inference.py** to generate **Roll** predictions.

|

| 41 |

+

- Roll2Midi Net

|

| 42 |

+

1. Run **Roll2Midi_train.py** for training.

|

| 43 |

+

2. Run **Roll2Midi_evaluate.py** for evaluation.

|

| 44 |

+

2. Run **Roll2Midi_inference.py** to generate **Midi** predictions.

|

| 45 |

+

- Midi Synth

|

| 46 |

+

1. Run **Midi_synth.py** to use **Fluid Synth** to synthesize audio.

|

| 47 |

+

|

| 48 |

+

## Requirements

|

| 49 |

+

- Pytorch >= 1.6

|

| 50 |

+

- Python 3

|

| 51 |

+

- numpy 1.19

|

| 52 |

+

- scikit-learn 0.22.1

|

| 53 |

+

- librosa 0.7.1

|

| 54 |

+

- pretty-midi 0.2.8

|

| 55 |

+

|

| 56 |

+

## Citation

|

| 57 |

+

|

| 58 |

+

Please cite ["Audeo: Audio Generation for a Silent Performance Video"](https://proceedings.neurips.cc/paper/2020/file/227f6afd3b7f89b96c4bb91f95d50f6d-Paper.pdf) when you use this code:

|

| 59 |

+

```

|

| 60 |

+

@article{su2020audeo,

|

| 61 |

+

title={Audeo: Audio generation for a silent performance video},

|

| 62 |

+

author={Su, Kun and Liu, Xiulong and Shlizerman, Eli},

|

| 63 |

+

journal={Advances in Neural Information Processing Systems},

|

| 64 |

+

volume={33},

|

| 65 |

+

year={2020}

|

| 66 |

+

}

|

| 67 |

+

```

|

src/audeo/Roll2MidiNet.py

ADDED

|

@@ -0,0 +1,139 @@

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

| 1 |

+

import torch.nn as nn

|

| 2 |

+

import torch.nn.functional as F

|

| 3 |

+

import torch

|

| 4 |

+

##############################

|

| 5 |

+

# U-NET

|

| 6 |

+

##############################

|

| 7 |

+

class UNetDown(nn.Module):

|

| 8 |

+

def __init__(self, in_size, out_size, normalize=True, dropout=0.0):

|

| 9 |

+

super(UNetDown, self).__init__()

|

| 10 |

+

model = [nn.Conv2d(in_size, out_size, 3, stride=1, padding=1, bias=False)]

|

| 11 |

+

if normalize:

|

| 12 |

+

model.append(nn.BatchNorm2d(out_size, 0.8))

|

| 13 |

+

model.append(nn.LeakyReLU(0.2))

|

| 14 |

+

if dropout:

|

| 15 |

+

model.append(nn.Dropout(dropout))

|

| 16 |

+

|

| 17 |

+

self.model = nn.Sequential(*model)

|

| 18 |

+

|

| 19 |

+

def forward(self, x):

|

| 20 |

+

return self.model(x)

|

| 21 |

+

|

| 22 |

+

|

| 23 |

+

class UNetUp(nn.Module):

|

| 24 |

+

def __init__(self, in_size, out_size, dropout=0.0):

|

| 25 |

+

super(UNetUp, self).__init__()

|

| 26 |

+

model = [

|

| 27 |

+

nn.ConvTranspose2d(in_size, out_size, 3, stride=1, padding=1, bias=False),

|

| 28 |

+

nn.BatchNorm2d(out_size, 0.8),

|

| 29 |

+

nn.ReLU(inplace=True),

|

| 30 |

+

]

|

| 31 |

+

if dropout:

|

| 32 |

+

model.append(nn.Dropout(dropout))

|

| 33 |

+

|

| 34 |

+

self.model = nn.Sequential(*model)

|

| 35 |

+

|

| 36 |

+

def forward(self, x, skip_input):

|

| 37 |

+

x = self.model(x)

|

| 38 |

+

out = torch.cat((x, skip_input), 1)

|

| 39 |

+

return out

|

| 40 |

+

|

| 41 |

+

|

| 42 |

+

class Generator(nn.Module):

|

| 43 |

+

def __init__(self, input_shape):

|

| 44 |

+

super(Generator, self).__init__()

|

| 45 |

+

channels, _ , _ = input_shape

|

| 46 |

+

self.down1 = UNetDown(channels, 64, normalize=False)

|

| 47 |

+

self.down2 = UNetDown(64, 128)

|

| 48 |

+

self.down3 = UNetDown(128, 256, dropout=0.5)

|

| 49 |

+

self.down4 = UNetDown(256, 512, dropout=0.5)

|

| 50 |

+

self.down5 = UNetDown(512, 1024, dropout=0.5)

|

| 51 |

+

self.down6 = UNetDown(1024, 1024, dropout=0.5)

|

| 52 |

+

|

| 53 |

+

self.up1 = UNetUp(1024, 512, dropout=0.5)

|

| 54 |

+

self.up2 = UNetUp(1024+512, 256, dropout=0.5)

|

| 55 |

+

self.up3 = UNetUp(512+256, 128, dropout=0.5)

|

| 56 |

+

self.up4 = UNetUp(256+128, 64)

|

| 57 |

+

self.up5 = UNetUp(128+64, 16)

|

| 58 |

+

self.conv1d = nn.Conv2d(80, 1, kernel_size=1)

|

| 59 |

+

|

| 60 |

+

def forward(self, x):

|

| 61 |

+

# U-Net generator with skip connections from encoder to decoder

|

| 62 |

+

d1 = self.down1(x)

|

| 63 |

+

|

| 64 |

+

d2 = self.down2(d1)

|

| 65 |

+

|

| 66 |

+

d3 = self.down3(d2)

|

| 67 |

+

|

| 68 |

+

d4 = self.down4(d3)

|

| 69 |

+

|

| 70 |

+

d5 = self.down5(d4)

|

| 71 |

+

|

| 72 |

+

d6 = self.down6(d5)

|

| 73 |

+

|

| 74 |

+

u1 = self.up1(d6, d5)

|

| 75 |

+

|

| 76 |

+

u2 = self.up2(u1, d4)

|

| 77 |

+

|

| 78 |

+

u3 = self.up3(u2, d3)

|

| 79 |

+

|

| 80 |

+

u4 = self.up4(u3, d2)

|

| 81 |

+

|

| 82 |

+

u5 = self.up5(u4, d1)

|

| 83 |

+

|

| 84 |

+

out = self.conv1d(u5)

|

| 85 |

+

|

| 86 |

+

out = F.sigmoid(out)

|

| 87 |

+

return out

|

| 88 |

+

|

| 89 |

+

|

| 90 |

+

class Discriminator(nn.Module):

|

| 91 |

+

def __init__(self, input_shape):

|

| 92 |

+

super(Discriminator, self).__init__()

|

| 93 |

+

|

| 94 |

+

channels, height, width = input_shape #1 51 50

|

| 95 |

+

|

| 96 |

+

# Calculate output of image discriminator (PatchGAN)

|

| 97 |

+

patch_h, patch_w = int(height / 2 ** 3)+1, int(width / 2 ** 3)+1

|

| 98 |

+

self.output_shape = (1, patch_h, patch_w)

|

| 99 |

+

|

| 100 |

+

def discriminator_block(in_filters, out_filters, stride, normalize):

|

| 101 |

+

"""Returns layers of each discriminator block"""

|

| 102 |

+

layers = [nn.Conv2d(in_filters, out_filters, 3, stride, 1)]

|

| 103 |

+

if normalize:

|

| 104 |

+

layers.append(nn.InstanceNorm2d(out_filters))

|

| 105 |

+

layers.append(nn.LeakyReLU(0.2, inplace=True))

|

| 106 |

+

return layers

|

| 107 |

+

|

| 108 |

+

layers = []

|

| 109 |

+

in_filters = channels

|

| 110 |

+

for out_filters, stride, normalize in [(64, 2, False), (128, 2, True), (256, 2, True), (512, 1, True)]:

|

| 111 |

+

layers.extend(discriminator_block(in_filters, out_filters, stride, normalize))

|

| 112 |

+

in_filters = out_filters

|

| 113 |

+

|

| 114 |

+

layers.append(nn.Conv2d(out_filters, 1, 3, 1, 1))

|

| 115 |

+

|

| 116 |

+

self.model = nn.Sequential(*layers)

|

| 117 |

+

|

| 118 |

+

def forward(self, img):

|

| 119 |

+

return self.model(img)

|

| 120 |

+

|

| 121 |

+

def weights_init_normal(m):

|

| 122 |

+

classname = m.__class__.__name__

|

| 123 |

+

if classname.find("Conv") != -1:

|

| 124 |

+

torch.nn.init.normal_(m.weight.data, 0.0, 0.02)

|

| 125 |

+

elif classname.find("BatchNorm2d") != -1:

|

| 126 |

+

torch.nn.init.normal_(m.weight.data, 1.0, 0.02)

|

| 127 |

+

torch.nn.init.constant_(m.bias.data, 0.0)

|

| 128 |

+

|

| 129 |

+

if __name__ == "__main__":

|

| 130 |

+

input_shape = (1,51, 100)

|

| 131 |

+

gnet = Generator(input_shape)

|

| 132 |

+

dnet = Discriminator(input_shape)

|

| 133 |

+

print(dnet.output_shape)

|

| 134 |

+

imgs = torch.rand((64,1,51,100))

|

| 135 |

+

gen = gnet(imgs)

|

| 136 |

+

print(gen.shape)

|

| 137 |

+

dis = dnet(gen)

|

| 138 |

+

print(dis.shape)

|

| 139 |

+

|

src/audeo/Roll2MidiNet_enhance.py

ADDED

|

@@ -0,0 +1,164 @@

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

| 1 |

+

import torch.nn as nn

|

| 2 |

+

import torch.nn.functional as F

|

| 3 |

+

import torch

|

| 4 |

+

##############################

|

| 5 |

+

# U-NET

|

| 6 |

+

##############################

|

| 7 |

+

class UNetDown(nn.Module):

|

| 8 |

+

def __init__(self, in_size, out_size, normalize=True, dropout=0.0):

|

| 9 |

+

super(UNetDown, self).__init__()

|

| 10 |

+

model = [nn.Conv2d(in_size, out_size, 3, stride=1, padding=1, bias=False)]

|

| 11 |

+

if normalize:

|

| 12 |

+

model.append(nn.BatchNorm2d(out_size, 0.8))

|

| 13 |

+

model.append(nn.LeakyReLU(0.2))

|

| 14 |

+

if dropout:

|

| 15 |

+

model.append(nn.Dropout(dropout))

|

| 16 |

+

|

| 17 |

+

self.model = nn.Sequential(*model)

|

| 18 |

+

|

| 19 |

+

def forward(self, x):

|

| 20 |

+

return self.model(x)

|

| 21 |

+

|

| 22 |

+

|

| 23 |

+

class UNetUp(nn.Module):

|

| 24 |

+

def __init__(self, in_size, out_size, dropout=0.0):

|

| 25 |

+

super(UNetUp, self).__init__()

|

| 26 |

+

model = [

|

| 27 |

+

nn.ConvTranspose2d(in_size, out_size, 3, stride=1, padding=1, bias=False),

|

| 28 |

+

nn.BatchNorm2d(out_size, 0.8),

|

| 29 |

+

nn.ReLU(inplace=True),

|

| 30 |

+

]

|

| 31 |

+

if dropout:

|

| 32 |

+

model.append(nn.Dropout(dropout))

|

| 33 |

+

|

| 34 |

+

self.model = nn.Sequential(*model)

|

| 35 |

+

|

| 36 |

+

def forward(self, x, skip_input):

|

| 37 |

+

x = self.model(x)

|

| 38 |

+

out = torch.cat((x, skip_input), 1)

|

| 39 |

+

return out

|

| 40 |

+

|

| 41 |

+

class AttentionGate(nn.Module):

|

| 42 |

+

def __init__(self, in_channels, g_channels, out_channels):

|

| 43 |

+

super(AttentionGate, self).__init__()

|

| 44 |

+

self.theta_x = nn.Conv2d(in_channels, out_channels, kernel_size=1)

|

| 45 |

+

self.phi_g = nn.Conv2d(g_channels, out_channels, kernel_size=1)

|

| 46 |

+

self.psi = nn.Conv2d(out_channels, 1, kernel_size=1)

|

| 47 |

+

self.sigmoid = nn.Sigmoid()

|

| 48 |

+

|

| 49 |

+

def forward(self, x, g):

|

| 50 |

+

theta_x = self.theta_x(x)

|

| 51 |

+

phi_g = self.phi_g(g)

|

| 52 |

+

f = theta_x + phi_g

|

| 53 |

+

f = self.psi(f)

|

| 54 |

+

alpha = self.sigmoid(f)

|

| 55 |

+

return x * alpha

|

| 56 |

+

|

| 57 |

+

class Generator(nn.Module):

|

| 58 |

+

def __init__(self, input_shape):

|

| 59 |

+

super(Generator, self).__init__()

|

| 60 |

+

channels, _ , _ = input_shape

|

| 61 |

+

self.down1 = UNetDown(channels, 64, normalize=False)

|

| 62 |

+

self.down2 = UNetDown(64, 128)

|

| 63 |

+

self.down3 = UNetDown(128, 256, dropout=0.5)

|

| 64 |

+

self.down4 = UNetDown(256, 512, dropout=0.5)

|

| 65 |

+

self.down5 = UNetDown(512, 1024, dropout=0.5)

|

| 66 |

+

self.down6 = UNetDown(1024, 1024, dropout=0.5)

|

| 67 |

+

|

| 68 |

+

# Attention Gates

|

| 69 |

+

self.att1 = AttentionGate(2048, 1024, 512)

|

| 70 |

+

self.att2 = AttentionGate(1024, 512, 256)

|

| 71 |

+

self.att3 = AttentionGate(512, 256, 128)

|

| 72 |

+

self.att4 = AttentionGate(256, 128, 64)

|

| 73 |

+

|

| 74 |

+

self.up1 = UNetUp(1024, 1024, dropout=0.5)

|

| 75 |

+

self.up2 = UNetUp(2048, 512, dropout=0.5)

|

| 76 |

+

self.up3 = UNetUp(1024, 256, dropout=0.5)

|

| 77 |

+

self.up4 = UNetUp(512, 128)

|

| 78 |

+

self.up5 = UNetUp(256, 64)

|

| 79 |

+

self.conv1d = nn.Conv2d(128, 1, kernel_size=1)

|

| 80 |

+

|

| 81 |

+

def forward(self, x):

|

| 82 |

+

# U-Net generator with skip connections from encoder to decoder

|

| 83 |

+

d1 = self.down1(x)

|

| 84 |

+

|

| 85 |

+

d2 = self.down2(d1)

|

| 86 |

+

|

| 87 |

+

d3 = self.down3(d2)

|

| 88 |

+

|

| 89 |

+

d4 = self.down4(d3)

|

| 90 |

+

|

| 91 |

+

d5 = self.down5(d4)

|

| 92 |

+

|

| 93 |

+

d6 = self.down6(d5)

|

| 94 |

+

|

| 95 |

+

u1 = self.up1(d6, d5)

|

| 96 |

+

u1 = self.att1(u1, d5)

|

| 97 |

+

|

| 98 |

+

u2 = self.up2(u1, d4)

|

| 99 |

+

u2 = self.att2(u2, d4)

|

| 100 |

+

|

| 101 |

+

u3 = self.up3(u2, d3)

|

| 102 |

+

u3 = self.att3(u3, d3)

|

| 103 |

+

|

| 104 |

+

u4 = self.up4(u3, d2)

|

| 105 |

+

u4 = self.att4(u4, d2)

|

| 106 |

+

|

| 107 |

+

u5 = self.up5(u4, d1)

|

| 108 |

+

|

| 109 |

+

out = self.conv1d(u5)

|

| 110 |

+

|

| 111 |

+

out = F.sigmoid(out)

|

| 112 |

+

return out

|

| 113 |

+

|

| 114 |

+

|

| 115 |

+

class Discriminator(nn.Module):

|

| 116 |

+

def __init__(self, input_shape):

|

| 117 |

+

super(Discriminator, self).__init__()

|

| 118 |

+

|

| 119 |

+

channels, height, width = input_shape #1 51 50

|

| 120 |

+

|

| 121 |

+

# Calculate output of image discriminator (PatchGAN)

|

| 122 |

+

patch_h, patch_w = int(height / 2 ** 3)+1, int(width / 2 ** 3)+1

|