Qwen-7B-Chat

🤗 Hugging Face | 🤖 ModelScope | 📑 Paper | 🖥️ Demo

WeChat (微信) | Discord | API

介绍(Introduction)

通义千问-7B(Qwen-7B)是阿里云研发的通义千问大模型系列的70亿参数规模的模型。Qwen-7B是基于Transformer的大语言模型, 在超大规模的预训练数据上进行训练得到。预训练数据类型多样,覆盖广泛,包括大量网络文本、专业书籍、代码等。同时,在Qwen-7B的基础上,我们使用对齐机制打造了基于大语言模型的AI助手Qwen-7B-Chat。相较于最初开源的Qwen-7B模型,我们现已将预训练模型和Chat模型更新到效果更优的版本。本仓库为Qwen-7B-Chat的仓库。

如果您想了解更多关于通义千问-7B开源模型的细节,我们建议您参阅GitHub代码库。

Qwen-7B is the 7B-parameter version of the large language model series, Qwen (abbr. Tongyi Qianwen), proposed by Alibaba Cloud. Qwen-7B is a Transformer-based large language model, which is pretrained on a large volume of data, including web texts, books, codes, etc. Additionally, based on the pretrained Qwen-7B, we release Qwen-7B-Chat, a large-model-based AI assistant, which is trained with alignment techniques. Now we have updated both our pretrained and chat models with better performances. This repository is the one for Qwen-7B-Chat.

For more details about Qwen, please refer to the GitHub code repository.

要求(Requirements)

- python 3.8及以上版本

- pytorch 1.12及以上版本,推荐2.0及以上版本

- 建议使用CUDA 11.4及以上(GPU用户、flash-attention用户等需考虑此选项)

- python 3.8 and above

- pytorch 1.12 and above, 2.0 and above are recommended

- CUDA 11.4 and above are recommended (this is for GPU users, flash-attention users, etc.)

依赖项(Dependency)

运行Qwen-7B-Chat,请确保满足上述要求,再执行以下pip命令安装依赖库

To run Qwen-7B-Chat, please make sure you meet the above requirements, and then execute the following pip commands to install the dependent libraries.

pip install transformers==4.32.0 accelerate tiktoken einops scipy transformers_stream_generator==0.0.4 peft deepspeed

另外,推荐安装flash-attention库(当前已支持flash attention 2),以实现更高的效率和更低的显存占用。

In addition, it is recommended to install the flash-attention library (we support flash attention 2 now.) for higher efficiency and lower memory usage.

git clone https://github.com/Dao-AILab/flash-attention

cd flash-attention && pip install .

# 下方安装可选,安装可能比较缓慢。

# pip install csrc/layer_norm

# pip install csrc/rotary

快速使用(Quickstart)

下面我们展示了一个使用Qwen-7B-Chat模型,进行多轮对话交互的样例:

We show an example of multi-turn interaction with Qwen-7B-Chat in the following code:

from transformers import AutoModelForCausalLM, AutoTokenizer

from transformers.generation import GenerationConfig

# Note: The default behavior now has injection attack prevention off.

tokenizer = AutoTokenizer.from_pretrained("Qwen/Qwen-7B-Chat", trust_remote_code=True)

# use bf16

# model = AutoModelForCausalLM.from_pretrained("Qwen/Qwen-7B-Chat", device_map="auto", trust_remote_code=True, bf16=True).eval()

# use fp16

# model = AutoModelForCausalLM.from_pretrained("Qwen/Qwen-7B-Chat", device_map="auto", trust_remote_code=True, fp16=True).eval()

# use cpu only

# model = AutoModelForCausalLM.from_pretrained("Qwen/Qwen-7B-Chat", device_map="cpu", trust_remote_code=True).eval()

# use auto mode, automatically select precision based on the device.

model = AutoModelForCausalLM.from_pretrained("Qwen/Qwen-7B-Chat", device_map="auto", trust_remote_code=True).eval()

# Specify hyperparameters for generation. But if you use transformers>=4.32.0, there is no need to do this.

# model.generation_config = GenerationConfig.from_pretrained("Qwen/Qwen-7B-Chat", trust_remote_code=True) # 可指定不同的生成长度、top_p等相关超参

# 第一轮对话 1st dialogue turn

response, history = model.chat(tokenizer, "你好", history=None)

print(response)

# 你好!很高兴为你提供帮助。

# 第二轮对话 2nd dialogue turn

response, history = model.chat(tokenizer, "给我讲一个年轻人奋斗创业最终取得成功的故事。", history=history)

print(response)

# 这是一个关于一个年轻人奋斗创业最终取得成功的故事。

# 故事的主人公叫李明,他来自一个普通的家庭,父母都是普通的工人。从小,李明就立下了一个目标:要成为一名成功的企业家。

# 为了实现这个目标,李明勤奋学习,考上了大学。在大学期间,他积极参加各种创业比赛,获得了不少奖项。他还利用课余时间去实习,积累了宝贵的经验。

# 毕业后,李明决定开始自己的创业之路。他开始寻找投资机会,但多次都被拒绝了。然而,他并没有放弃。他继续努力,不断改进自己的创业计划,并寻找新的投资机会。

# 最终,李明成功地获得了一笔投资,开始了自己的创业之路。他成立了一家科技公司,专注于开发新型软件。在他的领导下,公司迅速发展起来,成为了一家成功的科技企业。

# 李明的成功并不是偶然的。他勤奋、坚韧、勇于冒险,不断学习和改进自己。他的成功也证明了,只要努力奋斗,任何人都有可能取得成功。

# 第三轮对话 3rd dialogue turn

response, history = model.chat(tokenizer, "给这个故事起一个标题", history=history)

print(response)

# 《奋斗创业:一个年轻人的成功之路》

关于更多的使用说明,请参考我们的GitHub repo获取更多信息。

For more information, please refer to our GitHub repo for more information.

Tokenizer

注:作为术语的“tokenization”在中文中尚无共识的概念对应,本文档采用英文表达以利说明。

基于tiktoken的分词器有别于其他分词器,比如sentencepiece分词器。尤其在微调阶段,需要特别注意特殊token的使用。关于tokenizer的更多信息,以及微调时涉及的相关使用,请参阅文档。

Our tokenizer based on tiktoken is different from other tokenizers, e.g., sentencepiece tokenizer. You need to pay attention to special tokens, especially in finetuning. For more detailed information on the tokenizer and related use in fine-tuning, please refer to the documentation.

量化 (Quantization)

用法 (Usage)

请注意:我们更新量化方案为基于AutoGPTQ的量化,提供Qwen-7B-Chat的Int4量化模型点击这里。相比此前方案,该方案在模型评测效果几乎无损,且存储需求更低,推理速度更优。

Note: we provide a new solution based on AutoGPTQ, and release an Int4 quantized model for Qwen-7B-Chat Click here, which achieves nearly lossless model effects but improved performance on both memory costs and inference speed, in comparison with the previous solution.

以下我们提供示例说明如何使用Int4量化模型。在开始使用前,请先保证满足要求(如torch 2.0及以上,transformers版本为4.32.0及以上,等等),并安装所需安装包:

Here we demonstrate how to use our provided quantized models for inference. Before you start, make sure you meet the requirements of auto-gptq (e.g., torch 2.0 and above, transformers 4.32.0 and above, etc.) and install the required packages:

pip install auto-gptq optimum

如安装auto-gptq遇到问题,我们建议您到官方repo搜索合适的预编译wheel。

随后即可使用和上述一致的用法调用量化模型:

If you meet problems installing auto-gptq, we advise you to check out the official repo to find a pre-build wheel.

Then you can load the quantized model easily and run inference as same as usual:

model = AutoModelForCausalLM.from_pretrained(

"Qwen/Qwen-7B-Chat-Int4",

device_map="auto",

trust_remote_code=True

).eval()

response, history = model.chat(tokenizer, "你好", history=None)

效果评测

我们对BF16,Int8和Int4模型在基准评测上做了测试(使用zero-shot设置),发现量化模型效果损失较小,结果如下所示:

We illustrate the zero-shot performance of both BF16, Int8 and Int4 models on the benchmark, and we find that the quantized model does not suffer from significant performance degradation. Results are shown below:

| Quantization | MMLU | CEval (val) | GSM8K | Humaneval |

|---|---|---|---|---|

| BF16 | 55.8 | 59.7 | 50.3 | 37.2 |

| Int8 | 55.4 | 59.4 | 48.3 | 34.8 |

| Int4 | 55.1 | 59.2 | 49.7 | 29.9 |

推理速度 (Inference Speed)

我们测算了不同精度模型以及不同FlashAttn库版本下模型生成2048和8192个token的平均推理速度。如图所示:

We measured the average inference speed of generating 2048 and 8192 tokens with different quantization levels and versions of flash-attention, respectively.

| Quantization | FlashAttn | Speed (2048 tokens) | Speed (8192 tokens) |

|---|---|---|---|

| BF16 | v2 | 40.93 | 36.14 |

| Int8 | v2 | 37.47 | 32.54 |

| Int4 | v2 | 50.09 | 38.61 |

| BF16 | v1 | 40.75 | 35.34 |

| Int8 | v1 | 37.51 | 32.39 |

| Int4 | v1 | 45.98 | 36.47 |

| BF16 | Disabled | 37.55 | 33.56 |

| Int8 | Disabled | 37.84 | 32.65 |

| Int4 | Disabled | 48.12 | 36.70 |

具体而言,我们记录在长度为1的上下文的条件下生成8192个token的性能。评测运行于单张A100-SXM4-80G GPU,使用PyTorch 2.0.1和CUDA 11.8。推理速度是生成8192个token的速度均值。

In detail, the setting of profiling is generating 8192 new tokens with 1 context token. The profiling runs on a single A100-SXM4-80G GPU with PyTorch 2.0.1 and CUDA 11.8. The inference speed is averaged over the generated 8192 tokens.

注意:以上Int4/Int8模型生成速度使用autogptq库给出,当前AutoModelForCausalLM.from_pretrained载入的模型生成速度会慢大约20%。我们已经将该问题汇报给HuggingFace团队,若有解决方案将即时更新。

Note: The generation speed of the Int4/Int8 models mentioned above is provided by the autogptq library. The current speed of the model loaded using "AutoModelForCausalLM.from_pretrained" will be approximately 20% slower. We have reported this issue to the HuggingFace team and will update it promptly if a solution is available.

显存使用 (GPU Memory Usage)

我们还测算了不同模型精度编码2048个token及生成8192个token的峰值显存占用情况。(显存消耗在是否使用FlashAttn的情况下均类似。)结果如下所示:

We also profile the peak GPU memory usage for encoding 2048 tokens as context (and generating single token) and generating 8192 tokens (with single token as context) under different quantization levels, respectively. (The GPU memory usage is similar when using flash-attention or not.)The results are shown below.

| Quantization Level | Peak Usage for Encoding 2048 Tokens | Peak Usage for Generating 8192 Tokens |

|---|---|---|

| BF16 | 16.99GB | 22.53GB |

| Int8 | 11.20GB | 16.62GB |

| Int4 | 8.21GB | 13.63GB |

上述性能测算使用此脚本完成。

The above speed and memory profiling are conducted using this script.

模型细节(Model)

与Qwen-7B预训练模型相同,Qwen-7B-Chat模型规模基本情况如下所示:

The details of the model architecture of Qwen-7B-Chat are listed as follows:

| Hyperparameter | Value |

|---|---|

| n_layers | 32 |

| n_heads | 32 |

| d_model | 4096 |

| vocab size | 151851 |

| sequence length | 8192 |

在位置编码、FFN激活函数和normalization的实现方式上,我们也采用了目前最流行的做法, 即RoPE相对位置编码、SwiGLU激活函数、RMSNorm(可选安装flash-attention加速)。

在分词器方面,相比目前主流开源模型以中英词表为主,Qwen-7B-Chat使用了约15万token大小的词表。

该词表在GPT-4使用的BPE词表cl100k_base基础上,对中文、多语言进行了优化,在对中、英、代码数据的高效编解码的基础上,对部分多语言更加友好,方便用户在不扩展词表的情况下对部分语种进行能力增强。

词表对数字按单个数字位切分。调用较为高效的tiktoken分词库进行分词。

For position encoding, FFN activation function, and normalization calculation methods, we adopt the prevalent practices, i.e., RoPE relative position encoding, SwiGLU for activation function, and RMSNorm for normalization (optional installation of flash-attention for acceleration).

For tokenization, compared to the current mainstream open-source models based on Chinese and English vocabularies, Qwen-7B-Chat uses a vocabulary of over 150K tokens.

It first considers efficient encoding of Chinese, English, and code data, and is also more friendly to multilingual languages, enabling users to directly enhance the capability of some languages without expanding the vocabulary.

It segments numbers by single digit, and calls the tiktoken tokenizer library for efficient tokenization.

评测效果(Evaluation)

对于Qwen-7B-Chat模型,我们同样评测了常规的中文理解(C-Eval)、英文理解(MMLU)、代码(HumanEval)和数学(GSM8K)等权威任务,同时包含了长序列任务的评测结果。由于Qwen-7B-Chat模型经过对齐后,激发了较强的外部系统调用能力,我们还进行了工具使用能力方面的评测。

提示:由于硬件和框架造成的舍入误差,复现结果如有波动属于正常现象。

For Qwen-7B-Chat, we also evaluate the model on C-Eval, MMLU, HumanEval, GSM8K, etc., as well as the benchmark evaluation for long-context understanding, and tool usage.

Note: Due to rounding errors caused by hardware and framework, differences in reproduced results are possible.

中文评测(Chinese Evaluation)

C-Eval

在C-Eval验证集上,我们评价了Qwen-7B-Chat模型的0-shot & 5-shot准确率

We demonstrate the 0-shot & 5-shot accuracy of Qwen-7B-Chat on C-Eval validation set

| Model | Avg. Acc. |

|---|---|

| LLaMA2-7B-Chat | 31.9 |

| LLaMA2-13B-Chat | 36.2 |

| LLaMA2-70B-Chat | 44.3 |

| ChatGLM2-6B-Chat | 52.6 |

| InternLM-7B-Chat | 53.6 |

| Baichuan2-7B-Chat | 55.6 |

| Baichuan2-13B-Chat | 56.7 |

| Qwen-7B-Chat (original) (0-shot) | 54.2 |

| Qwen-7B-Chat (0-shot) | 59.7 |

| Qwen-7B-Chat (5-shot) | 59.3 |

| Qwen-14B-Chat (0-shot) | 69.8 |

| Qwen-14B-Chat (5-shot) | 71.7 |

C-Eval测试集上,Qwen-7B-Chat模型的zero-shot准确率结果如下:

The zero-shot accuracy of Qwen-7B-Chat on C-Eval testing set is provided below:

| Model | Avg. | STEM | Social Sciences | Humanities | Others |

|---|---|---|---|---|---|

| Chinese-Alpaca-Plus-13B | 41.5 | 36.6 | 49.7 | 43.1 | 41.2 |

| Chinese-Alpaca-2-7B | 40.3 | - | - | - | - |

| ChatGLM2-6B-Chat | 50.1 | 46.4 | 60.4 | 50.6 | 46.9 |

| Baichuan-13B-Chat | 51.5 | 43.7 | 64.6 | 56.2 | 49.2 |

| Qwen-7B-Chat (original) | 54.6 | 47.8 | 67.6 | 59.3 | 50.6 |

| Qwen-7B-Chat | 58.6 | 53.3 | 72.1 | 62.8 | 52.0 |

| Qwen-14B-Chat | 69.1 | 65.1 | 80.9 | 71.2 | 63.4 |

在7B规模模型上,经过人类指令对齐的Qwen-7B-Chat模型,准确率在同类相近规模模型中仍然处于前列。

Compared with other pretrained models with comparable model size, the human-aligned Qwen-7B-Chat performs well in C-Eval accuracy.

英文评测(English Evaluation)

MMLU

MMLU评测集上,Qwen-7B-Chat模型的 0-shot & 5-shot 准确率如下,效果同样在同类对齐模型中同样表现较优。

The 0-shot & 5-shot accuracy of Qwen-7B-Chat on MMLU is provided below. The performance of Qwen-7B-Chat still on the top between other human-aligned models with comparable size.

| Model | Avg. Acc. |

|---|---|

| ChatGLM2-6B-Chat | 46.0 |

| LLaMA2-7B-Chat | 46.2 |

| InternLM-7B-Chat | 51.1 |

| Baichuan2-7B-Chat | 52.9 |

| LLaMA2-13B-Chat | 54.6 |

| Baichuan2-13B-Chat | 57.3 |

| LLaMA2-70B-Chat | 63.8 |

| Qwen-7B-Chat (original) (0-shot) | 53.9 |

| Qwen-7B-Chat (0-shot) | 55.8 |

| Qwen-7B-Chat (5-shot) | 57.0 |

| Qwen-14B-Chat (0-shot) | 64.6 |

| Qwen-14B-Chat (5-shot) | 66.5 |

代码评测(Coding Evaluation)

Qwen-7B-Chat在HumanEval的zero-shot Pass@1效果如下

The zero-shot Pass@1 of Qwen-7B-Chat on HumanEval is demonstrated below

| Model | Pass@1 |

|---|---|

| ChatGLM2-6B-Chat | 11.0 |

| LLaMA2-7B-Chat | 12.2 |

| Baichuan2-7B-Chat | 13.4 |

| InternLM-7B-Chat | 14.6 |

| Baichuan2-13B-Chat | 17.7 |

| LLaMA2-13B-Chat | 18.9 |

| LLaMA2-70B-Chat | 32.3 |

| Qwen-7B-Chat (original) | 24.4 |

| Qwen-7B-Chat | 37.2 |

| Qwen-14B-Chat | 43.9 |

数学评测(Mathematics Evaluation)

在评测数学能力的GSM8K上,Qwen-7B-Chat的准确率结果如下

The accuracy of Qwen-7B-Chat on GSM8K is shown below

| Model | Acc. |

|---|---|

| LLaMA2-7B-Chat | 26.3 |

| ChatGLM2-6B-Chat | 28.8 |

| Baichuan2-7B-Chat | 32.8 |

| InternLM-7B-Chat | 33.0 |

| LLaMA2-13B-Chat | 37.1 |

| Baichuan2-13B-Chat | 55.3 |

| LLaMA2-70B-Chat | 59.3 |

| Qwen-7B-Chat (original) (0-shot) | 41.1 |

| Qwen-7B-Chat (0-shot) | 50.3 |

| Qwen-7B-Chat (8-shot) | 54.1 |

| Qwen-14B-Chat (0-shot) | 60.1 |

| Qwen-14B-Chat (8-shot) | 59.3 |

长序列评测(Long-Context Understanding)

通过NTK插值,LogN注意力缩放可以扩展Qwen-7B-Chat的上下文长度。在长文本摘要数据集VCSUM上(文本平均长度在15K左右),Qwen-7B-Chat的Rouge-L结果如下:

(若要启用这些技巧,请将config.json里的use_dynamic_ntk和use_logn_attn设置为true)

We introduce NTK-aware interpolation, LogN attention scaling to extend the context length of Qwen-7B-Chat. The Rouge-L results of Qwen-7B-Chat on long-text summarization dataset VCSUM (The average length of this dataset is around 15K) are shown below:

(To use these tricks, please set use_dynamic_ntk and use_long_attn to true in config.json.)

| Model | VCSUM (zh) |

|---|---|

| GPT-3.5-Turbo-16k | 16.0 |

| LLama2-7B-Chat | 0.2 |

| InternLM-7B-Chat | 13.0 |

| ChatGLM2-6B-Chat | 16.3 |

| Qwen-7B-Chat | 16.6 |

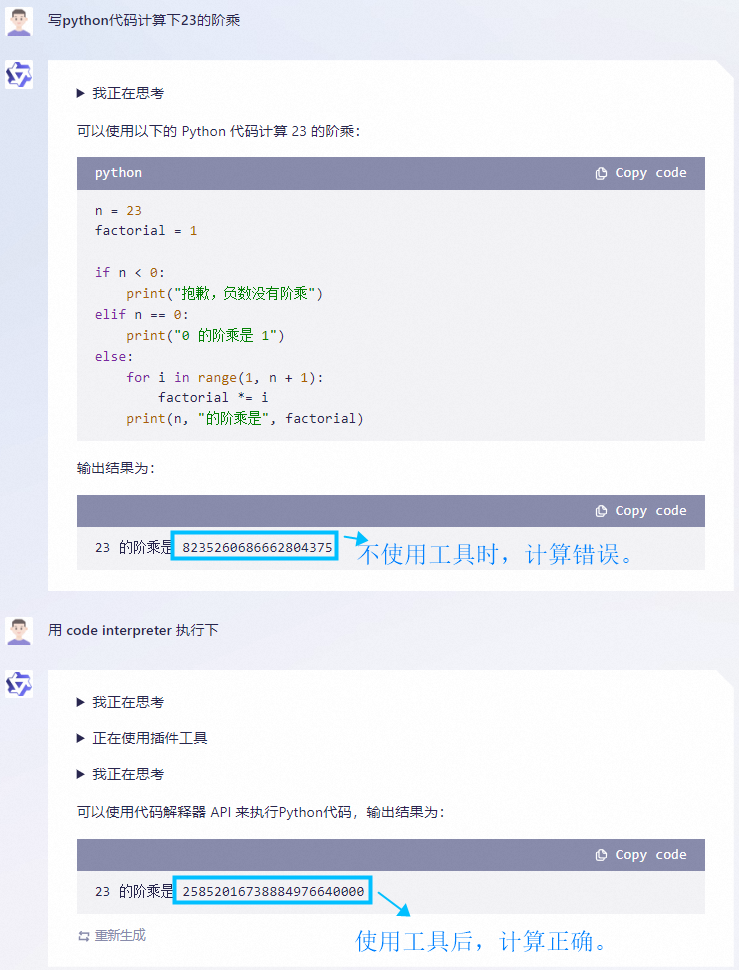

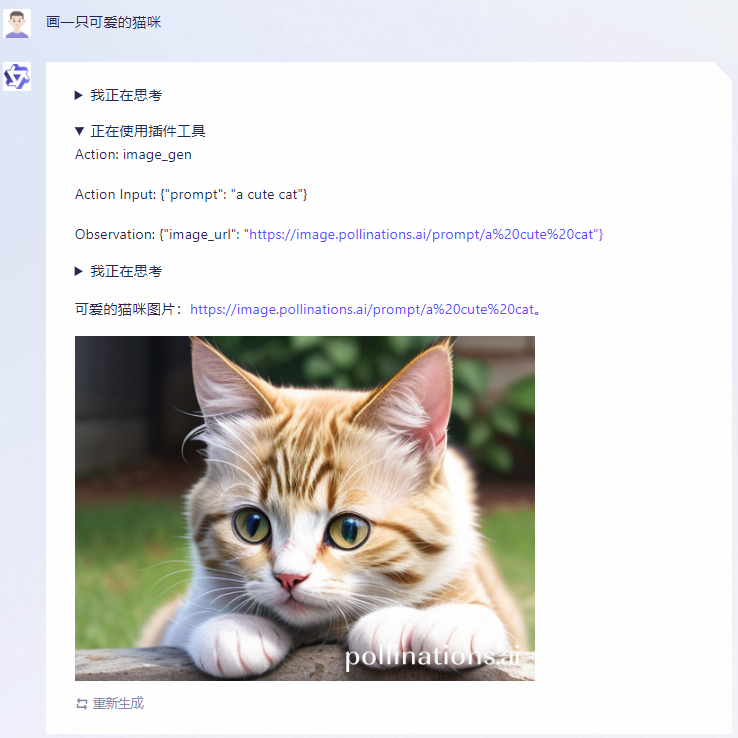

工具使用能力的评测(Tool Usage)

ReAct Prompting

千问支持通过 ReAct Prompting 调用插件/工具/API。ReAct 也是 LangChain 框架采用的主要方式之一。在我们开源的、用于评估工具使用能力的评测基准上,千问的表现如下:

Qwen-Chat supports calling plugins/tools/APIs through ReAct Prompting. ReAct is also one of the main approaches used by the LangChain framework. In our evaluation benchmark for assessing tool usage capabilities, Qwen-Chat's performance is as follows:

| Chinese Tool-Use Benchmark | |||

|---|---|---|---|

| Model | Tool Selection (Acc.↑) | Tool Input (Rouge-L↑) | False Positive Error↓ |

| GPT-4 | 95% | 0.90 | 15.0% |

| GPT-3.5 | 85% | 0.88 | 75.0% |

| Qwen-7B-Chat | 98% | 0.91 | 7.3% |

| Qwen-14B-Chat | 98% | 0.93 | 2.4% |

评测基准中出现的插件均没有出现在千问的训练集中。该基准评估了模型在多个候选插件中选择正确插件的准确率、传入插件的参数的合理性、以及假阳率。假阳率(False Positive)定义:在处理不该调用插件的请求时,错误地调用了插件。

The plugins that appear in the evaluation set do not appear in the training set of Qwen. This benchmark evaluates the accuracy of the model in selecting the correct plugin from multiple candidate plugins, the rationality of the parameters passed into the plugin, and the false positive rate. False Positive: Incorrectly invoking a plugin when it should not have been called when responding to a query.

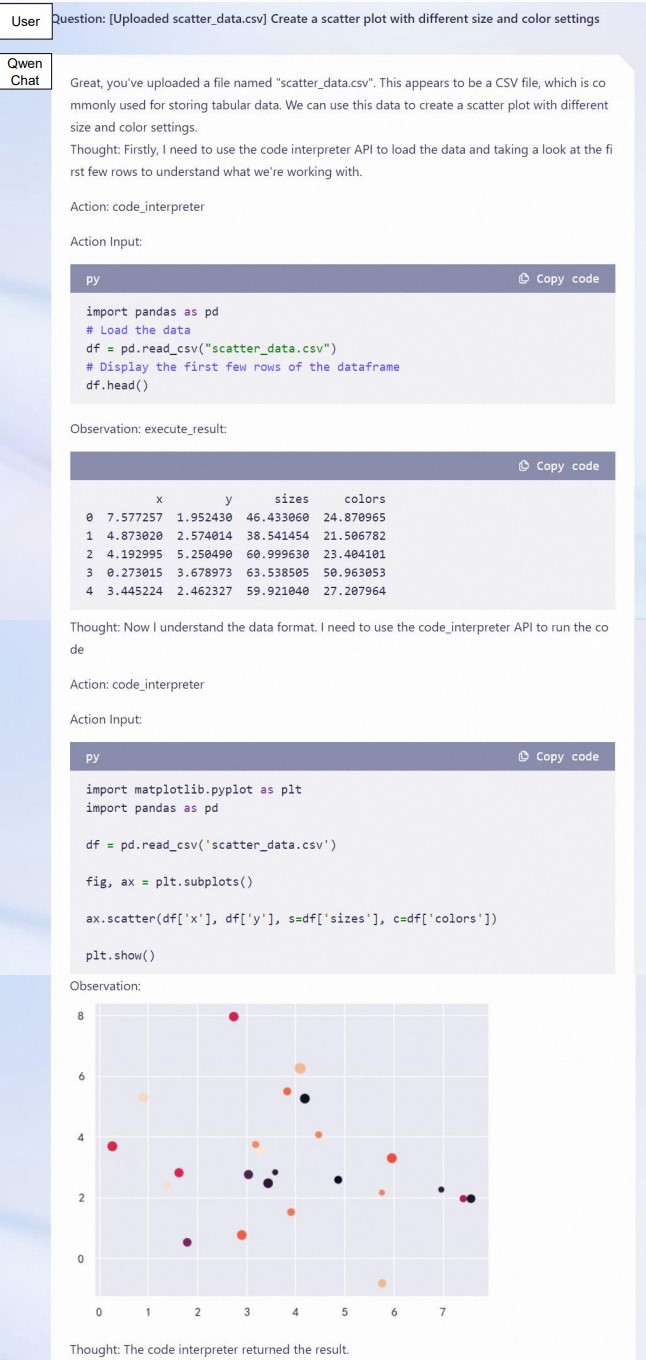

Code Interpreter

为了考察Qwen使用Python Code Interpreter完成数学解题、数据可视化、及文件处理与爬虫等任务的能力,我们专门建设并开源了一个评测这方面能力的评测基准。

我们发现Qwen在生成代码的可执行率、结果正确性上均表现较好:

To assess Qwen's ability to use the Python Code Interpreter for tasks such as mathematical problem solving, data visualization, and other general-purpose tasks such as file handling and web scraping, we have created and open-sourced a benchmark specifically designed for evaluating these capabilities. You can find the benchmark at this link.

We have observed that Qwen performs well in terms of code executability and result accuracy when generating code:

| Executable Rate of Generated Code (%) | |||

|---|---|---|---|

| Model | Math↑ | Visualization↑ | General↑ |

| GPT-4 | 91.9 | 85.9 | 82.8 |

| GPT-3.5 | 89.2 | 65.0 | 74.1 |

| LLaMA2-7B-Chat | 41.9 | 33.1 | 24.1 |

| LLaMA2-13B-Chat | 50.0 | 40.5 | 48.3 |

| CodeLLaMA-7B-Instruct | 85.1 | 54.0 | 70.7 |

| CodeLLaMA-13B-Instruct | 93.2 | 55.8 | 74.1 |

| InternLM-7B-Chat-v1.1 | 78.4 | 44.2 | 62.1 |

| InternLM-20B-Chat | 70.3 | 44.2 | 65.5 |

| Qwen-7B-Chat | 82.4 | 64.4 | 67.2 |

| Qwen-14B-Chat | 89.2 | 84.1 | 65.5 |

| Accuracy of Code Execution Results (%) | |||

|---|---|---|---|

| Model | Math↑ | Visualization-Hard↑ | Visualization-Easy↑ |

| GPT-4 | 82.8 | 66.7 | 60.8 |

| GPT-3.5 | 47.3 | 33.3 | 55.7 |

| LLaMA2-7B-Chat | 3.9 | 14.3 | 39.2 |

| LLaMA2-13B-Chat | 8.3 | 8.3 | 40.5 |

| CodeLLaMA-7B-Instruct | 14.3 | 26.2 | 60.8 |

| CodeLLaMA-13B-Instruct | 28.2 | 27.4 | 62.0 |

| InternLM-7B-Chat-v1.1 | 28.5 | 4.8 | 40.5 |

| InternLM-20B-Chat | 34.6 | 21.4 | 45.6 |

| Qwen-7B-Chat | 41.9 | 40.5 | 54.4 |

| Qwen-14B-Chat | 58.4 | 53.6 | 59.5 |

Huggingface Agent

千问还具备作为 HuggingFace Agent 的能力。它在 Huggingface 提供的run模式评测基准上的表现如下:

Qwen-Chat also has the capability to be used as a HuggingFace Agent. Its performance on the run-mode benchmark provided by HuggingFace is as follows:

| HuggingFace Agent Benchmark- Run Mode | |||

|---|---|---|---|

| Model | Tool Selection↑ | Tool Used↑ | Code↑ |

| GPT-4 | 100 | 100 | 97.4 |

| GPT-3.5 | 95.4 | 96.3 | 87.0 |

| StarCoder-Base-15B | 86.1 | 87.0 | 68.9 |

| StarCoder-15B | 87.0 | 88.0 | 68.9 |

| Qwen-7B-Chat | 87.0 | 87.0 | 71.5 |

| Qwen-14B-Chat | 93.5 | 94.4 | 87.0 |

| HuggingFace Agent Benchmark - Chat Mode | |||

|---|---|---|---|

| Model | Tool Selection↑ | Tool Used↑ | Code↑ |

| GPT-4 | 97.9 | 97.9 | 98.5 |

| GPT-3.5 | 97.3 | 96.8 | 89.6 |

| StarCoder-Base-15B | 97.9 | 97.9 | 91.1 |

| StarCoder-15B | 97.9 | 97.9 | 89.6 |

| Qwen-7B-Chat | 94.7 | 94.7 | 85.1 |

| Qwen-14B-Chat | 97.9 | 97.9 | 95.5 |

x86 平台 (x86 Platforms)

在 酷睿™/至强® 可扩展处理器或 Arc™ GPU 上部署量化模型时,建议使用 OpenVINO™ Toolkit以充分利用硬件,实现更好的推理性能。您可以安装并运行此 example notebook。相关问题,您可在OpenVINO repo中提交。

When deploy on Core™/Xeon® Scalable Processors or with Arc™ GPU, OpenVINO™ Toolkit is recommended. You can install and run this example notebook. For related issues, you are welcome to file an issue at OpenVINO repo.

FAQ

如遇到问题,敬请查阅FAQ以及issue区,如仍无法解决再提交issue。

If you meet problems, please refer to FAQ and the issues first to search a solution before you launch a new issue.

引用 (Citation)

如果你觉得我们的工作对你有帮助,欢迎引用!

If you find our work helpful, feel free to give us a cite.

@article{qwen,

title={Qwen Technical Report},

author={Jinze Bai and Shuai Bai and Yunfei Chu and Zeyu Cui and Kai Dang and Xiaodong Deng and Yang Fan and Wenbin Ge and Yu Han and Fei Huang and Binyuan Hui and Luo Ji and Mei Li and Junyang Lin and Runji Lin and Dayiheng Liu and Gao Liu and Chengqiang Lu and Keming Lu and Jianxin Ma and Rui Men and Xingzhang Ren and Xuancheng Ren and Chuanqi Tan and Sinan Tan and Jianhong Tu and Peng Wang and Shijie Wang and Wei Wang and Shengguang Wu and Benfeng Xu and Jin Xu and An Yang and Hao Yang and Jian Yang and Shusheng Yang and Yang Yao and Bowen Yu and Hongyi Yuan and Zheng Yuan and Jianwei Zhang and Xingxuan Zhang and Yichang Zhang and Zhenru Zhang and Chang Zhou and Jingren Zhou and Xiaohuan Zhou and Tianhang Zhu},

journal={arXiv preprint arXiv:2309.16609},

year={2023}

}

使用协议(License Agreement)

我们的代码和模型权重对学术研究完全开放,并支持商用。请查看LICENSE了解具体的开源协议细节。如需商用,请填写问卷申请。

Our code and checkpoints are open to research purpose, and they are allowed for commercial purposes. Check LICENSE for more details about the license. If you have requirements for commercial use, please fill out the form to apply.

联系我们(Contact Us)

如果你想给我们的研发团队和产品团队留言,欢迎加入我们的微信群、钉钉群以及Discord!同时,也欢迎通过邮件([email protected])联系我们。

If you are interested to leave a message to either our research team or product team, join our Discord or WeChat groups! Also, feel free to send an email to [email protected].

- Downloads last month

- 96,367