Model Card for UIGEN-T2-7B

Model Overview

We're excited to introduce UIGEN-T2, the next evolution in our UI generation model series. Fine-tuned from the Qwen2.5-Coder-7B-Instruct base model using PEFT/LoRA, UIGEN-T2 is designed to generate HTML and Tailwind CSS code for web interfaces. What sets UIGEN-T2 apart is its training on a 50,000-sample dataset (up from 400) and its UI-based reasoning capability, which lets it generate code informed by explicit design principles rather than code alone.

Model Highlights

- High-Quality UI Code Generation: Produces functional and semantic HTML combined with utility-first Tailwind CSS.

- Massive Training Dataset: Trained on 50,000 diverse UI examples, enabling broader component understanding and stylistic range.

- Innovative UI-Based Reasoning: Incorporates detailed reasoning traces generated by a specialized "teacher" model, ensuring outputs consider usability, layout, and aesthetics. (See example reasoning in description below)

- PEFT/LoRA Trained (Rank 128): Efficiently fine-tuned for UI generation. We've published LoRA checkpoints at each training step for transparency and community use!

- Improved Chat Interaction: Streamlined prompt flow – no more need for the awkward double `think` prompt! Interaction feels more natural.

Example Reasoning (Internal Guide for Generation)

Here's a glimpse into the kind of reasoning that guides UIGEN-T2 internally, generated by our specialized teacher model:

<|begin_of_thought|>

When approaching the challenge of crafting an elegant stopwatch UI, my first instinct is to dissect what truly makes such an interface delightful yet functional—hence, I consider both aesthetic appeal and usability grounded in established heuristics like Nielsen’s “aesthetic and minimalist design” alongside Gestalt principles... placing the large digital clock prominently aligns with Fitts’ Law... The glassmorphism effect here enhances visual separation... typography choices—the use of a monospace font family ("Fira Code" via Google Fonts) supports readability... iconography paired with labels inside buttons provides dual coding... Tailwind CSS v4 enables utility-driven consistency... critical reflection concerns responsiveness: flexbox layouts combined with relative sizing guarantee graceful adaptation...

<|end_of_thought|>

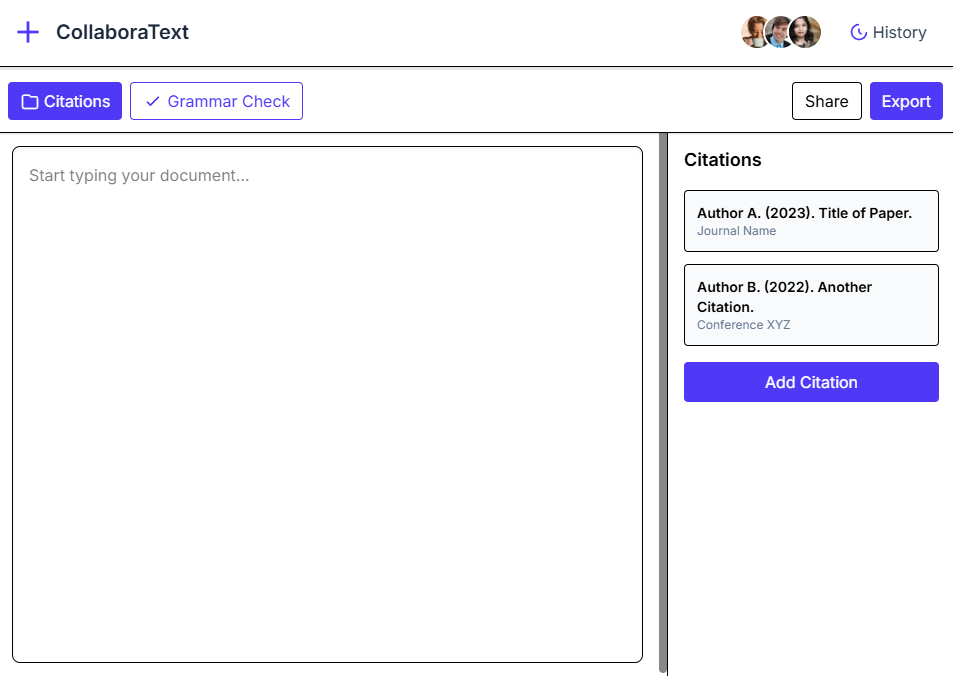

Example Outputs

Use Cases

Recommended Uses

- Rapid UI Prototyping: Quickly generate HTML/Tailwind code snippets from descriptions or wireframes.

- Component Generation: Create standard and custom UI components (buttons, cards, forms, layouts).

- Frontend Development Assistance: Accelerate development by generating baseline component structures.

- Design-to-Code Exploration: Bridge the gap between design concepts and initial code implementation.

Limitations

- Current Framework Focus: Primarily generates HTML and Tailwind CSS. (Bootstrap support is planned!).

- Complex JavaScript Logic: Focuses on structure and styling; dynamic behavior and complex state management typically require manual implementation.

- Highly Specific Design Systems: May need further fine-tuning for strict adherence to unique, complex corporate design systems.

How to Use

Use the following system prompt:

You are Tesslate, a helpful assistant specialized in UI generation.

Recommended sampling parameters: temperature 0.7, top_p 0.9.
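The system prompt has to sit ahead of the user turn in the conversation. Here is a minimal sketch of assembling such a prompt by hand, assuming the ChatML-style `<|im_start|>` / `<|im_end|>` markers used in the inference example. `build_prompt` and `GENERATION_KWARGS` are hypothetical names for illustration, not part of any library:

```python
SYSTEM_PROMPT = "You are Tesslate, a helpful assistant specialized in UI generation."

# Recommended sampling parameters from this card.
GENERATION_KWARGS = {"do_sample": True, "temperature": 0.7, "top_p": 0.9}


def build_prompt(user_request: str, system_prompt: str = SYSTEM_PROMPT) -> str:
    """Assemble a ChatML-style prompt (hypothetical helper for illustration)."""
    return (
        f"<|im_start|>system\n{system_prompt}<|im_end|>\n"
        f"<|im_start|>user\n{user_request}<|im_end|>\n"
        "<|im_start|>assistant\n"  # left open so the model writes the reply
    )


if __name__ == "__main__":
    print(build_prompt("Create a pricing table with three tiers."))
```

In practice, `tokenizer.apply_chat_template(messages, tokenize=False, add_generation_prompt=True)` from Transformers should yield an equivalent string from the base model's chat template, and is usually the safer choice.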

Inference Example

# Make sure you have PEFT installed: pip install peft
import torch
from peft import PeftModel
from transformers import AutoModelForCausalLM, AutoTokenizer

# Use your specific model name/path once uploaded
model_name_or_path = "tesslate/UIGEN-T2"  # Placeholder - replace with actual HF repo name
base_model_name = "Qwen/Qwen2.5-Coder-7B-Instruct"

# Load the base model
base_model = AutoModelForCausalLM.from_pretrained(
    base_model_name,
    torch_dtype=torch.bfloat16,  # or float16 if bf16 not supported
    device_map="auto",
)

# Load the PEFT model (LoRA weights)
model = PeftModel.from_pretrained(base_model, model_name_or_path)
tokenizer = AutoTokenizer.from_pretrained(base_model_name)  # Use base tokenizer

# Note the simplified prompt structure (no double 'think').
# The required system prompt goes in the system turn.
prompt = """<|im_start|>system
You are Tesslate, a helpful assistant specialized in UI generation.<|im_end|>
<|im_start|>user
Create a simple card component using Tailwind CSS with an image, title, and description.<|im_end|>
<|im_start|>assistant
"""  # Model will generate reasoning and code following this

inputs = tokenizer(prompt, return_tensors="pt").to(model.device)

# Recommended sampling parameters; adjust as needed
outputs = model.generate(**inputs, max_new_tokens=1024, do_sample=True, temperature=0.7, top_p=0.9)
print(tokenizer.decode(outputs[0], skip_special_tokens=True))
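The decoded text typically holds the reasoning trace followed by the generated markup. Here is a rough sketch of separating the two, assuming the model wraps its reasoning in the `<|begin_of_thought|>` / `<|end_of_thought|>` markers shown in the example reasoning earlier. `split_reasoning` is a hypothetical helper, and the markers may not survive decoding if a tokenizer treats them as special tokens:

```python
import re

# Matches one reasoning block, including newlines inside it.
THOUGHT_RE = re.compile(
    r"<\|begin_of_thought\|>(.*?)<\|end_of_thought\|>", re.DOTALL
)


def split_reasoning(text: str) -> tuple[str, str]:
    """Return (reasoning, remainder); reasoning is "" if no trace is found."""
    match = THOUGHT_RE.search(text)
    if match is None:
        return "", text.strip()
    reasoning = match.group(1).strip()
    # Everything outside the matched block is treated as the generated code.
    remainder = (text[: match.start()] + text[match.end():]).strip()
    return reasoning, remainder


if __name__ == "__main__":
    sample = (
        "<|begin_of_thought|>\nUse a card layout.\n<|end_of_thought|>\n"
        '<div class="p-4">Hi</div>'
    )
    reasoning, code = split_reasoning(sample)
    print(reasoning)
    print(code)
```

Note that `outputs[0]` from `generate` also contains the prompt tokens, so slice them off (for example `outputs[0][inputs["input_ids"].shape[1]:]`) before decoding if only the completion is wanted.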

Performance and Evaluation

- Strengths:
  - Generates semantically correct and well-structured HTML/Tailwind CSS.
  - Leverages a large dataset (50k samples) for improved robustness and diversity.
  - Incorporates design reasoning for more thoughtful UI outputs.
  - Improved usability via streamlined chat template.
  - Openly published LoRA checkpoints for community use.

- Weaknesses:
  - Currently limited to HTML/Tailwind CSS (Bootstrap planned).
  - Complex JavaScript interactivity requires manual implementation.
  - Reinforcement Learning refinement (for stricter adherence to principles/rewards) is a future step.

Technical Specifications

- Architecture: Transformer-based LLM adapted with PEFT/LoRA

- Base Model: Qwen/Qwen2.5-Coder-7B-Instruct

- Adapter Rank (LoRA): 128

- Training Data Size: 50,000 samples

- Precision: Trained using bf16/fp16. Base model requires appropriate precision handling.

- Hardware Requirements: Recommend GPU with >= 16GB VRAM for efficient inference (depends on quantization/precision).

- Software Dependencies:
  - Hugging Face Transformers (`transformers`)
  - PyTorch (`torch`)
  - Parameter-Efficient Fine-Tuning (`peft`)
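As a back-of-the-envelope check on the hardware recommendation above, weight memory scales with parameter count times bytes per parameter. The sketch below assumes a nominal 7 billion parameters (read off the "7B" in the model name, not an exact count). It ignores activations, the KV cache, and the LoRA adapter weights, so real usage will be higher. `weight_memory_gib` is a hypothetical helper:

```python
# Bytes needed to store one parameter at each precision.
BYTES_PER_PARAM = {"fp32": 4.0, "bf16": 2.0, "fp16": 2.0, "int8": 1.0, "int4": 0.5}


def weight_memory_gib(num_params: float, precision: str) -> float:
    """Approximate memory for model weights alone, in GiB."""
    return num_params * BYTES_PER_PARAM[precision] / 1024**3


if __name__ == "__main__":
    nominal_params = 7e9  # assumption: nominal size implied by "7B"
    for precision in ("bf16", "int8", "int4"):
        print(f"{precision}: ~{weight_memory_gib(nominal_params, precision):.1f} GiB")
```

Under these assumptions the bf16 weights alone come to roughly 13 GiB, which fits the >= 16GB guidance and shows why quantization lowers the requirement.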

Citation

If you use UIGEN-T2 or the LoRA checkpoints in your work, please cite us:

@misc{tesslate_UIGEN-T2,
  title={UIGEN-T2: Scaling UI Generation with Reasoning on Qwen2.5-Coder-7B},
  author={tesslate},
  year={2024},
  publisher={Hugging Face},
  url={https://huggingface.co/tesslate/UIGEN-T2}
}

Adjust the year if needed; the URL above is a placeholder.

Contact & Community
