All-atom Diffusion Transformers

Independent reproduction of the paper "All-atom Diffusion Transformers: Unified generative modelling of molecules and materials", by Chaitanya K. Joshi, Xiang Fu, Yi-Lun Liao, Vahe Gharakhanyan, Benjamin Kurt Miller, Anuroop Sriram*, and Zachary W. Ulissi* from FAIR Chemistry at Meta (* Joint last author).

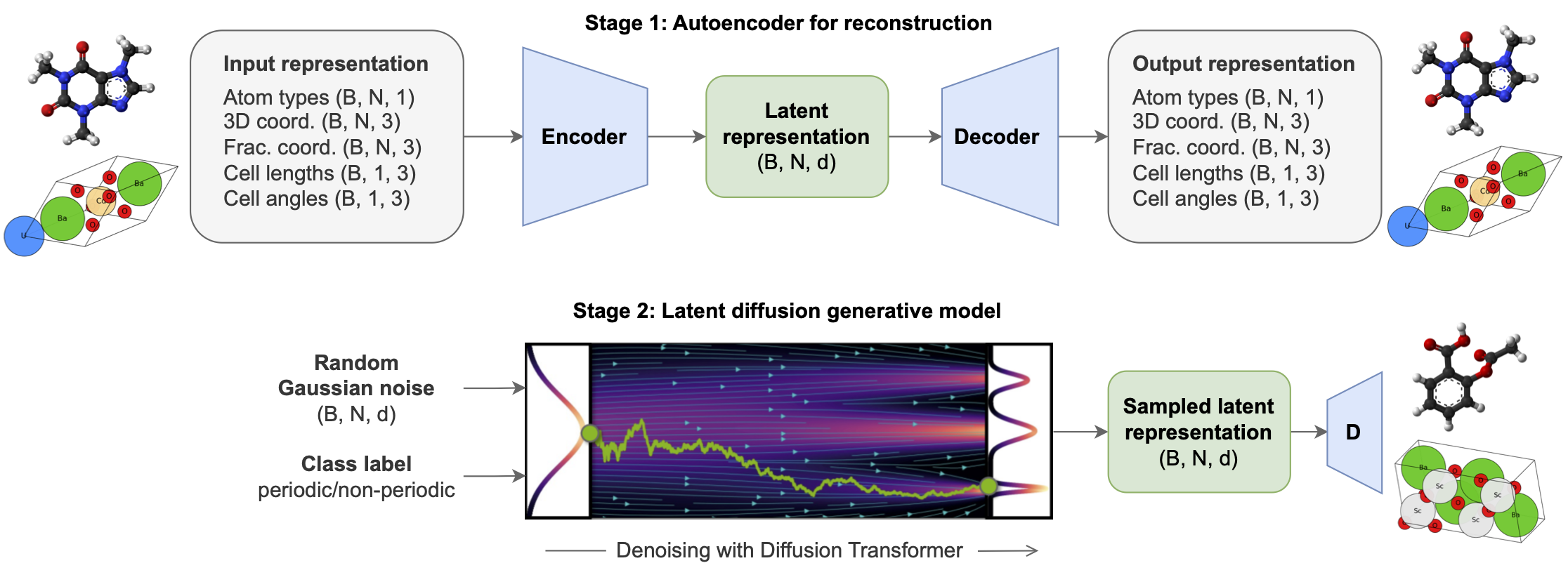

All-atom Diffusion Transformers (ADiTs) jointly generate both periodic materials and non-periodic molecular systems using a unified latent diffusion framework:

- An autoencoder maps unified, all-atom representations of molecules and materials to a shared latent embedding space; and

- A diffusion model is trained to generate new latent embeddings that the autoencoder can decode to sample new molecules or materials.
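The two stages above can be sketched as a data flow. This is a minimal toy illustration, not the ADiT implementation: `AtomSystem`, `sample_latents`, `toy_denoise`, and `toy_decode` are hypothetical names, the denoiser and decoder are placeholders for the trained networks, and the choices of one latent vector per atom and a `periodic` flag passed to the decoder are assumptions made only for this example.

```python
import random
from dataclasses import dataclass
from typing import Callable, List, Optional

Vector = List[float]


@dataclass
class AtomSystem:
    """Hypothetical unified container: a molecule is a system without a lattice."""
    atomic_numbers: List[int]
    positions: List[Vector]                 # one 3D coordinate per atom
    lattice: Optional[List[Vector]] = None  # 3x3 cell for crystals, None for molecules

    @property
    def is_periodic(self) -> bool:
        return self.lattice is not None


def sample_latents(denoise: Callable[[List[Vector], float], List[Vector]],
                   num_atoms: int, latent_dim: int, num_steps: int,
                   rng: random.Random) -> List[Vector]:
    """Start from Gaussian noise and refine it with `num_steps` denoiser calls."""
    latents = [[rng.gauss(0.0, 1.0) for _ in range(latent_dim)]
               for _ in range(num_atoms)]
    for step in range(num_steps):
        t = 1.0 - step / num_steps  # noise level, from 1 (pure noise) towards 0
        latents = denoise(latents, t)
    return latents


def toy_denoise(latents: List[Vector], t: float) -> List[Vector]:
    """Placeholder for the trained diffusion model: shrinks every latent by 10%."""
    return [[0.9 * x for x in row] for row in latents]


def toy_decode(latents: List[Vector], periodic: bool) -> AtomSystem:
    """Placeholder for the autoencoder's decoder: maps each latent to one atom."""
    atomic_numbers = [1 + int(abs(row[0]) * 10) % 8 for row in latents]
    positions = [row[1:4] for row in latents]
    lattice = ([[5.0, 0.0, 0.0], [0.0, 5.0, 0.0], [0.0, 0.0, 5.0]]
               if periodic else None)
    return AtomSystem(atomic_numbers, positions, lattice)


rng = random.Random(0)
latents = sample_latents(toy_denoise, num_atoms=5, latent_dim=4,
                         num_steps=10, rng=rng)
molecule = toy_decode(latents, periodic=False)  # non-periodic system
crystal = toy_decode(latents, periodic=True)    # periodic system with a unit cell
print(molecule.is_periodic, crystal.is_periodic, len(molecule.positions))
```

The point of the sketch is that sampling never touches atoms directly: the diffusion stage only produces latent embeddings, and the same decoder interface yields either a molecule or a crystal.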

Note that these checkpoints are the result of an independent reproduction of this research by Chaitanya K. Joshi, and may not correspond to the exact models/performance metrics reported in the final manuscript.

These checkpoints can be used to run inference as described in the README on GitHub.

Here is a minimal notebook for loading an ADiT checkpoint and sampling some crystals or molecules:

Examples of 10,000 sampled crystals and molecules are also available:

Citation

arXiv link: All-atom Diffusion Transformers: Unified generative modelling of molecules and materials

@article{joshi2025allatom,
  title={All-atom Diffusion Transformers: Unified generative modelling of molecules and materials},
  author={Chaitanya K. Joshi and Xiang Fu and Yi-Lun Liao and Vahe Gharakhanyan and Benjamin Kurt Miller and Anuroop Sriram and Zachary W. Ulissi},
  journal={arXiv preprint},
  year={2025},
}

Datasets used to train chaitjo/all-atom-diffusion-transformer

Evaluation results

- Validity Rate on QM9 (Unconditional Molecule Generation): 94.450

- Validity Rate on MP20 (Unconditional Crystal Generation): 91.920

- DFT S.U.N. Rate on MP20 (Unconditional Crystal Generation): 6.000