
Reproducing FineMath results

#50, opened by anyasims

I am trying to reproduce the FineMath results using the smollm library. Training runs without errors and the loss decreases nicely, but I cannot match the performance reported in the paper.

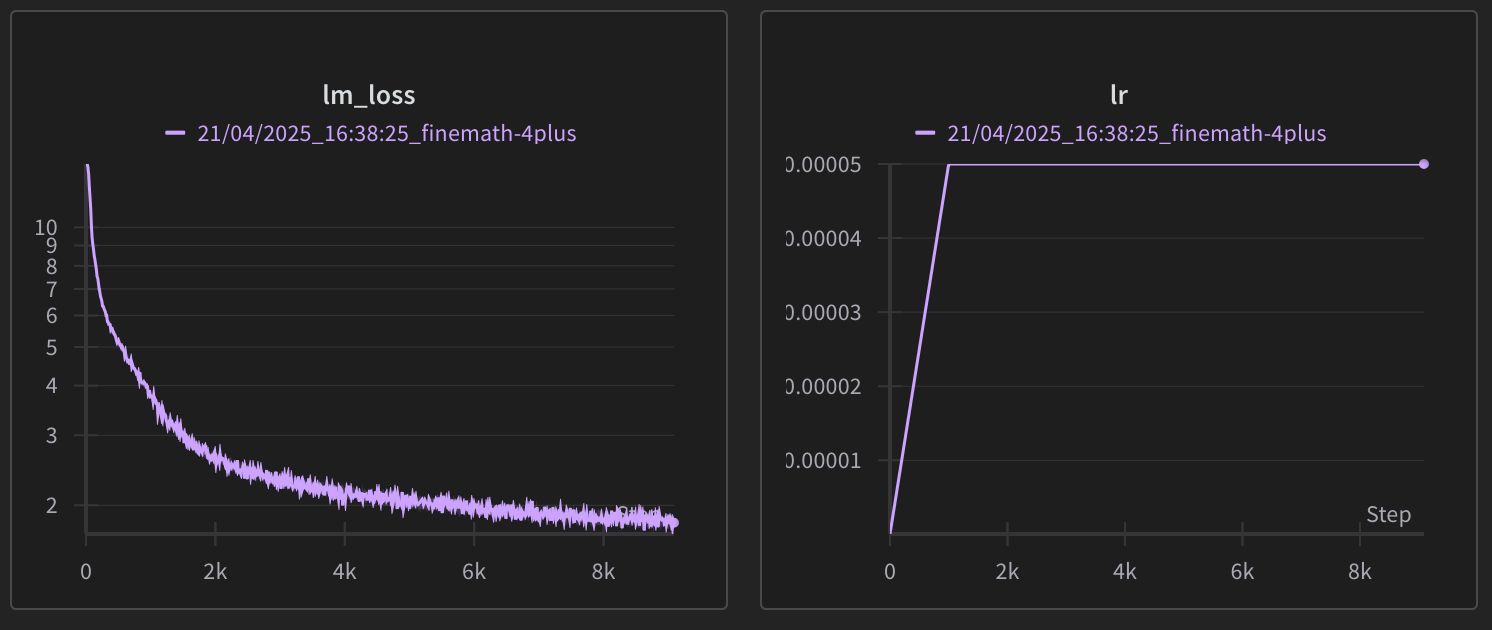

For example, the initial model starts at [GSM8K, MATH] = [0.26, 0.08]. I got these numbers by running the eval myself, and they match the paper. After training on ~10B tokens the model should reach [0.44, 0.17] (see figure), but in my run performance has collapsed to [0.003, 0.0018]. The loss curve looks fine (see below).
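
To show the size of the gap, here is a quick calculation that uses only the scores quoted above. The helper name `compare` is just illustrative. The reproduction doesn't merely fail to improve. It drops to roughly 1% (GSM8K) and 2% (MATH) of the starting model's score.

```python
def compare(baseline, expected, observed):
    """Per-benchmark gap between the expected and observed accuracy."""
    report = {}
    for bench, base in baseline.items():
        report[bench] = {
            # improvement the paper's figure implies after ~10B tokens
            "expected_gain": expected[bench] - base,
            # change actually seen in my run (negative = got worse)
            "observed_change": observed[bench] - base,
            # fraction of the initial model's score that survived training
            "retained_fraction": observed[bench] / base,
        }
    return report

# Accuracies copied from the numbers above: [GSM8K, MATH].
baseline = {"GSM8K": 0.26, "MATH": 0.08}
expected = {"GSM8K": 0.44, "MATH": 0.17}
observed = {"GSM8K": 0.003, "MATH": 0.0018}

report = compare(baseline, expected, observed)
for bench, r in report.items():
    print(f"{bench}: expected {r['expected_gain']:+.3f}, "
          f"observed {r['observed_change']:+.4f}, "
          f"retained {r['retained_fraction']:.1%} of baseline")
```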

Do you have any suggestions about what the issue could be? Also, could I compare my loss curve against the loss curves from the original runs?