HiDream-I1_HQ-models

Guide (External Site): English | Japanese

This repository collects high-quality model files for generating the best possible images with HiDream.

Left: HiDream-I1-Dev | Right: image-to-image (i2i) from an SDXL anime illustration

Model Details

- Transformers (HiDream-I1-Full, Dev, Fast): 17-billion-parameter models built on the Rectified Flow Transformer architecture, optimized for photorealistic, cartoon, and artistic image generation.

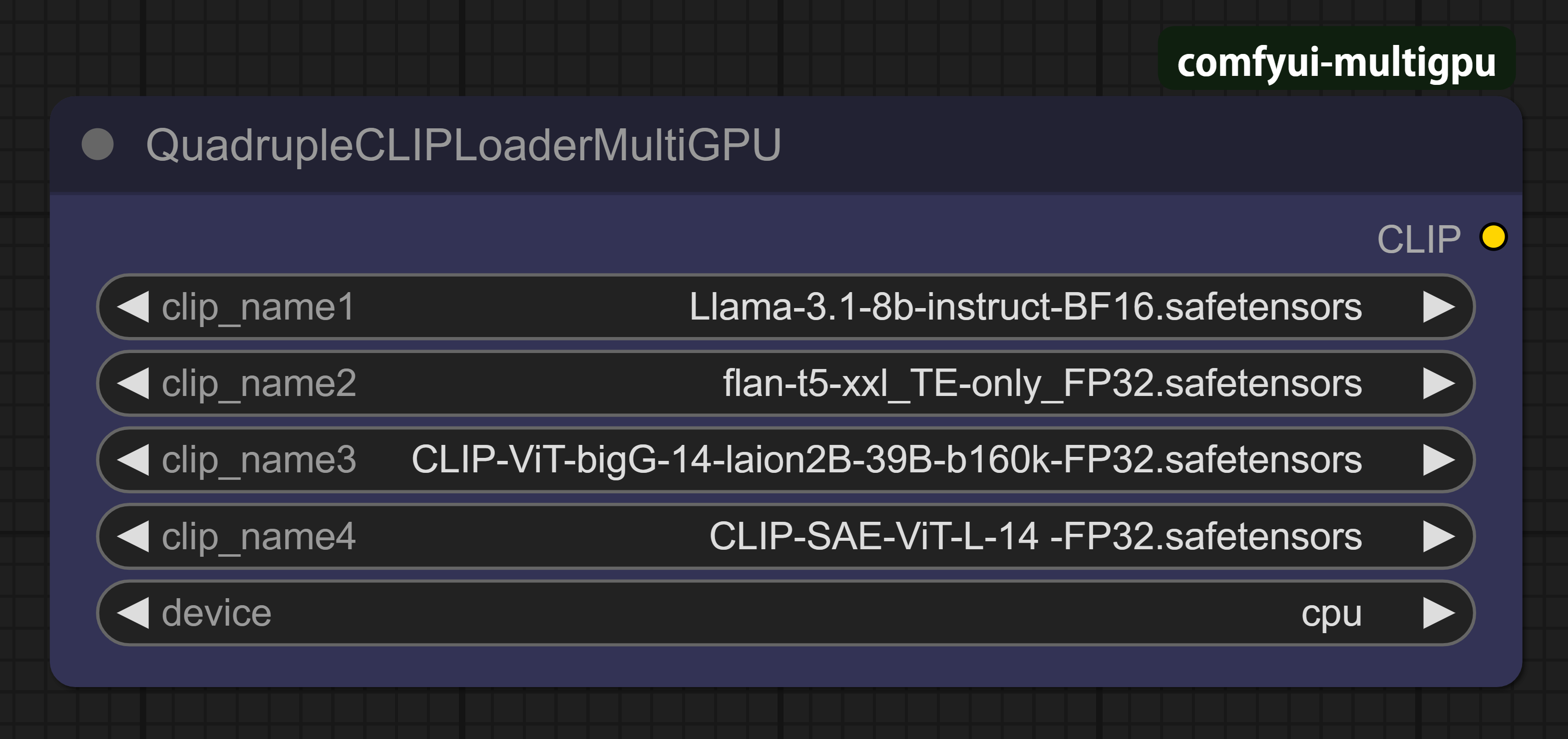

- Text Encoders: Encode the input text prompt to guide image generation. Four encoders are used: Llama-3.1, flan-t5-xxl, and two CLIP models.

- VAE: The variational autoencoder from FLUX.1 [schnell], used to encode images into latents and decode latents back into images.
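As a rough sanity check on memory requirements, the size of the raw weights is the parameter count multiplied by the bytes per parameter of the storage precision. The sketch below uses only the nominal counts from the text and model names (17B for the transformer, 8B for Llama-3.1) and ignores file headers and exact parameter totals, so real file sizes will differ somewhat.

```python
# Bytes per parameter for the precisions that appear in the model names.
BYTES_PER_PARAM = {"FP32": 4, "FP16": 2, "BF16": 2}


def weights_size_gb(num_params, precision):
    """Approximate size of the raw weights in decimal GB (1 GB = 10**9 bytes)."""
    return num_params * BYTES_PER_PARAM[precision] / 1e9


# Nominal parameter counts (approximations, not exact totals).
for name, params, precision in [
    ("HiDream-I1 transformer", 17e9, "BF16"),
    ("HiDream-I1 transformer", 17e9, "FP32"),
    ("Llama-3.1-8B-Instruct", 8e9, "BF16"),
    ("Llama-3.1-8B-Instruct", 8e9, "FP32"),
]:
    print(f"{name} @ {precision}: ~{weights_size_gb(params, precision):.0f} GB")
```

This prints roughly 34 GB and 68 GB for the transformer and 16 GB and 32 GB for Llama-3.1, which shows why the BF16/FP16 transformer alone is already larger than a 12 GB GPU.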

Model List

| Model Name | License | Type | Commercial Use |
|---|---|---|---|
| HiDream-I1-Full-FP16 | MIT | Transformer | ✅ |
| HiDream-I1-Dev-BF16 | MIT | Transformer | ✅ |
| HiDream-I1-Fast-BF16 | MIT | Transformer | ✅ |
| HiDream-E1-Full-BF16 | MIT | Transformer | ✅ |
| Llama-3.1-8B-Instruct-BF16 | Llama 3.1 Community License | Text encoder | ✅ |
| flan-t5-xxl_TE-only_FP32 | Apache 2.0 | Text encoder | ✅ |
| CLIP-ViT-bigG-14-laion2B-39B-b160k-FP32 | MIT | Text encoder | ✅ |
| CLIP-SAE-ViT-L-14-FP32 | MIT | Text encoder | ✅ |
| FLUX1-schnell-AE-FP32 | Apache 2.0 | VAE | ✅ |

- The Llama-3.1-8B-Instruct-BF16 model was created by merging the sharded files of the original Llama-3.1-8B-Instruct model into a single file (Built with Meta Llama 3).
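The exact merge procedure used for this repository is not described here. The following is only a minimal, standard-library sketch of one way to combine `.safetensors` shards, assuming the standard safetensors layout: an 8-byte little-endian header length, a JSON header giving each tensor's `dtype`, `shape` and `data_offsets`, then the raw tensor bytes. Tensor bytes are copied verbatim, so BF16 weights are preserved without conversion. The function names are hypothetical.

```python
import json
import struct
from pathlib import Path


def read_safetensors(path):
    """Return (header_dict, data_bytes) for a .safetensors file."""
    raw = Path(path).read_bytes()
    (header_len,) = struct.unpack("<Q", raw[:8])  # little-endian uint64
    header = json.loads(raw[8:8 + header_len])
    return header, raw[8 + header_len:]


def merge_safetensors(shard_paths, out_path):
    """Concatenate the tensors of several shards into one .safetensors file.

    Tensor bytes are copied as-is, so any dtype (including BF16) is kept.
    All shards are held in memory at once.
    """
    merged_header = {}
    chunks = []
    offset = 0
    for path in shard_paths:
        header, data = read_safetensors(path)
        header.pop("__metadata__", None)  # per-shard metadata is dropped
        for name, info in header.items():
            if name in merged_header:
                raise ValueError(f"duplicate tensor {name!r} in {path}")
            start, end = info["data_offsets"]
            chunks.append(data[start:end])
            # Re-base the offsets so tensors sit back to back in the new file.
            merged_header[name] = {
                "dtype": info["dtype"],
                "shape": info["shape"],
                "data_offsets": [offset, offset + (end - start)],
            }
            offset += end - start
    header_bytes = json.dumps(merged_header, separators=(",", ":")).encode("utf-8")
    header_bytes += b" " * (-len(header_bytes) % 8)  # pad header to 8-byte alignment
    with open(out_path, "wb") as f:
        f.write(struct.pack("<Q", len(header_bytes)))
        f.write(header_bytes)
        for chunk in chunks:
            f.write(chunk)
```

Usage might look like `merge_safetensors(sorted(Path("Llama-3.1-8B-Instruct").glob("model-*.safetensors")), "Llama-3.1-8B-Instruct-BF16.safetensors")`, where the directory and the `model-*` shard pattern are assumptions about how the original download is laid out. Because every shard is read fully into memory, this needs roughly as much free RAM as the merged file (about 16 GB for an 8B BF16 model).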

Lightweight models

If you are looking for a lightweight model, please refer to the following link.

HiDream Models

Text Encoder

Usage Instructions

Place the downloaded model files in the following directories.

To use the FP32 text encoders, enable the `--fp32-text-enc` option when launching ComfyUI.
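With a manual (git clone) install, ComfyUI is started by running its `main.py`, and the option is appended to that command. The helper below is a hypothetical convenience wrapper that only builds the command line; the `ComfyUI` directory name is an assumption about where it is installed.

```python
import sys
from pathlib import Path


def comfyui_command(comfyui_dir, fp32_text_enc=True):
    """Build the argument list that starts ComfyUI's main.py (hypothetical helper)."""
    cmd = [sys.executable, str(Path(comfyui_dir) / "main.py")]
    if fp32_text_enc:
        # Ask ComfyUI to keep the text encoder weights in FP32.
        cmd.append("--fp32-text-enc")
    return cmd


# To actually launch it (assumes ComfyUI was cloned into ./ComfyUI):
# import subprocess; subprocess.run(comfyui_command("ComfyUI"), check=True)
```

The shell equivalent is simply `python main.py --fp32-text-enc` run from the ComfyUI directory.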

Transformers

- Models
  - HiDream-I1-Full-FP16
  - HiDream-I1-Dev-BF16
  - HiDream-I1-Fast-BF16
  - HiDream-E1-Full-BF16
- Folder: `models/StableDiffusion`

Text encoders

- Models
  - Llama-3.1-8B-Instruct-BF16
  - flan-t5-xxl_TE_only_FP32
  - CLIP-ViT-bigG-14-laion2B-39B-b160k-FP32
  - CLIP-SAE-ViT-L-14-FP32
- Folder: `models/text_encoder` or `models/clip`

VAE

- Models
  - FLUX1-schnell-AE-FP32
- Folder: `models/vae`

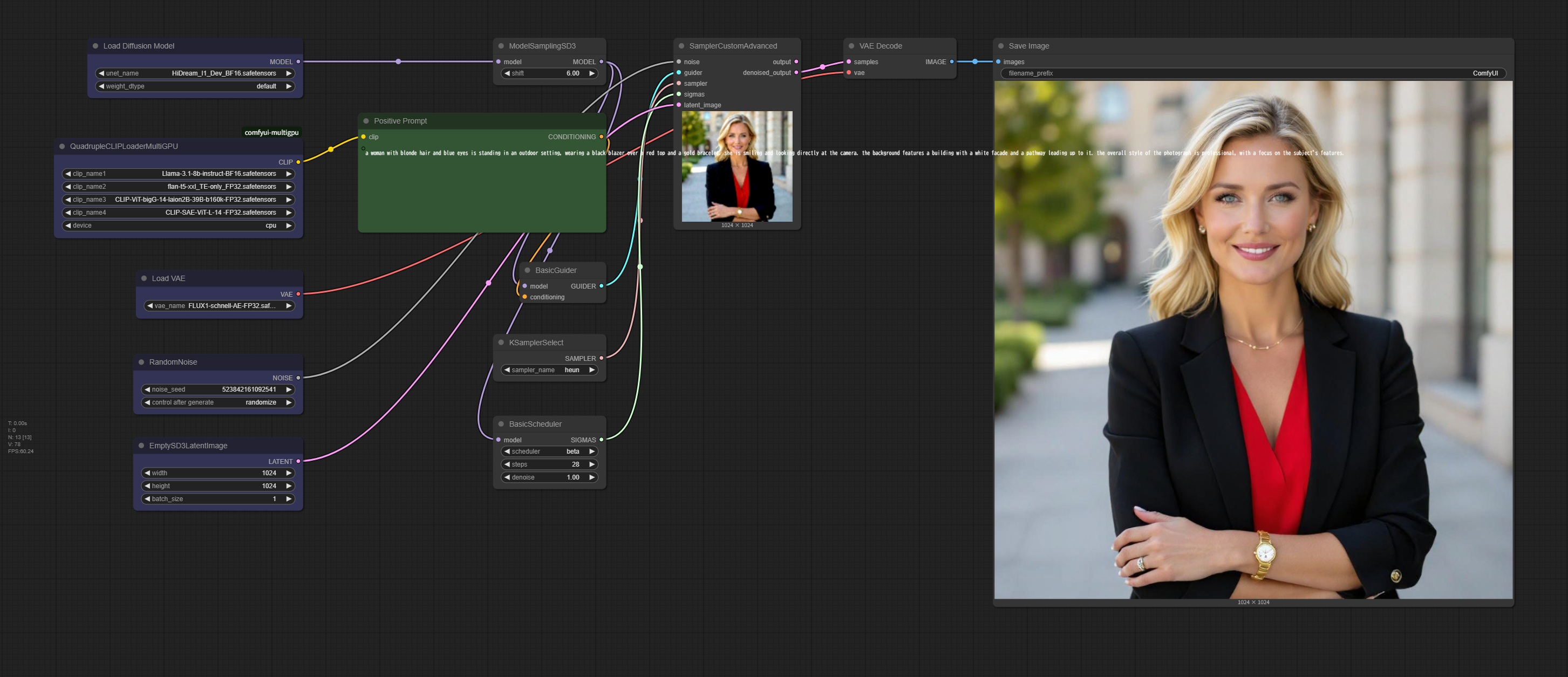

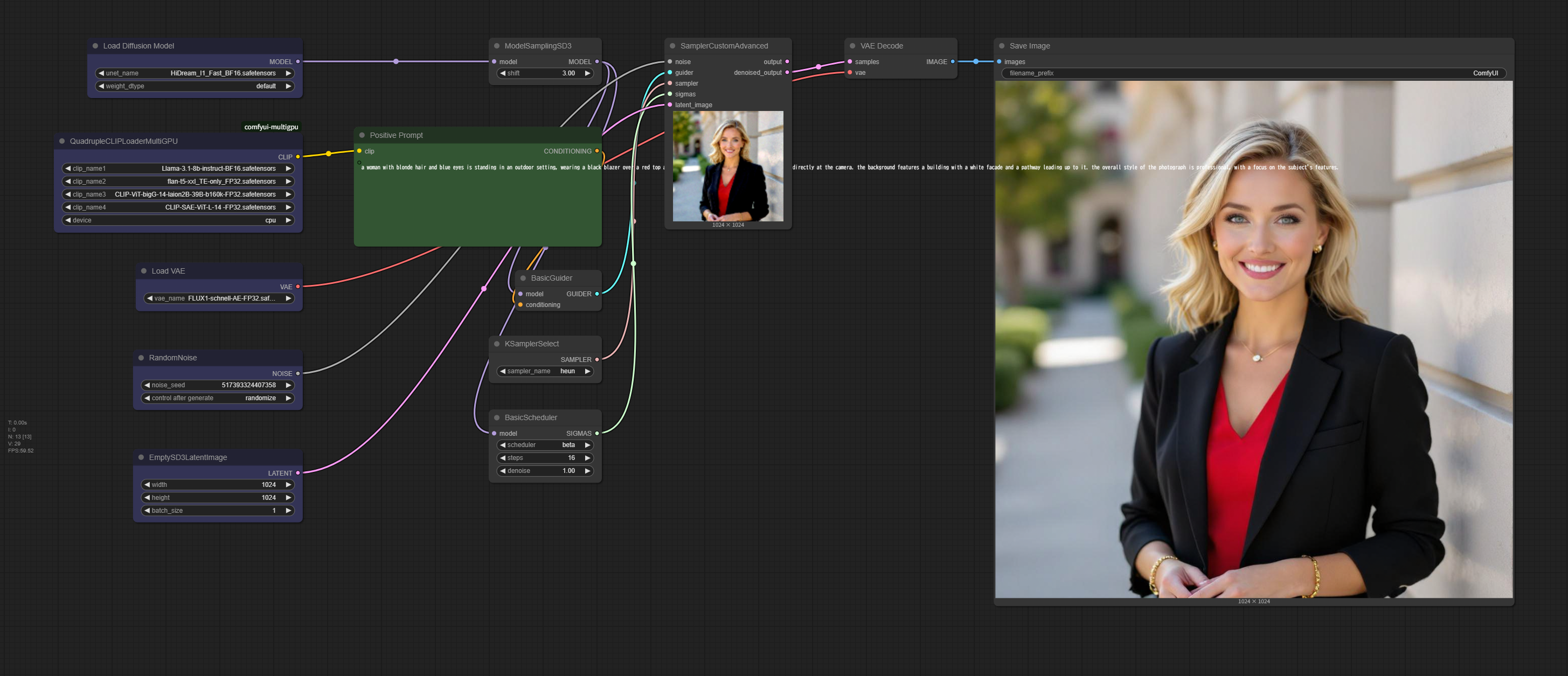

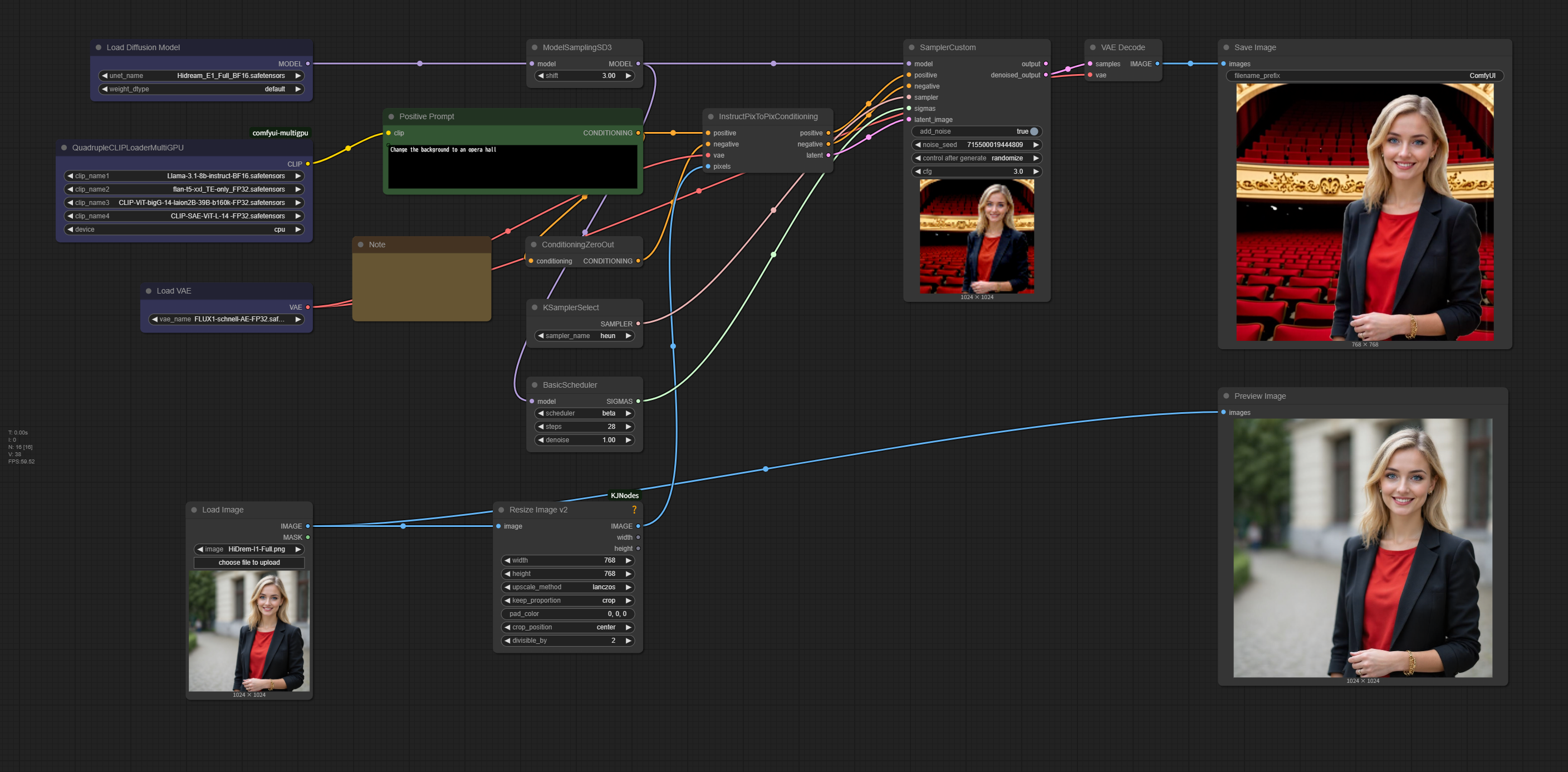
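If you download many files at once, moving them into place can be scripted. This is a minimal sketch, assuming each file is named after its model with a `.safetensors` extension (hypothetical file names) and using the folders listed above relative to the ComfyUI root; adjust both to match your actual downloads and install.

```python
import shutil
from pathlib import Path

# Folders from the list above, relative to the ComfyUI root.
TARGET_DIRS = {
    "transformer": Path("models/StableDiffusion"),
    "text_encoder": Path("models/text_encoder"),
    "vae": Path("models/vae"),
}

# Hypothetical file names: model names from the list plus ".safetensors".
MODEL_FILES = {
    "HiDream-I1-Full-FP16.safetensors": "transformer",
    "HiDream-I1-Dev-BF16.safetensors": "transformer",
    "HiDream-I1-Fast-BF16.safetensors": "transformer",
    "HiDream-E1-Full-BF16.safetensors": "transformer",
    "Llama-3.1-8B-Instruct-BF16.safetensors": "text_encoder",
    "flan-t5-xxl_TE-only_FP32.safetensors": "text_encoder",
    "CLIP-ViT-bigG-14-laion2B-39B-b160k-FP32.safetensors": "text_encoder",
    "CLIP-SAE-ViT-L-14-FP32.safetensors": "text_encoder",
    "FLUX1-schnell-AE-FP32.safetensors": "vae",
}


def place_models(download_dir, comfyui_root):
    """Move every known model file found in download_dir into its ComfyUI folder.

    Returns the destination paths of the files that were moved.
    """
    placed = []
    for filename, kind in MODEL_FILES.items():
        src = Path(download_dir) / filename
        if not src.is_file():
            continue  # not downloaded; skip it
        dst_dir = Path(comfyui_root) / TARGET_DIRS[kind]
        dst_dir.mkdir(parents=True, exist_ok=True)
        dst = dst_dir / filename
        shutil.move(str(src), str(dst))
        placed.append(dst)
    return placed
```

Files not listed in `MODEL_FILES` are left untouched, so the function is safe to run on a general downloads folder.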

ComfyUI Workflow

The FP16/BF16 models of HiDream-I1 require a minimum split capacity of 10.1 GB for the transformer in ComfyUI.

With 12 GB of VRAM and 64 GB of RAM, you can achieve the highest image quality using the following workflows.

HiDream-I1-Full

HiDream-I1-Dev

HiDream-I1-Fast

HiDream-E1-Full

To load the text encoders, use the QuadrupleCLIPLoaderMultiGPU custom node from ComfyUI-MultiGPU, and explicitly set `device` to `cpu` so that the text encoders are loaded into system RAM.

In my setup, the Heun sampler with the Beta scheduler produced higher-quality illustrations than the default settings.
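Expressed as a fragment of a ComfyUI API-format (JSON) workflow, the text encoder and sampler settings above might look like the sketch below. It is not a complete, queueable workflow. The node IDs, the assignment of encoders to the four `clip_name` slots, the `device` input name, and the file names are assumptions; check them against the nodes in your own ComfyUI and ComfyUI-MultiGPU install.

```python
import json

# Partial ComfyUI API-format workflow (assumed input names, see lead-in).
workflow_fragment = {
    "1": {
        "class_type": "QuadrupleCLIPLoaderMultiGPU",
        "inputs": {
            "clip_name1": "CLIP-SAE-ViT-L-14-FP32.safetensors",
            "clip_name2": "CLIP-ViT-bigG-14-laion2B-39B-b160k-FP32.safetensors",
            "clip_name3": "flan-t5-xxl_TE-only_FP32.safetensors",
            "clip_name4": "Llama-3.1-8B-Instruct-BF16.safetensors",
            "device": "cpu",  # load the text encoders into system RAM
        },
    },
    "2": {
        "class_type": "KSampler",
        "inputs": {
            # Only the settings discussed above; seed, steps, cfg, model,
            # conditioning and latent inputs are omitted from this fragment.
            "sampler_name": "heun",
            "scheduler": "beta",
        },
    },
}

print(json.dumps(workflow_fragment, indent=2))
```

In the graph editor the same settings correspond to choosing `cpu` in the loader's device dropdown and `heun` / `beta` in the KSampler node.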

License

This repository inherits the licenses of the original models:

- MIT License: Include the license text in your distribution.

- Apache 2.0 License: Include a copy of the license and retain the required notices.

- Llama 3.1 Community License: Include the statement "Built with Meta Llama 3" and adhere to the usage policy.

See the Model List for links to the full license texts.