Variational Autoencoder (VAE) - CelebA Dataset

This repository contains a Variational Autoencoder (VAE) trained on the CelebA dataset. The model encodes and decodes facial images, which enables image reconstruction, latent space interpolation, and attribute manipulation.

Model Details

- Architecture: Variational Autoencoder (VAE)

- Dataset: CelebA

- Latent Dimension: 200

- Training Subset Size: 80,000 images

- Batch Size: 64

- Learning Rate: 1e-3

- Epochs: 10
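As a rough guide to the scale of this run, the hyperparameters above can be collected into a small config object and used to work out the number of optimizer steps. This is only a sketch: the `TrainConfig` class and its field names are illustrative and not part of this repository, and the step counts assume that all 80,000 images are used for training with one optimizer step per batch.

```python
import math
from dataclasses import dataclass


@dataclass(frozen=True)
class TrainConfig:
    """Hyperparameters from the list above; the field names are illustrative."""

    latent_dim: int = 200
    subset_size: int = 80_000
    batch_size: int = 64
    learning_rate: float = 1e-3
    epochs: int = 10

    @property
    def steps_per_epoch(self) -> int:
        # ceil would count a final partial batch; 80,000 / 64 divides evenly
        return math.ceil(self.subset_size / self.batch_size)

    @property
    def total_steps(self) -> int:
        return self.steps_per_epoch * self.epochs


config = TrainConfig()
print(config.steps_per_epoch, config.total_steps)  # 1250 12500
```

Under these assumptions the run amounts to 1,250 steps per epoch and 12,500 steps in total.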

Weights and Biases Run

The training process was tracked using Weights and Biases. You can view the full training logs and metrics here.

Usage

Loading the Model

To load the trained model, use the following code snippet:

import torch

from vae_model import VAE  # Ensure the VAE class is defined in vae_model.py

# Define the latent dimension (must match the value used in training)
latent_dim = 200

# Initialize the model
model = VAE(latent_dim=latent_dim)

# Load the trained weights; map_location="cpu" also works on machines without a GPU
model_path = "./vae_celeba_latent_200_epochs_10_batch_64_subset_80000.pth"
model.load_state_dict(torch.load(model_path, map_location="cpu"))

# Switch to inference mode
model.eval()

Applications

- Image Reconstruction: Reconstruct input images using the encoder and decoder.

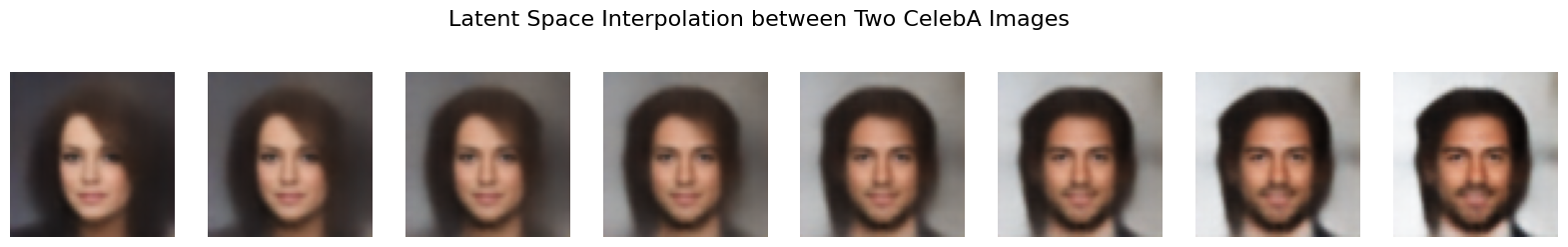

- Latent Space Interpolation: Generate smooth transitions between two images by interpolating in the latent space.

- Attribute Manipulation: Modify specific attributes (e.g., smiling, hair color) by moving along attribute directions in the latent space.

Example Results

Reconstruction

Below is a reconstruction example. The first row shows the original images and the second row shows their reconstructions:
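In code, a reconstruction pass might look like the following sketch. The interface of the `VAE` class is not documented here, so the example assumes the model exposes `encode(x)` returning a mean and log-variance, and `decode(z)` returning an image batch. `StandInVAE` is a hypothetical placeholder with a 64x64 RGB input size chosen for illustration; it is not the architecture of the released checkpoint.

```python
import torch
from torch import nn


class StandInVAE(nn.Module):
    """Hypothetical stand-in; NOT the architecture of the released checkpoint."""

    def __init__(self, latent_dim=200, image_shape=(3, 64, 64)):
        super().__init__()
        self.image_shape = image_shape
        n_pixels = image_shape[0] * image_shape[1] * image_shape[2]
        self.to_mu = nn.Linear(n_pixels, latent_dim)
        self.to_logvar = nn.Linear(n_pixels, latent_dim)
        self.from_latent = nn.Linear(latent_dim, n_pixels)

    def encode(self, x):
        flat = x.flatten(start_dim=1)
        return self.to_mu(flat), self.to_logvar(flat)

    def decode(self, z):
        out = torch.sigmoid(self.from_latent(z))  # pixel values in [0, 1]
        return out.view(-1, *self.image_shape)


@torch.no_grad()
def reconstruct(model, images):
    """Encode the images and decode the posterior mean (no sampling)."""
    mu, _logvar = model.encode(images)
    return model.decode(mu)


model = StandInVAE().eval()
images = torch.rand(4, 3, 64, 64)  # placeholder batch with values in [0, 1]
recon = reconstruct(model, images)

# Originals first, reconstructions second, ready to be laid out as two rows
comparison = torch.cat([images, recon], dim=0)
print(comparison.shape)
```

With the real model loaded as shown in the Usage section, `StandInVAE()` would be replaced by that model, provided it offers the same two methods. Passing `comparison` to `torchvision.utils.make_grid` with `nrow=4` would then place the originals in the first row and the reconstructions in the second.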

Latent Space Interpolation

Below is an example of interpolating between two images in the latent space:
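The interpolation itself is a weighted average of two latent vectors. The sketch below uses plain linear interpolation, which is one common choice and not necessarily the method used to produce the results in this repository. The helper name `interpolate_latents` is illustrative, and the two latent vectors are random placeholders for the encodings of two real images.

```python
import torch


def interpolate_latents(z_start, z_end, steps=8):
    """Return `steps` latent vectors evenly spaced on the line from z_start to z_end."""
    weights = torch.linspace(0.0, 1.0, steps).unsqueeze(1)  # shape [steps, 1]
    return (1.0 - weights) * z_start + weights * z_end      # shape [steps, latent_dim]


latent_dim = 200
z_a = torch.randn(latent_dim)  # placeholder for the encoding of the first image
z_b = torch.randn(latent_dim)  # placeholder for the encoding of the second image

path = interpolate_latents(z_a, z_b, steps=8)
print(path.shape)
```

Each row of `path` can then be passed through the decoder to obtain one frame of the transition, assuming the model exposes a `decode` method that accepts a batch of latent vectors.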

Attribute Manipulation

Manipulating the "Smiling" attribute in the latent space:
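One simple way to obtain an attribute direction is to subtract the mean latent vector of images without the attribute from the mean latent vector of images with it. CelebA ships binary attribute labels, including "Smiling", that can serve as the mask. The sketch below illustrates that approach on synthetic data; it is an assumption about how such a direction could be computed, not a description of how the results here were produced. The helper names are hypothetical.

```python
import torch


def attribute_direction(latents, has_attribute):
    """Mean latent of samples with the attribute minus mean latent of those without."""
    with_attr = latents[has_attribute].mean(dim=0)
    without_attr = latents[~has_attribute].mean(dim=0)
    return with_attr - without_attr


def shift_along(z, direction, strengths):
    """Return one shifted copy of z per strength value."""
    strengths = torch.as_tensor(strengths, dtype=z.dtype).unsqueeze(1)  # [n, 1]
    return z + strengths * direction                                    # [n, latent_dim]


# Synthetic stand-in data: random "encodings" and a random boolean label
torch.manual_seed(0)
latents = torch.randn(1000, 200)
smiling = torch.rand(1000) > 0.5

direction = attribute_direction(latents, smiling)
variants = shift_along(latents[0], direction, [-2.0, -1.0, 0.0, 1.0, 2.0])
print(variants.shape)
```

Decoding each row of `variants` would show the same face with the attribute weakened (negative strengths) or strengthened (positive strengths), assuming the latent space encodes the attribute roughly linearly.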

License

This project is licensed under the MIT License. See the LICENSE file for details.