LoRA that aims to improve the vividness of generated scenarios.

Will produce NSFW output!!

Basically, a lewded, deshivered version of Mistral that hopefully keeps to the scene better.

The Mistral preset in ST produces replies of varying length that adhere better to the situation. I recommend it over the Roleplay preset, which almost always fills up the response length and, in my opinion, is drier.

Extra stopping strings for Mistral preset: ["[", "### Scenario:", "[ End ]", "#", "User:", "INS", "{{user}}:", "IST"]
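If your frontend has no stopping-string setting, the same list can be applied by hand after generation. What follows is only a rough sketch of that idea, not how ST does it internally: `truncate_at_stop` is a hypothetical helper name, matching is case-sensitive, and `{{user}}:` is treated as a literal string here (a frontend would normally substitute the user's name first).

```python
# Stopping strings copied from the list above.
STOP_STRINGS = ["[", "### Scenario:", "[ End ]", "#", "User:", "INS", "{{user}}:", "IST"]


def truncate_at_stop(text, stop_strings=STOP_STRINGS):
    """Cut `text` at the earliest occurrence of any stopping string.

    Hypothetical helper for illustration; matching is plain,
    case-sensitive substring search.
    """
    cut = len(text)
    for stop in stop_strings:
        idx = text.find(stop)
        if idx != -1:
            cut = min(cut, idx)
    # Drop trailing whitespace left in front of the stop string.
    return text[:cut].rstrip()


if __name__ == "__main__":
    raw = "She smiled and waved.\nUser: hello"
    print(truncate_at_stop(raw))
```

Note that short entries such as "#" and "[" will also cut replies that legitimately contain those characters, so trim the list if that gets in the way.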

Very important! Make the first three message pairs as good as possible, and the ride will become smoother after that.

I would also advise against using asterisks at all. The model will mess them up eventually, and although not critical to performance, it might get annoying later.

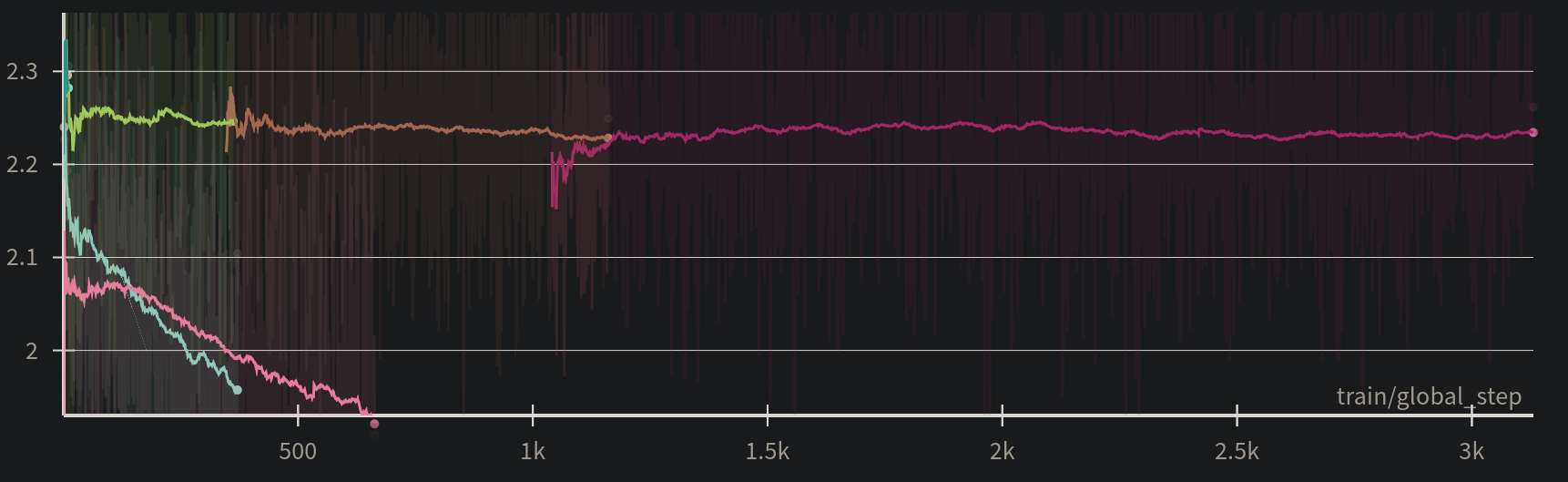

The adapter was trained in three phases. The first and largest phase used many diverse conversations. The second and third phases used lewding content.

Please send feedback, logs, your favourite settings, or virtually anything else you wish to share.
