license: cc-by-4.0

task_categories:

- object-detection

language:

- en

8-Calves Dataset

A benchmark dataset for occlusion-rich object detection, identity classification, and multi-object tracking. Features 8 Holstein Friesian calves with unique coat patterns in a 1-hour video with temporal annotations.

Overview

This dataset provides:

- 🕒 1-hour video (67,760 frames @20 fps, 600x800 resolution)

- 🎯 537,908 verified bounding boxes with calf identities (1-8)

- 🖼️ 900 hand-labeled static frames for detection tasks

- Designed to evaluate robustness in occlusion handling, identity preservation, and temporal consistency.

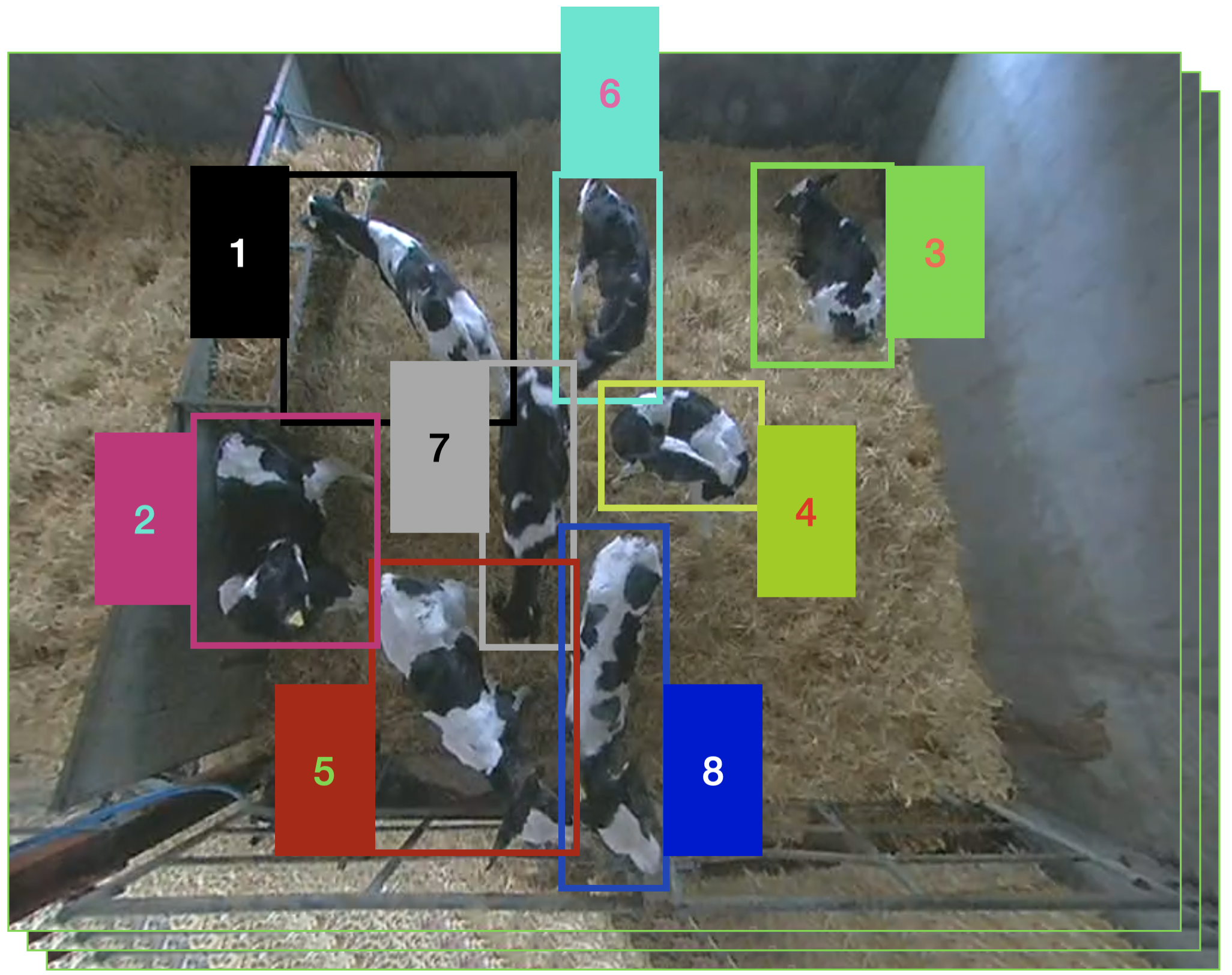

*Example frame with bounding boxes (green) and calf identities. Challenges include occlusion, motion blur, and pose variation.*

*Example frame with bounding boxes (green) and calf identities. Challenges include occlusion, motion blur, and pose variation.*

Key Features

- Temporal Richness: 1-hour continuous recording (vs. 10-minute benchmarks like 3D-POP)

- High-Quality Labels:

- Generated via ByteTrack + YOLOv8m pipeline with manual correction

- <0.56% annotation error rate

- Unique Challenges: Motion blur, pose variation, and frequent occlusions

- Efficiency Testing: Compare lightweight (e.g., YOLOv9t) vs. large models (e.g., ConvNextV2)

Dataset Structure

hand_labelled_frames/ # 900 manually annotated frames and labels in YOLO format, class=0 for cows

pmfeed_4_3_16.avi # 1-hour video (4th March 2016)

pmfeed_4_3_16_bboxes_and_labels.pkl # Temporal annotations

Annotation Details

PKL File Columns:

| Column | Description |

|---|---|

class |

Always 0 (cow detection) |

x, y, w, h |

YOLO-format bounding boxes |

conf |

Ignore (detections manually verified) |

tracklet_id |

Calf identity (1-8) |

frame_id |

Temporal index matching video |

Load annotations:

import pandas as pd

df = pd.read_pickle("pmfeed_4_3_16_bboxes_and_labels.pkl")

Usage

Dataset Download:

Step 1: install git-lfs:

git lfs install

Step 2:

git clone [email protected]:datasets/tonyFang04/8-calves

Step 3: install conda and pip environments:

conda create --name new_env --file conda_requirements.txt

pip install -r pip_requirements.txt

Object Detection

- Training/Validation: Use the first 600 frames from

hand_labelled_frames/(chronological split). - Testing: Evaluate on the full video (

pmfeed_4_3_16.avi) using the provided PKL annotations. - ⚠️ Avoid Data Leakage: Do not use all 900 frames for training - they are temporally linked to the test video.

Recommended Split:

| Split | Frames | Purpose |

|---|---|---|

| Training | 500 | Model training |

| Validation | 100 | Hyperparameter tuning |

| Test | 67,760 | Final evaluation |

Benchmarking YOLO Models:

Step 1:

cd 8-calves/object_detector_benchmark. Run

./create_yolo_dataset.sh and

create_yolo_testset.py. This creates a YOLO dataset with the 500/100/67760 train/val/test split recommended above.

Step 2: find the Albumentations class in the data/augment.py file in ultralytics source code. And replace the default transforms to:

# Transforms

T = [

A.RandomRotate90(p=1.0),

A.HorizontalFlip(p=0.5),

A.RandomBrightnessContrast(p=0.4),

A.ElasticTransform(

alpha=100.0,

sigma=5.0,

p=0.5

),

]

Step 3: run the yolo detectors following the following commands:

cd yolo_benchmark

Model_Name=yolov9t

yolo cfg=experiment.yaml model=$Model_Name.yaml name=$Model_Name

Benchmark Transformer Based Models:

Step 1: run the following commands to load the data into yolo format, then into coco, then into arrow:

cd 8-calves/object_detector_benchmark

./create_yolo_dataset.sh

python create_yolo_testset.py

python yolo_to_coco.py

python data_wrangling.py

Step 2: run the following commands to train:

cd transformer_benchmark

python train.py --config Configs/conditional_detr.yaml

Temporal Classification

- Use

tracklet_id(1-8) from the PKL file as labels. - Temporal Split: 30% train / 30% val / 40% test (chronological order).

Benchmark vision models for temporal classification:

Step 1: cropping the bounding boxes from pmfeed_4_3_16.mp4 using the correct labels in pmfeed_4_3_16_bboxes_and_labels.pkl. Then convert the folder of images cropped from pmfeed_4_3_16.mp4 into lmdb dataset for fast loading:

cd identification_benchmark

python crop_pmfeed_4_3_16.py

python construct_lmdb.py

Step 2: get embeddings from vision model:

cd big_model_inference

Use inference_resnet.py to get embeddings from resnet and inference_transformers.py to get embeddings from transformer weights available on Huggingface:

python inference_resnet.py --resnet_type resnet18

python inference_transformers.py --model_name facebook/convnextv2-nano-1k-224

Step 3: use the embeddings and labels obtained from step 2 to conduct knn evaluation and linear classification:

cd ../classification

python train.py

python knn_evaluation.py

Key Results

Object Detection (YOLO Models)

| Model | Parameters (M) | mAP50:95 (%) | Inference Speed (ms/sample) |

|---|---|---|---|

| YOLOv9c | 25.6 | 68.4 | 2.8 |

| YOLOv8x | 68.2 | 68.2 | 4.4 |

| YOLOv10n | 2.8 | 64.6 | 0.7 |

Identity Classification (Top Models)

| Model | Accuracy (%) | KNN Top-1 (%) | Parameters (M) |

|---|---|---|---|

| ConvNextV2-Nano | 73.1 | 50.8 | 15.6 |

| Swin-Tiny | 68.7 | 43.9 | 28.3 |

| ResNet50 | 63.7 | 38.3 | 25.6 |

Notes:

- mAP50:95: Mean Average Precision at IoU thresholds 0.5–0.95.

- KNN Top-1: Nearest-neighbor accuracy using embeddings.

- Full results and methodology: arXiv paper.

License

This dataset is released under CC-BY 4.0.

Modifications/redistribution must include attribution.

Citation

@article{fang20248calves,

title={8-Calves: A Benchmark for Object Detection and Identity Classification in Occlusion-Rich Environments},

author={Fang, Xuyang and Hannuna, Sion and Campbell, Neill},

journal={arXiv preprint arXiv:2503.13777},

year={2024}

}

Contact

Dataset Maintainer:

Xuyang Fang

Email: [email protected]